Updated November 26, 2025

A recent Clutch survey found that 84% of consumers want brands to disclose AI-generated photos. But how do you provide AI disclaimers that protect trust without slowing your execution plans?

AI-generated content is everywhere, from product images to social media posts. For your team, the speed of adoption pays off until one unlabeled AI photo or vague "AI" blurb erodes your audience's trust.

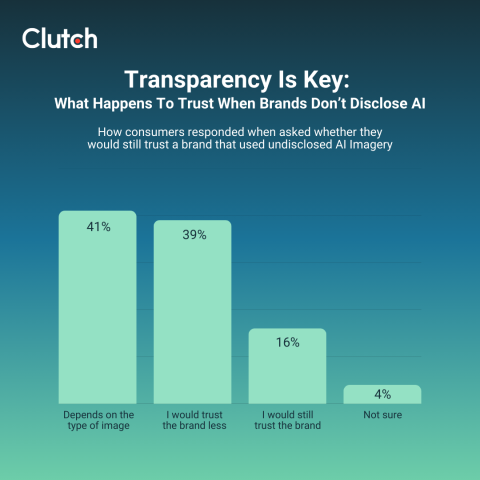

J. Crew's AI product image controversy shows how just a few undisclosed AI photos can cause a backlash. In fact, the same Clutch survey shows that 39% of consumers would trust a brand less if there were no disclosure, and 41% said their trust would depend on the type of image.


However, if you build a clear, visible AI disclaimer playbook, you protect credibility without killing creativity.

Here are five types of AI disclaimer statements, plus tips on creating your own disclaimer that will help safeguard your brand's reputation.

You don't need legalese to be transparent about your use of AI. Simply provide clear labels at the point of experience, with a secondary link to a comprehensive policy for anyone who wants more details. That transparency builds trust, turning your AI disclaimer into a win.

As Josh Webber, CEO of Big Red Jelly, says, "Hiding the use of AI is a form of deception; no matter how minor, it erodes trust. Transparency shows you respect your audience and have nothing to hide."

Here are a few use cases to model your copy on.

AI imagery is now standard across marketing campaigns and on-site visuals. When you publish it, say so, right next to the image.

Heinz ran a widely covered "A.I. Ketchup" campaign built on images made with DALL-E 2. The official campaign materials explicitly frame the creative as AI-generated, making the disclosure the point of the ads.

If you produce AI visuals for marketing campaigns, make the label part of the creative wrapper.

Burger King's Million Dollar Whopper campaign let people design their own Whopper, instantly generate AI images, and spin those into shareable AI-generated Whopper ads.

The brand used the wording "create a really cool AI-generated Whopper Ad" explicitly in its FAQs and contest flow. That phrasing clearly told customers that the burger images they were seeing were created by AI, not shot in a studio.

Adobe Stock requires contributors to mark submissions as generative AI, with specific labeling rules and even a "Created using generative AI tools" checkbox. This is a clean reference for your asset library and CMS workflows.

Adobe Stock surfaces AI-generated content directly on image pages. On multiple AI illustration listings, the site places a visible line item, "Generated with AI - Editorial use must not be misleading or deceptive."

Product visuals are especially sensitive because they influence purchase decisions. When images are synthetic, or when you're simulating a try-on with AI, the best practice is to label them accordingly.

Levi Strauss & Co. tested using AI-generated models via Lalaland.ai to supplement human models. In the company's public announcement, it plainly called these assets "AI-generated models," phrasing that your product detail pages can mirror when you use synthetic models or 3D proxies.

Wayfair's Decorify tool lets shoppers upload a room photo and instantly see photorealistic, shoppable redesigns produced by a generative model. The site clearly introduces the mock-up experience as "your shoppable AI interior designer."

That's the kind of straight-talking AI disclosure statement you can mirror on your product mock-ups and early concept drops.

L'Oréal's virtual makeup try-on pages describe the experience as a simulation using augmented reality and artificial intelligence, setting clear expectations that what you're seeing is an approximation.

The try-on tools page clearly says, "It uses augmented reality technology to create a highly realistic virtual makeup application."

Does your brand post blogs or newsletters summarizing news or reviews? Here are a few strong examples of how to handle disclaimers for these AI uses.

On its news article pages, USA Today preloads a short list of AI-suggested questions. Click one, and a compact, two-to-three-paragraph AI summary opens above the fold, clearly marked as "AI-generated by USA TODAY."

The disclosure sits at the top of the module, not buried in a footer. The larger rollout is DeeperDive, an AI answer engine that USA Today and parent company Gannett deployed site-wide in 2025.

Amazon labels review summaries directly on product pages and explains the feature publicly, making it clear the paragraph is "AI-generated from the text of customer reviews." The disclaimer helps customers understand they're reading an AI-generated summary of multiple reviews rather than editorial or seller-written text.

Newsletters

OpenAI's new ChatGPT Pulse delivers a daily, personalized brief as a set of visual cards generated from users' chats and memory.

In OpenAI's announcement and Help Center, the experience is explicitly framed as "AI research done overnight and shipped in the morning," with a prominent "preview" label and a limitations note warning that Pulse "won't always get things right."

That framing functions as the primary disclosure: You're consuming AI-generated summaries inside ChatGPT, with safety checks applied and clear boundaries around what the system does and doesn't do.

If your site search or recommendations use a conversational model, say so clearly in the interface and on your terms pages.

UN Women labels its AI search directly on the search experience and uses a clear, two-part disclosure: a short, in-context prompt plus a full modal disclaimer. For example, its full disclaimer clearly says, "This AI-powered search is provided by UN Women as a tool to assist users."

Expedia labels its conversational AI agent with clear, in-experience disclosures and a formal terms page that spells out exactly how AI-generated suggestions work. It says, "Responses you receive while using AI Agent are generated by a machine and not written by a human."

Expedia's disclaimer also clearly names the generator ("AI"), ties it to recommendations (hotels, flights, activities), and sets accuracy/availability expectations inside the experience. This approach is beneficial if your product pages or chat modules surface AI-driven suggestions before checkout.

AI-generated text disclaimers apply to any copy visible to customers, including captions, headlines, and product descriptions.

YouTube sets a pragmatic benchmark for disclosure. Its policy requires creators to label "meaningfully altered or synthetically generated" videos when the content appears realistic, i.e., something a viewer could mistake for a real person, place, scene, or event.

The label "Altered or synthetic content" surfaces in the description. For sensitive categories (health, news, elections, finance), YouTube may also place a more prominent on-player label.

If you want a clean, on-creative disclosure, Coca-Cola's 2023 holiday spots are the reference point. The ads prominently display the tag "Create Real Magic" and clearly mention "artwork with the power of DALL-E and GPT."

The Google Merch store offers an easy-to-follow model for clean product-page disclosures that scale across SKUs. Its AI disclaimer reads, "This product description was generated using Gemini AI." The label also sits prominently inside the description, so there's no ambiguity about authorship.

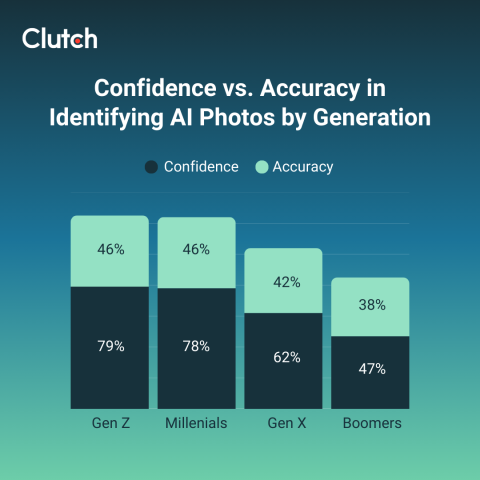

Most people can't reliably tell AI visuals from real photography. In the Clutch survey, 57% of consumers failed to identify AI-generated photos, even though 66% said they felt confident beforehand. That confidence gap is where mistakes creep in and trust slips.

Consumer trust is becoming increasingly fragile in the AI era. Thirty-nine percent of consumers say they would trust brands that don't disclose AI use less. Another 41% say "it depends," which means context and execution matter.

Most importantly, there's little downside: an AI disclosure statement doesn't automatically hurt conversion. For product imagery, 42% said AI usage had no impact on purchase likelihood, and 33% felt more positively. Again, how you use and label AI matters as much as the technology itself.

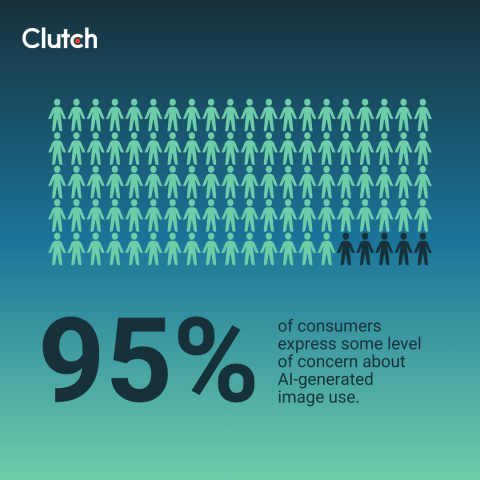

However, concerns are real: 95% reported some level of worry about AI-generated images, particularly regarding deception (71%) and authenticity (65%). The good news is that clear disclosures can help defuse both worries.

David Gaz, Managing Partner of The Bureau of Small Projects, warns, "If brands don't disclose AI imagery, they risk losing the trust of their customers and their audience. Trust is currency right now and in short supply." Think of disclosure as preventative care for your brand: visible, simple, and effective.

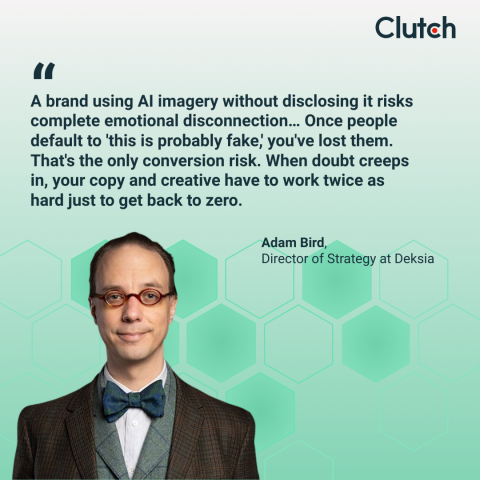

Adam Bird, Director of Strategy at Deksia, agrees: "A brand using AI imagery without disclosing it risks complete emotional disconnection… Once people default to 'this is probably fake,' you've lost them." That's the real conversion risk. When doubt creeps in, your copy and creative have to work twice as hard just to get back to zero.

Now, let's look at a few actionable best practices you can start implementing today.

Skip euphemisms and simply say "AI-generated image" or "AI-assisted recommendation." Industry jargon or over-explaining can alienate potential customers.

AI disclosures should closely match your brand voice. The goal should always be clarity that stays true to your brand identity.

Place AI disclaimers where attention is already drawn, such as in image captions, card footers, section headers, tooltips on icons, and a persistent "AI" tag in chat headers. Then, back them up with a visible policy page.

If you label AI images on your blog but not on product pages, customers may notice the discrepancy. Use the same phrasing and placement conventions across ads, your website, emails, and social channels.

Tie your AI disclosures to the values of honesty, respect, and transparency that you express in your communications and trust pages.

For clarity and depth, structure your disclosure in two parts: a short label at the point of experience, plus a one-click link to your full AI policy.

This way, you balance clarity in content with full transparency for customers who want details.

If you use AI in public-facing work, make disclosure part of the craft, not an afterthought. Clear, visible AI disclosures tell buyers you respect their judgment. They also give your teams guardrails to scale AI use across campaigns, ads, websites, and newsletters, without creating confusion.

Put the AI labels where they matter and keep the policy one click away. That rhythm protects your reputation while you keep shipping innovative products and services.

If you want a faster path, partner with a branding agency that has already operationalized AI disclosures. Begin your shortlist with vetted top branding agencies on Clutch, and start using and disclosing AI responsibly for your brand.