Updated January 29, 2026

Many consumers rely on wearable devices like fitness bands and smartwatches to track sleep patterns, count steps, monitor calories, and measure other health indicators. While this data can empower healthier lifestyle choices, it is also deeply personal, making it especially valuable and attractive to threat actors, advertisers, and other third parties.

Many consumers are aware of these risks. According to Clutch data, 74% are concerned about the security of their wearable data, but only 58% feel confident their data is protected.

Just how secure is user data on wearable tech devices? The answer depends on several factors, including what data is collected, how companies store and share it, and which regulatory frameworks govern consumer health information.

This guide explores why wearable data is so sensitive, how current industry practices affect consumer trust, and what companies and users can do to better protect personal information.

Wearables collect a wide range of data points, including heart rate, sleep duration and quality, step counts, location data, and other biometric signals. Some devices also gather information related to menstrual cycles, stress levels, exercise intensity, and dietary patterns.

Over time, this data creates a detailed, continuous record of a user's daily habits and physical state. Because these patterns are inherently identifying, especially when multiple data points are combined, they're difficult to fully anonymize.

For instance, a wearable could collect information about a user's periods over the years, when they're most likely to be fertile, whether they're using birth control, how many calories they burn on average in a day, and how their mood changes from day to day.

How wearable data is collected and stored makes it even more sensitive. Many devices continuously transmit data to cloud-based servers for personalization, analytics, and long-term tracking. Without strong security controls and transparent data-handling practices, this ongoing flow of information increases the risk of unauthorized access, breaches, or information misuse.

Wearable technology has raised multiple ethical and transparency concerns about how user data is collected, shared, and governed.

One major issue is opaque data practices. Many wearables store and share data with cloud services and third-party partners, with minimal clarity about where that data goes or how it's ultimately used.

According to a study analyzing the privacy policies of 17 leading wearable technology manufacturers, high-risk ratings were the most common for transparency reporting (76%) and vulnerability disclosure (65%). Certain brands, including Xiaomi and Wyze, had the highest cumulative risk scores, while others, such as Apple and Polar, ranked lowest, with less risky, more transparent policies.

Another concern is the lack of meaningful consent. During device setup, users are frequently presented with long, complex privacy policies that are difficult to understand. To get past the screen and start using their new purchase, many scroll through these documents and check "agree" without fully digesting what they're consenting to.

Finally, wearables pose re-identification risks, since even "anonymized" data can sometimes be traced back to individuals. Research has shown correct identification rates ranging from 86% to 100% in certain datasets. In some cases, just 1 to 300 seconds of health-related sensor data was enough to re-identify individuals from sources previously assumed to be non-identifying.
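To illustrate why combining data points undermines anonymization, here is a minimal Python sketch. It uses a small, entirely hypothetical set of "anonymized" wearable records (no names or IDs) and measures what share of records become unique as more attributes are combined. A record that is unique on a combination of attributes can be linked back to a person by anyone who already knows those few facts about them. The records, field names, and the `unique_fraction` helper are invented for illustration; this is not the method used in the research cited above.

```python
from collections import Counter

# Hypothetical "anonymized" wearable records: no names or device IDs, only
# attributes a device might log or infer. All values are invented.
RECORDS = [
    {"zip3": "100", "age_band": "30-39", "resting_hr": 58, "avg_sleep_h": 7.5},
    {"zip3": "100", "age_band": "30-39", "resting_hr": 58, "avg_sleep_h": 6.0},
    {"zip3": "100", "age_band": "40-49", "resting_hr": 58, "avg_sleep_h": 7.5},
    {"zip3": "112", "age_band": "30-39", "resting_hr": 58, "avg_sleep_h": 6.0},
    {"zip3": "112", "age_band": "30-39", "resting_hr": 71, "avg_sleep_h": 8.0},
    {"zip3": "112", "age_band": "40-49", "resting_hr": 62, "avg_sleep_h": 7.5},
]


def unique_fraction(records, fields):
    """Return the share of records whose combination of `fields` occurs exactly once."""
    keys = [tuple(record[f] for f in fields) for record in records]
    counts = Counter(keys)
    unique = sum(1 for key in keys if counts[key] == 1)
    return unique / len(records)


if __name__ == "__main__":
    fields = ["zip3", "age_band", "resting_hr", "avg_sleep_h"]
    # Add one attribute at a time and report how many records stand out.
    for n in range(1, len(fields) + 1):
        subset = fields[:n]
        print(f"{subset}: {unique_fraction(RECORDS, subset):.0%} of records unique")
```

In this toy data, no record is unique by region alone, but every record is unique once all four attributes are combined. Real wearable datasets hold far more attributes and continuous sensor streams, which is why the re-identification rates reported above can be so high.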

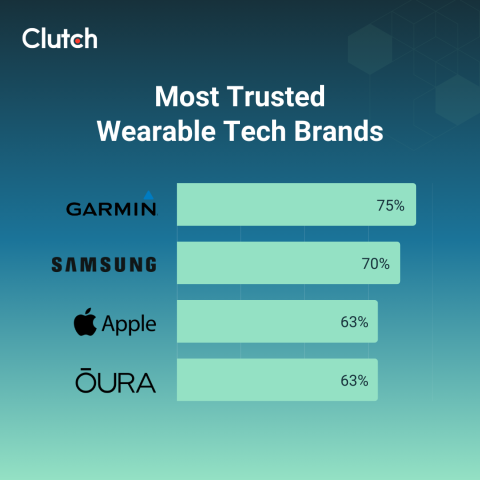

Despite widespread concerns about data privacy, consumer trust varies significantly across wearable tech brands. Survey data shows that 75% of consumers trust Garmin, 70% trust Samsung, and 63% trust Apple and Oura.

Higher trust reflects a mix of factors, many of which revolve around transparent business practices and ethical communication. Research has shown that when brands are more transparent about how they operate, particularly around data handling and privacy, they are more likely to build stronger consumer trust over time.

In the wearable space, the transparency that earns trust can involve:

At the same time, higher consumer trust doesn't always mean users actually understand how their data is managed. In fact, 67% of the American public say they understand little to nothing about what companies are doing with their personal data, suggesting that trust may often be based on perception rather than detailed knowledge of data practices.

Although wearables remain popular, a few high-profile incidents have undermined consumer trust in the technology. These cases show how location vulnerabilities, legal challenges, and questions about data reliability can create serious privacy and trust problems when adequate safeguards are missing.

In 2017, the fitness-tracking app Strava published aggregated heat maps showing its users' activity patterns. The visualizations unintentionally revealed sensitive locations, including secret military bases. This incident showed how location data collected through wearables can expose far more than individuals may expect.

Legal challenges have raised questions about wearable tech's data reliability and honesty in marketing. A class action lawsuit that concluded in 2020 alleged that Fitbit's sleep-tracking features overstated their accuracy, raising questions about whether consumers were misled about the reliability of the data they relied on for health insights.

As consumers become more aware of data privacy issues, wearable companies have started taking different approaches to managing user data.

Many wearable companies monetize user data directly and indirectly through partnerships with advertisers, analytics providers, and other third parties. While some brands disclose these practices in privacy policies, the level of transparency varies widely.

In many cases, users are not clearly informed about what data is shared, how it's used beyond core product functionality, or how long it's retained. This lack of clarity can make it hard for users to assess the risks they're taking by using a wearable.

Some brands have responded to data privacy issues by including privacy as a product feature. According to Apple, its products and features include innovative privacy techniques and technologies designed to minimize how much user data the company or anyone else can access.

One example is Apple Intelligence, which limits Apple's access to user data by relying on on-device processing. When a request needs Private Cloud Compute, only the data relevant to the user's task is sent to Apple's cloud servers, and cryptography ensures that Apple devices will refuse to talk to a server unless its software has been publicly logged for inspection.

To build trust, wearable companies can follow several best practices:

Together, these practices reflect a shift toward more intentional data governance, offering companies a clearer framework for reducing privacy risks and building consumer trust over time.

Given the many risks of using wearables, consumers are naturally looking to the law to protect themselves.

However, U.S. federal protections for health data are weaker than those in the European Union. The Health Insurance Portability and Accountability Act (HIPAA), a well-known privacy law, focuses on covered entities like hospitals and health plans and doesn't typically apply to wearable companies. As a result, wearable companies generally aren't federally required to protect health data or restrict its sale; where such obligations exist, they come mainly from state data privacy laws.

In response to these gaps, over 20 states have started strengthening data privacy protections for consumers. State laws now increasingly require opt-in consent for certain types of data collection, give users the right to access or delete their personal information, and provide legal pathways for consumers to challenge misuse of their data.

At the federal level, proposals like the Health Information Privacy Reform Act (HIPRA) would extend privacy protections to health apps and wearables by covering all "applicable health information." This term is defined to include any data that identifies an individual and relates to their health status, payment, or care, regardless of whether the data originated with a HIPAA-covered entity. In practice, the bill would apply to any entity that determines the means and purpose of processing applicable health information, as well as to service providers that process that information on its behalf.

As regulatory protections continue to evolve, wearable users can take several steps to better understand and manage how their data is handled:

While these actions can't eliminate privacy risks entirely, they give users greater visibility and control over how their wearable data is collected and shared.

Wearable devices offer a bevy of fascinating and helpful insights into your habits and health. At the same time, they raise important questions about how wearable companies and third-party partners collect, share, and protect (or fail to protect) personal data.

As wearables become more deeply integrated into healthcare, fitness, lifestyle, and daily decision-making, the stakes around data protection continue to rise. Reflecting the growing public awareness of problems such as location vulnerabilities, legal challenges, and data reliability, policymakers have started pushing for stronger protections and more transparent data governance practices.

Even with improved regulations, users still need to remain cautious and intentional about how they use this technology. Wearable brands that demonstrate secure, transparent data handling can earn consumer trust.