Updated February 23, 2026

In today’s hyper-competitive environment, companies are racing to release features and products faster than their competitors. As a result, many are turning to generative AI tools, like GitHub Copilot and ChatGPT, to streamline development and shorten time-to-delivery. These tools can rapidly write complex functions and even large blocks of code, which can reduce the workload for many developers.

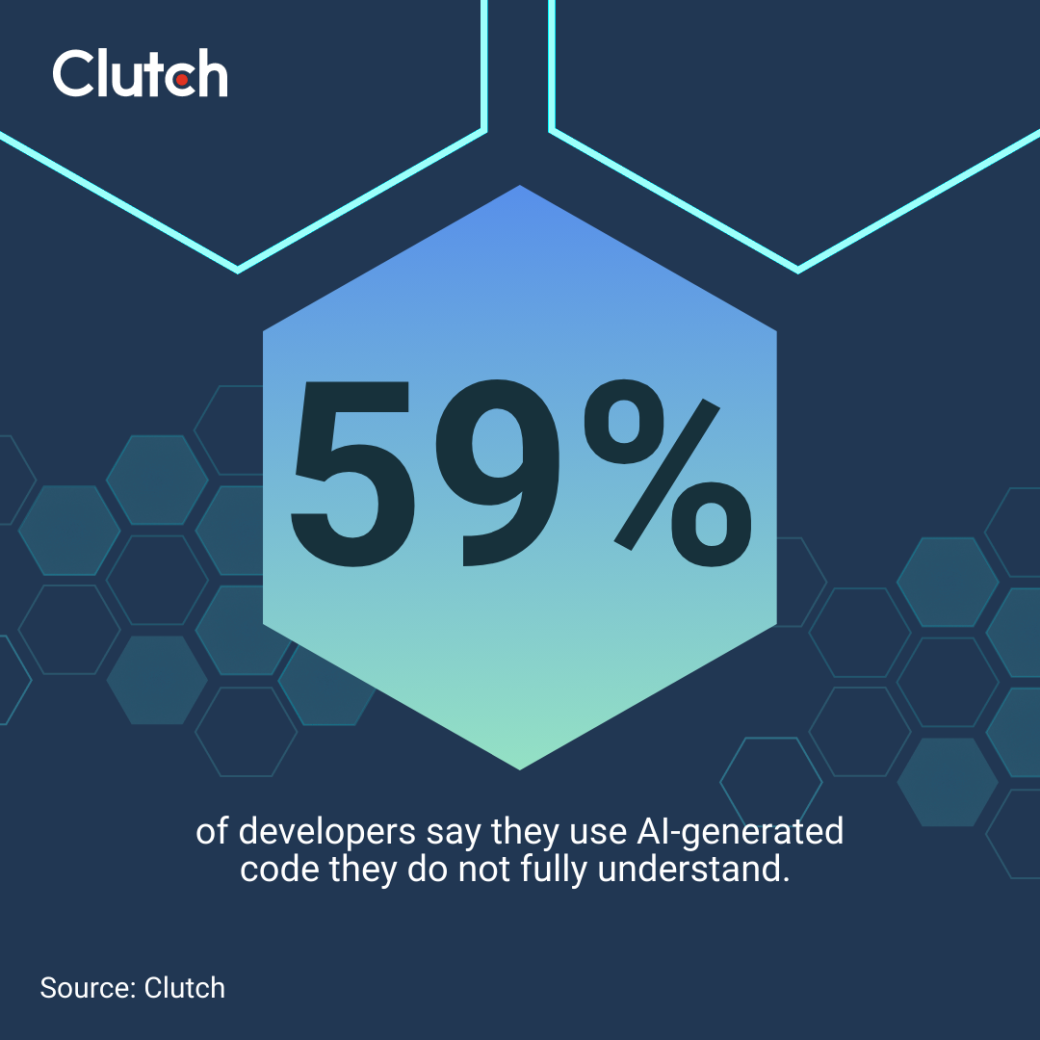

But beneath the push for hyper-efficiency, real concerns are beginning to emerge. A new Clutch survey of 800 software professionals conducted in June 2025 found 59% of developers say they use AI-generated code they do not fully understand.

This data highlights a serious problem: trusting code without comprehension can lead to poor code quality, security vulnerabilities, and compliance concerns. It’s a significant risk management issue that CIOs and other tech executives can’t ignore.

As AI becomes a bigger part of the development process, many developers are accepting AI output without considering the consequences of shipping code that no one has checked.

As delivery windows keep tightening, AI-generated code is an easy stopgap: a developer can now produce complex, functional code in minutes instead of hours. When a deadline is in immediate jeopardy, that time savings is hard to pass up.

Yet trusting code without fully grasping how it works can introduce hidden bugs, security vulnerabilities, and long-term maintenance challenges. Research shows that AI-generated code is far from flawless. In one study, 38.8% of 1,689 programs generated by GitHub Copilot contained security flaws that left them open to exploitation. In another study of 452 real-world code snippets generated by GitHub Copilot, 32.8% of the Python snippets and 24.5% of the JavaScript snippets had security flaws.

These findings point to a clear need for careful manual review of model-generated code, which is difficult when developers don't fully understand what they are reviewing.

In addition to creating security vulnerabilities, a lack of understanding can cause ongoing problems in development and maintenance. Troubleshooting becomes significantly harder when no one on the team can confidently explain the code's behavior. Over time, this can lead to accumulating technical debt, which slows future development and increases the risk of costly errors. It also raises questions of accountability: when no one fully understands the code, it's unclear who is responsible for ensuring its quality and safety.

While AI can accelerate productivity, blindly trusting its output carries serious long-term risks.

AI-generated code can also contain package hallucinations, where an LLM references dependencies that don't actually exist, inventing plausible-sounding package names based on patterns it has seen in real code.

To a developer moving quickly, these suggestions can look legitimate enough to install without a second thought.

This behavior opens the door to a supply-chain attack known as slopsquatting. When attackers notice that AI tools frequently hallucinate certain package names, they can proactively register those names in public repositories and publish malicious code under them.

If a developer then blindly installs the AI-suggested dependency, they may unknowingly introduce malware or vulnerabilities directly into their application. What begins as a harmless hallucination becomes a real security incident.

These limitations create several categories of risk for development leaders as AI-generated code makes its way into production systems.

AI-generated code can be quick and dirty, prioritizing functional output over quality. The result can be subpar spaghetti code that runs but lacks professionalism and elegance. The issue stems from how AI models are trained: they are optimized for short-term results rather than long-term code health. As a result, AI-generated code can become more fragile over time, and harder to change, debug, or extend.

One common problem is code bloat, where AI solutions are more verbose than necessary. Another is anti-patterns, where the AI applies a learned solution in the wrong context: it technically works but runs against best practices. Inconsistent logic also poses a challenge. AI might generate functions that perform similar tasks in different ways within the same codebase, complicating the application and increasing technical debt.
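To make the inconsistent-logic problem concrete, here is a minimal Python sketch. The two email helpers are hypothetical, written the way separate AI sessions might produce them, and the example assumes the application treats email addresses case-insensitively.

```python
# Two hypothetical helpers an assistant might generate in different sessions:
# both "normalize" an email address, but they disagree on whitespace.
def normalize_email_v1(email: str) -> str:
    return email.lower()


def normalize_email_v2(email: str) -> str:
    return email.strip().lower()


# The inconsistency surfaces as a logic bug: the same input compares unequal.
raw = "  Alice@Example.com "
print(normalize_email_v1(raw) == normalize_email_v2(raw))  # False


# Consolidated: one shared helper that every call site uses.
def normalize_email(email: str) -> str:
    """Trim surrounding whitespace and lowercase the address."""
    return email.strip().lower()
```

Duplicated helpers like these are far easier to consolidate during review than to track down after they cause a mismatch in production.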

Security is where misplaced trust in AI can do the most harm. AI systems trained on public code repositories have been exposed to countless examples of security vulnerabilities. They may not reproduce a given vulnerability exactly, but they can reproduce its underlying pattern in new contexts.

SQL injection is one class of vulnerability that appears in database queries written with AI assistance. It is common in code that concatenates user input into SQL strings instead of passing it as parameters.
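The difference between the two approaches is easiest to see side by side. This is a minimal sketch using Python's built-in `sqlite3` module and an in-memory database; the `users` table and both function names are hypothetical.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)",
                     [("alice", "admin"), ("bob", "user")])
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # VULNERABLE: user input is concatenated straight into the SQL text.
    query = "SELECT name, role FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # SAFE: the ? placeholder makes the driver treat `name` as data, not SQL.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()


conn = make_db()
payload = "nobody' OR '1'='1"
print(find_user_unsafe(conn, payload))  # every row in the table
print(find_user_safe(conn, payload))    # []
```

The crafted input turns the unsafe query's `WHERE` clause into a condition that is always true, while the parameterized version simply looks for a user literally named `nobody' OR '1'='1`.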

Hardcoded secrets are another vulnerability that AI commonly generates when it writes authentication code with API keys or passwords embedded inline. These credentials belong in an external configuration file or environment variables, not in the codebase.
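A minimal Python sketch of the alternative, assuming the key is supplied through an environment variable. The variable name `PAYMENTS_API_KEY` and the `MissingSecretError` class are hypothetical; loading from a configuration file kept out of source control follows the same pattern.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret has not been configured."""


# Anti-pattern to reject in review:  API_KEY = "hardcoded-secret-value"

def get_api_key(var_name: str = "PAYMENTS_API_KEY") -> str:
    """Read an API key from the environment at runtime, not from source."""
    value = os.environ.get(var_name)
    if not value:
        # Fail fast instead of falling back to a baked-in default key.
        raise MissingSecretError(f"{var_name} is not set")
    return value
```

Failing loudly when the variable is missing matters: AI-generated code sometimes "helpfully" falls back to a placeholder key, which quietly reintroduces the hardcoded secret.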

Flawed authentication logic can be one of the most dangerous vulnerabilities in enterprise software. AI-generated authentication flows can look convincing and work in most cases, yet contain hidden bypasses or session-management errors that a motivated, capable attacker can exploit. Automated scanners sometimes miss these flaws because they are business-logic errors rather than pattern-based vulnerabilities.
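The sketch below illustrates the kind of logic flaw scanners tend to miss, using a hypothetical password-reset flow in Python. The in-memory `TOKENS` store, the `ResetToken` record, and both validator functions are illustrative assumptions, not a recommended production design. The flawed check passes a happy-path test, yet accepts another user's token, expired tokens, and reused tokens.

```python
import secrets
import time
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class ResetToken:
    user_id: int
    expires_at: float  # Unix timestamp after which the token is invalid
    used: bool = False


# Hypothetical in-memory store mapping token strings to their records.
TOKENS: Dict[str, ResetToken] = {}


def issue_token(user_id: int, ttl_seconds: int = 900) -> str:
    token = secrets.token_urlsafe(32)
    TOKENS[token] = ResetToken(user_id, time.time() + ttl_seconds)
    return token


def validate_flawed(user_id: int, token: str) -> bool:
    # Passes a happy-path test, but never checks ownership, expiry, or reuse.
    return token in TOKENS


def validate(user_id: int, token: str, now: Optional[float] = None) -> bool:
    now = time.time() if now is None else now
    record = TOKENS.get(token)
    if record is None or record.used or now >= record.expires_at:
        return False
    if record.user_id != user_id:
        return False  # token was issued to a different account
    record.used = True  # single use: consume the token on success
    return True
```

Nothing in `validate_flawed` matches a known-bad pattern, which is exactly why a reviewer who understands the intended business rules has to catch it.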

Compliance and liability risks arise when AI-generated code causes system failures, data breaches, regulatory violations, or mismanagement. There is legal uncertainty over who is responsible, and copyright and licensing issues pose immediate legal risks. AI models trained on open-source code may inadvertently replicate copyrighted functions or violate specific license terms, potentially triggering intellectual property lawsuits. The legal status of AI-generated derivatives of copyrighted code remains a gray area.

Compliance with regulatory requirements is another critical risk factor. Industries such as finance, legal, health care, and government are heavily regulated. These regulated industries typically have stringent requirements for audit trails and accountability of all code deployed in production systems. AI-generated code introduces complexities in meeting these compliance obligations, as organizations may struggle to prove the reasoning and review process behind the AI-generated code.

AI integration in development also presents concerns beyond quality and security. Developer skill erosion is a potential issue. Heavy reliance on AI tools could weaken core programming abilities. For junior developers with a limited experience base, this risk is particularly acute. Developers with a solid programming foundation can better judge AI-generated code, recognizing issues and making informed decisions.

By contrast, junior developers who depend heavily on AI risk adopting its mistakes and poor practices as the norm. This creates a feedback loop: lacking the foundational skills to evaluate AI output, they tend to trust it over their own intuition. Junior developers who don't understand concepts like algorithmic complexity, memory management, scalability, and security may struggle to recognize well-written code.

As with GPS navigation, AI tools are helpful, but over-reliance can erode core abilities. A developer who outsources all design work to AI may lose the ability to conceptualize complex systems or innovate effectively.

Despite these risks, AI coding tools can benefit your workflow when used with the right safeguards and processes in place.

AI-generated code should face the same reviews as handwritten code: peer review, integration testing, manual QA, security scanning, and so on. Teams should also set specific criteria for catching the mistakes AI systems commonly make, such as security anti-patterns, missing error handling, unhandled edge cases, and performance problems. Static analysis tools and linters can catch many of these, but higher-level decisions, such as architecture and complex security questions, are best left to humans. Contract testing against real APIs, not just mocks, helps catch phantom methods or functionality the AI may have hallucinated. This keeps tests grounded in reality rather than in a model's assumptions, reducing the risk of subtle errors or vulnerabilities.
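Contract tests against the real API remain the stronger check, but Python's standard library offers a cheap complement for the mock side of the problem. `unittest.mock.create_autospec` builds a mock that mirrors a real class's interface, so a call to a method the AI hallucinated fails in the test instead of silently succeeding. `PaymentClient`, `checkout`, and `charge_card` here are hypothetical.

```python
from unittest import mock


class PaymentClient:
    """Stand-in for a real client class; in practice, import the real one."""

    def charge(self, amount_cents: int, currency: str) -> str:
        raise NotImplementedError("would call the real payment service")


def checkout(client, amount_cents: int) -> str:
    # Suppose an assistant generated this call: `charge_card` does not exist.
    return client.charge_card(amount_cents, "USD")


# A plain Mock invents any attribute on demand, so the phantom method "works".
loose = mock.Mock()
loose.charge_card.return_value = "ok"
print(checkout(loose, 500))  # ok

# An autospecced mock only exposes attributes the real class defines.
strict = mock.create_autospec(PaymentClient, instance=True)
try:
    checkout(strict, 500)
except AttributeError as exc:
    print("caught phantom method:", exc)
```

Autospecced methods also check call signatures, so `strict.charge(500)` without a currency would raise a `TypeError`.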

Pair programming can also be valuable with AI-generated code. Two developers reviewing an AI model's output are more likely to spot problems, and they can share insights into how the AI behaves, including what it tends to get wrong. Pairing also helps junior developers learn to think critically about the suggestions they receive from AI.

Working in pairs or small teams encourages shared understanding of AI-generated code and increases the likelihood of catching hallucinations or logic errors early. Collaborative development also helps validate mock implementations against actual system behavior, reducing the chance that mismatched mocks hide real bugs. By combining human insight with AI assistance, teams maintain quality and security while still leveraging the speed benefits of AI tools.

Team sessions for developers using AI tools provide a forum to share successes and lessons learned from mistakes. Discussing both what works and how problem cases were resolved helps build organizational knowledge about using AI effectively.

There needs to be a cultural shift toward understanding and properly validating AI-generated code, backed by incentives and leadership that value quality over quantity. Developers also need training in how to evaluate AI tools, including the security and quality of the code they produce and its architectural impact.

A “zero trust” framework treats all AI-generated outputs, including code suggestions, dependency recommendations, and API calls, as untrusted until explicitly verified, much as traditional zero trust security assumes that no actor or system is inherently safe.

This requires developers to validate every AI suggestion before it enters a codebase. This includes checking that dependencies exist in official repositories, confirming that API calls match real interfaces, and scanning for potential vulnerabilities or malicious packages. By verifying each output, teams can safely leverage AI assistance without blindly trusting it.
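Checking that a dependency exists can be automated. This Python sketch queries PyPI's JSON endpoint (`https://pypi.org/pypi/<name>/json`), which returns 404 for unknown projects; it assumes Python dependencies and network access from wherever the check runs. The `status_fn` parameter is a hypothetical hook so the check can be tested offline. Existence alone proves little against slopsquatting, since an attacker's package exists by definition, so treat a missing name as a red flag rather than a passing name as safe.

```python
import urllib.error
import urllib.request
from typing import Callable, Iterable, List

PYPI_JSON_URL = "https://pypi.org/pypi/{name}/json"


def http_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code of a GET request to `url`."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code


def missing_packages(
    names: Iterable[str], status_fn: Callable[[str], int] = http_status
) -> List[str]:
    """Return the names PyPI does not recognize (HTTP 404)."""
    missing = []
    for name in names:
        status = status_fn(PYPI_JSON_URL.format(name=name))
        if status == 404:
            missing.append(name)
        elif status != 200:
            # Fail closed: an outage should not silently pass the check.
            raise RuntimeError(f"unexpected HTTP {status} for {name!r}")
    return missing
```

Network failures other than HTTP errors (for example, a DNS failure) propagate as exceptions, so the check also fails closed when PyPI is unreachable.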

Implementing a zero trust framework also means integrating verification into standard workflows. Continuous integration pipelines can automatically test AI-generated code against real systems, enforce dependency whitelists, and flag any phantom methods or incompatible mocks.
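As one way to enforce a dependency whitelist in CI, here is a minimal Python sketch that reads a `requirements.txt` file and flags any project not on an approved list. The `ALLOWLIST` contents are hypothetical, names are compared after PEP 503 normalization, and the line parser is deliberately simplified: it handles names, extras, version pins, comments, and pip options, but not every requirement format.

```python
import re
from typing import Iterable, List, Optional

# Hypothetical team-approved dependencies, stored as PEP 503-normalized names.
ALLOWLIST = {"requests", "sqlalchemy", "pydantic"}


def normalize(name: str) -> str:
    # PEP 503: lowercase and collapse runs of "-", "_", "." into a single "-".
    return re.sub(r"[-_.]+", "-", name).lower()


def requirement_name(line: str) -> Optional[str]:
    """Extract the project name from one requirements.txt line (simplified)."""
    line = line.split("#", 1)[0].strip()
    if not line or line.startswith("-"):
        return None  # blank line, comment, or pip option such as -r or -e
    match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
    return normalize(match.group(0)) if match else None


def unapproved(lines: Iterable[str]) -> List[str]:
    """Return the sorted, de-duplicated names that are not on the allowlist."""
    names = (requirement_name(line) for line in lines)
    return sorted({n for n in names if n and n not in ALLOWLIST})


def main(argv: List[str]) -> int:
    path = argv[0] if argv else "requirements.txt"
    with open(path) as f:
        bad = unapproved(f)
    if bad:
        print("Dependencies not on the allowlist:", ", ".join(bad))
        return 1
    return 0
```

A CI step could import `sys` and call `sys.exit(main(sys.argv[1:]))` under an `if __name__ == "__main__":` guard, so that any unapproved dependency fails the build until someone deliberately adds it to the list.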

Developers remain the final authority, reviewing and approving AI outputs before deployment. In practice, this approach balances the speed and productivity benefits of AI with the discipline and oversight needed to maintain secure, reliable software.
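Recording that approval can also be automated, which helps with the audit trails regulated industries require. This Python sketch assumes a hypothetical team convention: commits that include AI-generated code carry an `AI-Assisted: yes` trailer, and a CI check rejects any such commit that lacks a `Reviewed-by:` trailer naming a human reviewer. The parser is simplified; `git interpret-trailers --parse` is a more robust way to extract trailers in a real pipeline.

```python
import re
from typing import Dict, List

# One "Key: value" trailer line, e.g. "Reviewed-by: Dana <dana@example.com>".
TRAILER = re.compile(r"^([A-Za-z0-9-]+):\s*(.+)$")


def trailers(message: str) -> Dict[str, List[str]]:
    """Parse trailer lines from the final paragraph of a commit message."""
    paragraphs = [p for p in message.strip().split("\n\n") if p.strip()]
    result: Dict[str, List[str]] = {}
    if len(paragraphs) < 2:
        return result  # a subject line alone carries no trailers
    for line in paragraphs[-1].splitlines():
        m = TRAILER.match(line.strip())
        if m:
            result.setdefault(m.group(1).lower(), []).append(m.group(2).strip())
    return result


def needs_review(message: str) -> bool:
    """True if the commit is marked AI-assisted but names no human reviewer."""
    t = trailers(message)
    ai = any(v.lower() in ("yes", "true") for v in t.get("ai-assisted", []))
    return ai and not t.get("reviewed-by")
```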

AI-generated code is only as good as the guidance and oversight developers provide. Left unchecked, it can quickly become insecure and lead to easily avoidable failures. Developers must stay engaged in the process by reviewing, testing, refining, and optimizing AI-generated code, and they must stay curious and keep learning about these tools and their limitations.

Incorporating AI into software development responsibly means balancing its benefits against the need to build safe, reliable software. AI can and should be used as a tool to assist development, but it is not an infallible oracle. By staying engaged and vigilant, developers can make AI a trusted and reliable partner in shaping the future of software development.