Updated August 7, 2025

The rapid surge of AI in the workplace has created an unanticipated and pressing challenge for organizational leaders: while AI tools are being adopted at an accelerating pace, most companies still lack clear, formal policies and guidelines to govern their use.

Employees are increasingly turning to generative AI, machine learning algorithms, and automation platforms to streamline tasks, boost productivity, and solve complex problems. Yet in many organizations, there is little to no guidance about when AI tools should be used, how they should be used, or which tools are approved and secure.

According to a Clutch survey of 250 full-time employees, 78% of non-AI users don't know their employer’s official position on the use of AI in the workplace.

This policy vacuum introduces significant risks. Without defined guardrails, teams may operate in silos, making independent decisions about AI adoption that conflict with broader business strategies or ethical considerations. The consequences can be far-reaching.

To harness AI’s full potential while mitigating its risks, organizations must urgently prioritize developing clear, adaptive AI governance frameworks.

These policies should align with business goals, ethical standards, and regulatory requirements, and they must be regularly updated to reflect the fast-changing AI landscape. Doing so will not only ensure responsible usage, but also foster a culture of innovation, trust, and competitive readiness.

Workers who don't know their company's AI policy are less likely to bring AI into their daily workflows. By contrast, 84% of workers at companies that encourage the use of AI report trusting the technology's outputs. This suggests that leadership sets the tone for how teams view AI in the workplace and for how it should be implemented.

But trust in AI isn't always enough to drive adoption.

The fact that many workers at AI-positive companies trust the technology does not necessarily mean everyone is using it. Employees may not use AI if they have not received training, don't know how it fits into their job, or are resistant to change.

Building trust is a good start, but teams need to provide additional support, like training and education, to have a larger impact on the company as a whole.

When team leaders actively discuss AI and its potential applications, employees feel more comfortable using AI at work. This highlights the importance of company-wide policies when adopting new technologies. Clear expectations and structure around AI use help employees feel more comfortable experimenting with the technology and understanding its boundaries.

On the other hand, when leaders are silent, it creates uncertainty. While some employees become overly cautious, others may place too much faith in automation. For this reason, consistent and transparent communication from the top matters.

Without clear communication, adopting AI technology can cause fragmentation. The sales team may adopt AI-powered CRM tools while the marketing team shows no interest in AI for content optimization. The product development team may use AI to speed up prototyping while the quality assurance team remains wary of automated testing.

Teams can even be internally divided, with some members embracing AI tools to increase efficiency while others hold back, concerned about being made redundant or not trusting a machine's accuracy over a human's.

This sort of misalignment can lead to slower projects and a weaker competitive position.

Most companies aren’t keeping up with the training that's needed to use AI effectively. Only 33% of employees have received formal training, and nearly half (45%) of employees are not aware of their company’s AI policies. This hinders productivity and weakens an organization's ability to compete.

Without effective training, companies risk:

A strong AI training program that develops both technical knowledge and critical thinking skills can fill in these gaps. “[T]raining is essential. These tools are powerful, but without thoughtful instruction, they can feel either overwhelming or underwhelming,” says Penny Moore, Founder and Partner of Tenderling Design. Through employee training, companies can maximize the impact of AI and prevent the hiccups associated with an AI-driven digital transformation.

Leading companies provide hands-on workshops, bias and ethics training, mentorship programs, and continuous updates to keep teams knowledgeable and effective. Ongoing and company-wide training will also prevent knowledge silos and help smooth the path for collaboration across departments.

“The more people understand how to prompt well, review critically, and integrate outputs into their creative or strategic flow, the more AI becomes a true asset, not just a novelty,” says Moore. AI is evolving continuously, so training cannot be a one-time event. Employees need regular, updated education to stay informed about AI developments.

In the absence of an AI policy, people will still use AI tools. This can lead to “shadow AI,” the unregulated and hidden use of AI, which brings several risks, including:

No policy means no guidance for how employees can use AI ethically or legally. This could involve privacy regulations such as GDPR (General Data Protection Regulation) and CCPA (California Consumer Privacy Act). Employees may accidentally deploy AI in ways that expose personal data or break laws.

Legal implications are not the only concern when AI use lacks oversight. In a cautionary tale from 2023, Samsung employees used ChatGPT without permission and accidentally leaked proprietary code. The full extent of the leak was not disclosed, but it tarnished the company's reputation. In response, Samsung leaders banned employees from using any generative AI tools.

Without official sanction, AI tools will proliferate and become shadow AI. One team will use an unapproved content generator, and another department will sign up for a predictive analytics vendor. The more inconsistent the deployment and oversight, the bigger the security risks and data silos. Efficiency and productivity will also decline as ad-hoc AI leads to fractured user experiences. Departments that are allowed to use AI will rapidly advance, while those prohibited will fall behind.

AI frustrations also build among employees. If people don't know which tools are approved, they will either fear experimentation or try to find a loophole around restrictions. This inhibits performance and innovation. Ownership and liability issues may arise if no one is responsible for AI-generated content. Creatives and developers, for example, could face legal consequences.

AI governance is the opposite of this scenario: it balances user support with necessary oversight. Clear policies strengthen risk mitigation and let teams adopt AI faster, which translates to better performance and job satisfaction. A clear AI policy isn't just nice to have anymore; it's a necessity.

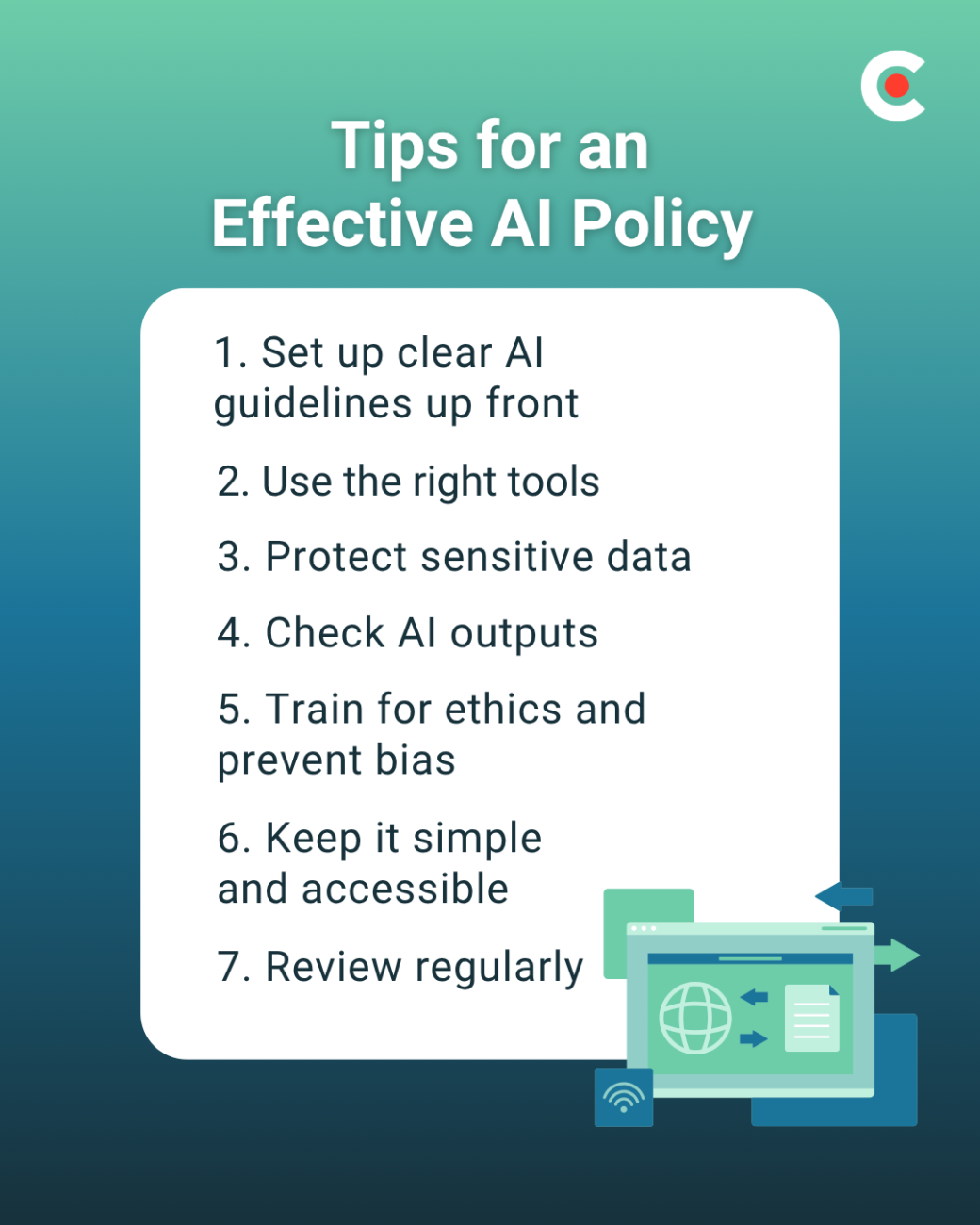

Company AI guidelines should establish smart, adaptable policies that guide teams in using AI safely and effectively. The best AI governance frameworks are also balanced. They give teams enough flexibility to experiment without exposing the business to legal, security, operational, and reputational risks.

Define where staff can use AI throughout the organization. Consider whether the firm should adopt it on an enterprise-wide level or in specific functions only. Determine whether departments have leeway to use the tools they want or if they need an IT sign-off.

Microsoft’s Responsible AI practices, for example, permit AI only in certain use cases, such as customer service and content generation, and require human review for legal and financial documents.

Put together a list of approved AI tools and note what each is best for. Vet them through IT security, and provide a process for teams to request new tools on an as-needed basis. This preempts unauthorized shadow AI use and team-by-team ad hoc processes.
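As a rough illustration, an approved-tools list can be kept as a small, machine-readable registry that employees or internal tooling can query. The sketch below is only one possible shape: the tool names, the `ApprovedTool` fields, and the `check_tool` helper are hypothetical and not drawn from any real product or policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ApprovedTool:
    name: str
    best_for: str            # short note on what the tool is best suited for
    security_reviewed: bool  # True once IT security has vetted the tool


# Hypothetical entries for illustration only; a real list would come from IT.
APPROVED_TOOLS = {
    tool.name: tool
    for tool in (
        ApprovedTool("example-chat-assistant", "drafting and summarizing internal notes", True),
        ApprovedTool("example-code-helper", "code suggestions for non-proprietary projects", True),
        ApprovedTool("example-image-maker", "concept mockups", False),
    )
}


def check_tool(tool_name: str) -> str:
    """Return a short, human-readable status for a requested tool."""
    tool = APPROVED_TOOLS.get(tool_name.strip().lower())
    if tool is None:
        return f"'{tool_name}' is not on the list. Submit a new-tool request to IT."
    if not tool.security_reviewed:
        return f"'{tool.name}' is pending security review. Do not use it yet."
    return f"'{tool.name}' is approved. Best for: {tool.best_for}."


if __name__ == "__main__":
    for name in ("example-chat-assistant", "example-image-maker", "unknown-tool"):
        print(check_tool(name))
```

Keeping the "best for" note next to each entry means the same list answers both "may I use this?" and "what should I use it for?", and the fallback message points people to the request process instead of leaving them to guess.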

State specifically which types of data are acceptable for AI use, and why. For example, customer data, financial information, legal documents, and proprietary research are typically off-limits, or permitted only with tools that are verified to meet security and compliance standards. Give concrete examples so employees know what this looks like in the context of their work.
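A data-handling rule like this can also be written down as a simple check. The following is a minimal sketch under stated assumptions: the restricted categories mirror the examples in the paragraph above, while the `PUBLIC` and `INTERNAL` categories, the `DataCategory` enum, and the `may_use_with_ai` function are hypothetical names added for illustration.

```python
from enum import Enum


class DataCategory(Enum):
    PUBLIC = "public"                              # assumed category, not from the policy text
    INTERNAL = "internal"                          # assumed category, not from the policy text
    CUSTOMER = "customer data"
    FINANCIAL = "financial information"
    LEGAL = "legal documents"
    PROPRIETARY_RESEARCH = "proprietary research"


# Categories that are off-limits unless the tool is verified to meet
# security and compliance standards.
RESTRICTED = frozenset({
    DataCategory.CUSTOMER,
    DataCategory.FINANCIAL,
    DataCategory.LEGAL,
    DataCategory.PROPRIETARY_RESEARCH,
})


def may_use_with_ai(category: DataCategory, tool_is_verified: bool) -> bool:
    """Allow restricted data only with a verified tool; allow other data freely."""
    if category in RESTRICTED:
        return tool_is_verified
    return True


if __name__ == "__main__":
    print(may_use_with_ai(DataCategory.CUSTOMER, tool_is_verified=False))
    print(may_use_with_ai(DataCategory.CUSTOMER, tool_is_verified=True))
    print(may_use_with_ai(DataCategory.PUBLIC, tool_is_verified=False))
```

A real policy would likely need finer distinctions, but even a table this small gives employees a concrete answer for the most common cases.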

AI tools aren’t always accurate. Establish policies for when human review of AI outputs is necessary.

This is especially true for customer-facing content, financial data, internal documentation, or anything subject to regulatory compliance. Include best practices for fact-checking and spotting bias.
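One way to make a human-review rule unambiguous is to tag each piece of AI-assisted work and flag it whenever any tag falls in a review-required set. The sketch below assumes a hypothetical tagging scheme; the tag strings and the `needs_human_review` function are illustrative and not taken from any specific policy.

```python
from typing import Iterable

# Hypothetical tags mirroring the content types named above.
REVIEW_REQUIRED = frozenset({
    "customer_facing",
    "financial_data",
    "internal_documentation",
    "regulatory",
})


def needs_human_review(content_tags: Iterable[str]) -> bool:
    """Return True if any tag on the content falls in a review-required type."""
    return not REVIEW_REQUIRED.isdisjoint(content_tags)


if __name__ == "__main__":
    print(needs_human_review(["customer_facing", "blog_draft"]))
    print(needs_human_review(["brainstorm_notes"]))
```

A check like this only decides whether a review is needed. The fact-checking and bias-spotting practices themselves still have to be spelled out for the human reviewer.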

AI can be biased in its programming or the data it uses. Train employees to recognize and mitigate biases in AI outputs, particularly for sensitive decisions like hiring or product design. Require developers to test AI models with diverse and representative data. Run regular bias audits and testing.

AI policies and training should be included in the onboarding process from day one, along with other IT and security policies. Tailor the training based on the individual and their role.

“Our approach is to make AI accessible to everyone, but with guidance tailored to how they actually work,” explains Moore. “Designers, strategists, writers—we all benefit differently. So instead of a one-size-fits-all training, we focus on real examples relevant to their roles, paired with open conversations about ethics, responsibility, and creativity.”

This tailored approach helps employees look for ways to implement AI in their specific workflows. It also helps individuals prepare for a changing workforce that relies on AI more and more frequently. “We want people to feel confident and empowered, not just technically capable,” says Moore.

Keep it fresh with updated training. Rotate between policy changes, guest speakers, workshops, and hands-on demos.

AI policies shouldn’t be long, legalistic, and incomprehensible. Write in clear, specific language that incorporates real-life examples and an FAQ section. Provide a way for employees to submit questions or feedback on the policies, anonymously if they prefer.

AI technology and use cases are evolving rapidly — your policies should too.

Review them every few months, solicit feedback from teams across the company, and adjust based on new tools, regulations, or business needs.

When people understand what is and isn't allowed, they feel more comfortable using AI. Used within clear limits, AI can sharpen capabilities that improve work outcomes and advance the business, all while staying within a company’s internal policies and customer expectations. These advantages will grow over time as AI becomes increasingly woven into everyday work.

Organizations can’t afford to wait to establish these policies, because without them employees either won’t use tools that could be helpful or will use them in risky ways. The question is no longer whether an organization needs AI governance. It’s how quickly it can define policies for safe and effective use across the enterprise.

Reactive management of AI is no longer an option for companies that want to remain on top of this growing technology. Forward-thinking governance will be one of the key success factors that differentiates business leaders from the competition. Governing AI proactively means establishing policies and processes for the safe and effective use of the technology across the entire organization. It requires an in-depth understanding of how and where employees are deploying AI in the company and staying current on industry standards and regulations.

Artificial intelligence is changing the way businesses operate, and its influence is only growing. It would be shortsighted to ignore its potential, but it would be equally unwise to adopt AI blindly without considering the associated risks and challenges.

If your company doesn’t have an AI policy, your team is already feeling the impact — whether you realize it or not.