Updated February 19, 2026

Most managers treat AI integration like traditional software development – with sprints, story points, and predictable deadlines. This fails because ML and AI are more R&D than construction. Simple analytics can be estimated confidently, but complex ML and AI require experimentation where outcomes are uncertain.

This mismatch in management approach is why 87% of data science projects never reach production. The fix: Match your methodology to the work. Use timeboxed exploration, treat failed experiments as progress, and build decision gates instead of demanding guaranteed outcomes. Success requires making peace with uncertainty.

Most engineering managers treat machine learning projects like feature development. They assign story points, commit to sprint deadlines, and expect predictable velocity. Then they wonder why their data science teams keep missing estimates.

The problem isn't the team. The problem is treating research like construction.

In software engineering, building a login button is straightforward. You know exactly how to do it. The task takes X hours. You can estimate it and commit to a deadline with confidence.

In data science, "improving accuracy by 10%" follows different rules entirely.

Consider a recommendation system. Your team spends weeks collecting and cleaning input data, integrating new user-behavior signals, retraining on fresh data, and tuning the ranking logic. You run the experiment, but conversion drops.

What happened? Perhaps the new signals introduced noise. Perhaps user preferences shifted during the experiment window, or the A/B test infrastructure had a bug. Or there wasn't enough signal in the first place.

Discovering what doesn't improve your model is, in fact, valuable information. It’s the scientific method at work. But it doesn't fit neatly into a sprint retrospective where velocity is the metric that matters.

AI Project Management is emerging as a distinct discipline, much like AI Quality Assurance carved its own space in recent years. The Project Management Institute (PMI) now recognizes that data science and AI initiatives require fundamentally different approaches than traditional software development.

For organizations investing in AI, understanding these differences is no longer optional. Managing risk and setting realistic expectations depend on it.

The challenge, however, runs deeper than timeline adjustments. Software engineering has decades of established patterns, so its outcomes are largely predictable. AI work often ventures into territory where the path forward only becomes clear through experimentation.

A consistent finding is now appearing across AI projects: the more you can explain why a model works, the more confidently you can predict whether improvements are achievable.

Linear regression for sales forecasting is fully transparent. You can see exactly which factors drive predictions and by how much. Improving it follows a methodical process: add a relevant variable, measure the impact, iterate.
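As a minimal sketch of that "add a variable, measure the impact" loop, the snippet below fits an ordinary least squares model with NumPy. The data is synthetic and noise-free, and the variable names (`ad_spend`, `discount`) and the `fit_linear` helper are invented for illustration, so treat it as a toy rather than a forecasting recipe.

```python
import numpy as np

# Hypothetical monthly data, invented for illustration only.
ad_spend = np.array([10.0, 12.0, 15.0, 11.0, 18.0, 20.0, 16.0, 22.0])
discount = np.array([0.0, 5.0, 0.0, 10.0, 5.0, 0.0, 10.0, 5.0])
# Synthetic target: sales depend on both factors, with no noise added.
sales = 50.0 + 3.0 * ad_spend + 2.0 * discount


def fit_linear(features, target):
    """Ordinary least squares with an intercept.

    Returns (coefficients, r_squared); coefficients[0] is the intercept.
    """
    X = np.column_stack([np.ones(len(target))] + list(features))
    coef, *_ = np.linalg.lstsq(X, target, rcond=None)
    residuals = target - X @ coef
    ss_res = float(residuals @ residuals)
    ss_tot = float(((target - target.mean()) ** 2).sum())
    return coef, 1.0 - ss_res / ss_tot


# Step 1: model with a single factor.
coef_a, r2_a = fit_linear([ad_spend], sales)
# Step 2: add a relevant variable and measure the impact on fit.
coef_b, r2_b = fit_linear([ad_spend, discount], sales)

print("ad_spend only:       R^2 =", round(r2_a, 3))
print("ad_spend + discount: R^2 =", round(r2_b, 3))
print("coefficients (intercept, ad_spend, discount):", np.round(coef_b, 3))
```

Every coefficient can be read directly as "how much sales move per unit of this factor," which is what makes the effect of each change easy to predict and to explain.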

An LLM-based agent coordinating multiple API calls, external data sources, and dynamic decision trees presents a different challenge entirely. Even the engineers who built it struggle to explain why it selected one path over another.

Black box complexity is only part of the equation. Dependencies compound the challenge. Data quality fluctuates. Upstream systems change. External APIs update without warning. User behavior patterns shift over time. The more moving parts you have, the more variables sit outside your control, and the harder it becomes to guarantee any specific outcome.

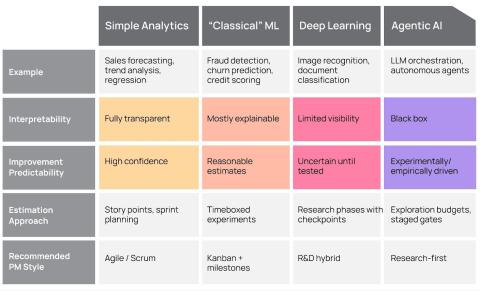

Think of it as a spectrum:

This spectrum matters for business planning. A project on the left side can follow traditional software methodologies with minor adjustments. A project on the right side requires treating development as research with all the uncertainty that entails.

Keep in mind that this spectrum describes technical predictability, not project risk as a whole. A project can sit on the "simple analytics" end and still fail if the business objectives were misunderstood from the start. Frameworks like CRISP-DM place business understanding as the very first phase for exactly this reason — technical predictability tells you how to manage the work, but business alignment tells you whether the work is worth managing at all.

When evaluating AI initiatives, ask what approach the team will take to find out what's achievable, not whether they can guarantee X% improvement.

Simple analytics allows for confident estimates. The math is well-understood, variables are known, and teams can commit to specific outcomes.

But ML systems require a different conversation. Honest teams propose structured experiments: "We'll test these approaches over six weeks and share what we learn." They outline hypotheses, define success metrics, and build in decision points.

Agentic AI puts you in pure research territory unless you're dealing with simple tasks with no edge cases – clear if-else scenarios. Experimentation budgets and staged checkpoints matter more than delivery commitments.

Be skeptical when a vendor promises 10% improvement in two weeks for a complex ML system.

Such promises typically signal corner-cutting, task underestimation, or a fundamental misunderstanding of the problem. Overcommitment at the start leads to disappointment – or a model that technically "meets the number" but fails in production.

According to VentureBeat, 87% of data science projects never make it to production. Unrealistic expectations set during the planning phase are a major contributor to this failure rate. Teams promise outcomes they can't guarantee, then struggle to explain why experiments that should have worked didn't.

Drawing from methodologies like CRISP-DM (Cross-Industry Standard Process for Data Mining) and hybrid R&D approaches, several practices separate successful AI initiatives from those that stall:

Match methodology to solution type. Data infrastructure — ETL pipelines, orchestration, monitoring — thrives under Agile. This is engineering work with known patterns and predictable outcomes. But data preparation for ML – feature engineering, signal discovery, and cleaning decisions that affect model behavior – sits closer to the research side. Forcing model development into two-week sprints with committed deliverables creates pressure to show progress rather than discover truth.

Reframe "negative results" as progress. Eliminating dead ends narrows the solution space. A team that discovers three approaches that don't work has made real progress toward finding one that does. Traditional project management treats this as scope creep or missed milestones. Research-oriented management recognizes it as the process working correctly.

This isn't just a cultural mindset: it's structurally built into proven methodologies. CRISP-DM, the most widely used framework for data mining projects, is explicitly designed for backward movement between phases. A model underperforms, and the team loops back to data understanding or data preparation with new knowledge. The arrows in the process go both directions by design, not as an exception. The methodology treats iteration as the expected path, not a deviation from the plan.

Use timeboxed exploration. Instead of "deliver X by Friday," try "explore approach Y for two weeks, then assess." This gives teams permission to investigate thoroughly without the anxiety of committing to outcomes that may be uncertain. The deliverable is knowledge, not a finished feature.

Design proof-of-concept gates. Build explicit decision points where teams review findings and decide: continue, pivot, or stop. These gates replace sprint retrospectives focused on velocity with research reviews focused on learning. What did we discover? What does that tell us about what to try next? Should we keep going or cut our losses?
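One way to picture such a gate is as a small, explicit rule that the review applies to what the team learned. The sketch below is only an illustration: the `GateReview` fields, the single-metric comparison, and the decision rule are all assumptions made here, and a real gate would weigh cost, risk, and judgment as well.

```python
from dataclasses import dataclass


@dataclass
class GateReview:
    """Findings brought to a proof-of-concept gate (hypothetical fields)."""
    metric_gain: float        # improvement over baseline observed in the timebox
    target_gain: float        # improvement that would justify continuing as planned
    untested_hypotheses: int  # ideas the team still considers worth trying


def gate_decision(review: GateReview) -> str:
    """Return 'continue', 'pivot', or 'stop' for one review."""
    if review.metric_gain >= review.target_gain:
        return "continue"  # the current approach is paying off
    if review.untested_hypotheses > 0:
        return "pivot"     # target missed, but credible alternatives remain
    return "stop"          # target missed and nothing promising is left


print(gate_decision(GateReview(metric_gain=0.04, target_gain=0.03, untested_hypotheses=2)))
print(gate_decision(GateReview(metric_gain=0.01, target_gain=0.03, untested_hypotheses=2)))
print(gate_decision(GateReview(metric_gain=0.01, target_gain=0.03, untested_hypotheses=0)))
```

The point of writing the rule down is that "stop" becomes a legitimate, pre-agreed outcome rather than a failure to deliver.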

The Agile Manifesto's principle of "responding to change over following a plan" applies doubly to AI work. But responding to change requires acknowledging that change will happen, and building project structures that accommodate discovery rather than punishing it.

Rigid sprint commitments for research-heavy AI work create perverse incentives. Teams under pressure to close tickets will choose safe, incremental changes over ambitious experiments that might yield breakthroughs.

A data scientist with three days until the sprint closes won't try the risky preprocessing technique that could improve accuracy by 15% but might not work at all. They'll implement the conservative feature they know will provide a 2% bump.
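A rough back-of-the-envelope calculation shows why this choice can be costly. The sketch below assumes, purely for illustration, that the conservative feature always delivers its 2% and that the risky technique delivers either the full 15% or nothing; the function name is hypothetical.

```python
def break_even_probability(safe_gain: float, risky_gain: float) -> float:
    """Success probability at which the risky experiment's expected gain
    equals the guaranteed gain of the safe option.

    Assumes the risky option yields risky_gain on success and zero on failure.
    """
    return safe_gain / risky_gain


p_star = break_even_probability(safe_gain=2.0, risky_gain=15.0)
print(f"Break-even success probability: {p_star:.1%}")
```

Under these assumptions the break-even point is 2/15, or roughly 13%. If the team believes the risky technique has a better chance than that, it is the stronger bet in expectation, yet the sprint deadline still pushes toward the safe option.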

Over time, this adds up. Projects hit their sprint commitments consistently but never achieve the step-change improvements that justify the AI investment in the first place.

Optimizing for velocity often means sacrificing innovation, unfortunately.

According to KPMG's Global Tech Report 2024, only 31% of businesses have successfully scaled AI to production. Part of this stems from project management approaches that suppress the experimentation needed to move from proof of concept to production impact.

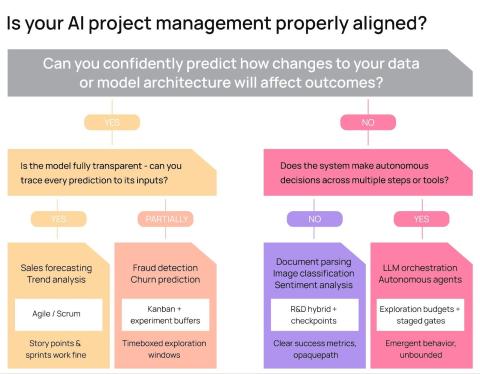

Use the flowchart above as guidance on where your project lies on the spectrum between Agile delivery and research-style experimentation.

Is your AI project management properly aligned?

The most successful AI initiatives share a common thread: leadership that understands where their project sits on the spectrum and adjusts expectations accordingly.

They know what they're building. A classical ML fraud detection system needs different oversight than an agentic AI customer service bot. The fraud detection project can have milestone commitments. The agentic AI project needs research phases with go/no-go decisions.

They choose the right approach. If the work is 80% engineering and 20% research, treat it like an engineering project with research buffers. If it's 80% research, stop pretending sprints will work.

They give teams room to discover what's possible. This means accepting that some experiments will fail, that estimated timelines might be wrong, and that the final solution might look different from what was sketched in the initial proposal.

Machine learning isn't software engineering with extra math. Treating it that way leads to frustrated teams, missed deadlines, and AI initiatives that never make it past the proof-of-concept stage.

The organizations succeeding with AI have made peace with uncertainty. They've built project management practices that acknowledge the difference between building what you know how to build and discovering what's possible to build.

They understand that research-heavy AI work needs research-oriented management. Not because their teams are less disciplined or less capable, but because the work itself operates under different rules.

Know where your project sits on the interpretability-predictability spectrum. Match your management approach to the reality of the work. Give your teams the structure they need to experiment without the pressure to pretend they know answers they haven't discovered yet.

Adjust your project management to fit the nature of AI work, or watch your AI initiatives join the 87% that never make it to production.