Updated February 14, 2026

AI plays a pivotal role in shaping today’s technologies and industries. Yet, as advanced as it is, AI has an elephant in the room that needs to be addressed: bias. Andrew Kalyuzhnyy, CEO of 8allocate, discusses bias in AI and what developers need to prioritize to build trust in AI.

With the rise of generative artificial intelligence (AI), business processes are becoming increasingly automated. In fact, the AI industry is expected to grow at an annual rate of 36.6% from 2023 to 2030, a sign of how integral AI is becoming to our day-to-day lives. Businesses have increasingly invested in developing their own algorithms to automate tasks such as customer service interactions, data entry, financial analysis, and content creation.

Despite this widespread investment, AI isn't flawless, and bias is unfortunately common: a 2022 study by USC researchers found bias in 38.6% of the ‘facts’ used by AI.


AI bias occurs when an algorithm produces results that are systematically skewed because of human biases. As sophisticated as AI is, it’s still created by humans, and their unconscious and conscious biases can be mimicked or even exaggerated in AI models.

Essentially, AI bias can reflect the preferences and prejudices of those developing it, resulting in discriminatory data and distorted algorithms. Over time, AI bias can scale, amplifying the negative effects and further perpetuating human biases.

As businesses increasingly rely on AI, addressing bias is paramount. “When developing AI algorithms, developers must keep three crucial ethical factors in mind: selecting diverse, representative data, being able to detect biases ahead of time, and maintaining data privacy throughout the development process,” said Andrew Kalyuzhnyy, the CEO of the software and AI development company 8allocate. “Ethical considerations like these help ensure that AI systems treat different user groups fairly when making decisions, promoting accountability in AI development.”

In this Clutch article, we’re taking a deeper dive into bias in AI — why it occurs, the different types, and real-world examples.

Avoid creating a biased AI solution by partnering with a top-notch developer. Browse through Clutch’s shortlist for the leading AI development companies.

There are several causes of bias in AI, the most common being algorithmic bias: if the data used to train an AI model is biased, the output generated by the algorithm will reflect that bias.

That's why Amazon had to stop using an AI hiring algorithm that favored applicants who used "male-dominated" action words, such as "captured" and "executed," on their résumés. The tool wasn't programmed to do this. However, because it was likely trained mostly on résumés from male applicants, it learned to associate such words with successful hires. The root cause of AI bias usually lies in the datasets used for training: if they are not diverse and representative enough, they can give the model a skewed worldview.

Cognitive bias, another type of AI bias, can also seep through if the developers themselves are biased. For example, if a team of developers is predominantly white, male, and from a specific socioeconomic background, their unconscious biases can influence the development of the AI algorithm. Even if they are not intentionally trying to discriminate, their experiences and perspectives can shape how the AI model learns and makes decisions.

Exclusion bias may also come into play here. This occurs when developers leave certain data out of training because they believe it is irrelevant. For example, a project management outsourcing partner may leave data from female project managers out of its AI training set because the sample size is small or because it believes gender does not play a role in project management.

The result could be an AI model biased against female project managers, even though the data does not support this bias.
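Exclusion and sampling problems like this can often be caught before training by measuring how each group is represented in the data. The Python sketch below is illustrative only: the `gender` field, the sample records, and the 20% cutoff are hypothetical choices, and a low share is a prompt for review rather than proof of bias.

```python
from collections import Counter

def group_shares(records, attribute):
    """Return each group's share of the records for the given attribute."""
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}

def underrepresented_groups(records, attribute, min_share=0.2):
    """List groups whose share of the data falls below min_share (illustrative cutoff)."""
    shares = group_shares(records, attribute)
    return sorted(group for group, share in shares.items() if share < min_share)

# Hypothetical training set: nine male project managers, one female.
training_data = (
    [{"gender": "male", "projects_delivered": n} for n in range(10, 19)]
    + [{"gender": "female", "projects_delivered": 14}]
)

print(group_shares(training_data, "gender"))             # {'male': 0.9, 'female': 0.1}
print(underrepresented_groups(training_data, "gender"))  # ['female']
```

A flagged group calls for an explicit decision, such as collecting more data or reweighting samples, instead of quietly dropping the group because it seems irrelevant.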

Artificial intelligence has proven that it can revolutionize industries and many aspects of human life. However, with the threat of AI bias looming overhead, it’s important to understand how it affects people. By studying these real-world examples, dev teams and business leaders alike can sharpen their critical perception and take accountability for bias in their own algorithms:

Recent studies have shown that AI models often generate results that are not only inaccurate but also perpetuate harmful stereotypes that can lead to discrimination.

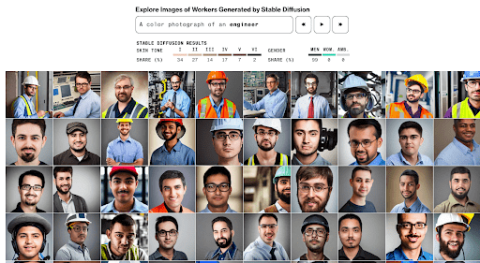

For instance, Bloomberg tested Stable Diffusion’s text-to-image generator to demonstrate how AI models can distort reality based on bias. The analysis generated more than 5,000 AI images depicting people in different professions.

They found that the AI predominantly depicted doctors, judges, CEOs, and lawyers as white men. In contrast, when asked to generate images of criminals, the image generator produced images of darker-skinned men. For the query "inmates," 80% of the images showed Black men, even though Federal Bureau of Prisons data indicates that they make up only around 39% of the US prison population.

These results indicate that AI models are not immune to human biases, and without proper oversight and checks, they can perpetuate prejudice.

AI bias in the medical field can be extremely harmful because it can directly affect the care people receive. A groundbreaking study found that an algorithm widely used by US hospitals and healthcare centers favored white patients over Black patients when assessing the need for extra medical care.

The tool, which is applied to roughly 200 million people in the US, relied on a flawed metric when it was trained. Race was not a variable in the model, but healthcare cost history, which the algorithm used as a stand-in for health needs, is closely correlated with race. Factors such as income and location shape a patient's cost history, so when researchers compared the algorithm's risk scores with patients' actual health histories, they found that the cost-based metric had introduced a racial bias into the model.

The implicit racial bias led to a disparity in the services Black patients received. The study's results helped spur progress, prompting institutions and researchers to work on reducing the bias in the algorithm.
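In the healthcare case above, race was never an input, yet cost history carried racial information. One rough way to screen for such proxies is to measure how strongly each candidate variable correlates with a protected attribute. This Python sketch assumes the protected attribute is encoded as a hypothetical 0/1 group indicator, uses made-up values, and treats |r| ≥ 0.5 as an arbitrary review threshold. Correlation only catches linear, single-variable proxies, so a clean result does not prove a model is fair.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

def flag_proxies(features, group, threshold=0.5):
    """Return (feature, r) pairs whose correlation with the group indicator
    has an absolute value at or above the (illustrative) threshold."""
    flagged = []
    for name, values in features.items():
        r = pearson(values, group)
        if abs(r) >= threshold:
            flagged.append((name, r))
    return flagged

# Hypothetical patients: group is a 0/1 indicator for a protected attribute.
group = [0, 0, 0, 0, 1, 1, 1, 1]
features = {
    "past_cost": [9, 8, 10, 9, 5, 6, 4, 5],  # thousands of dollars, made up
    "age": [40, 55, 62, 35, 41, 56, 60, 36],
}

for name, r in flag_proxies(features, group):
    print(f"{name}: r = {r:.2f}")  # past_cost: r = -0.94
```

The same check can be run on a variable being considered as the prediction target, such as cost standing in for health needs, before it is adopted.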

Amazon, the American multinational technology titan, has long been at the forefront of innovation and AI advancement. Unfortunately, as much as it has helped transform how businesses automate their processes, it couldn’t escape AI bias.

In the late 2010s, news broke that the company’s automated recruiting tool, which it had been developing since 2014, was biased against female applicants. On paper, the model would help the company streamline its hiring process by rating job applicants from one to five stars. However, it was later found that the model preferred male applicants, downgrading résumés that contained gender-identifying keywords.

The issue sparked concerns about how AI bias could deepen gender discrimination in the workplace. Amazon ultimately scrapped the project, stating that the tool was never the only source its recruiters used when vetting applicants.

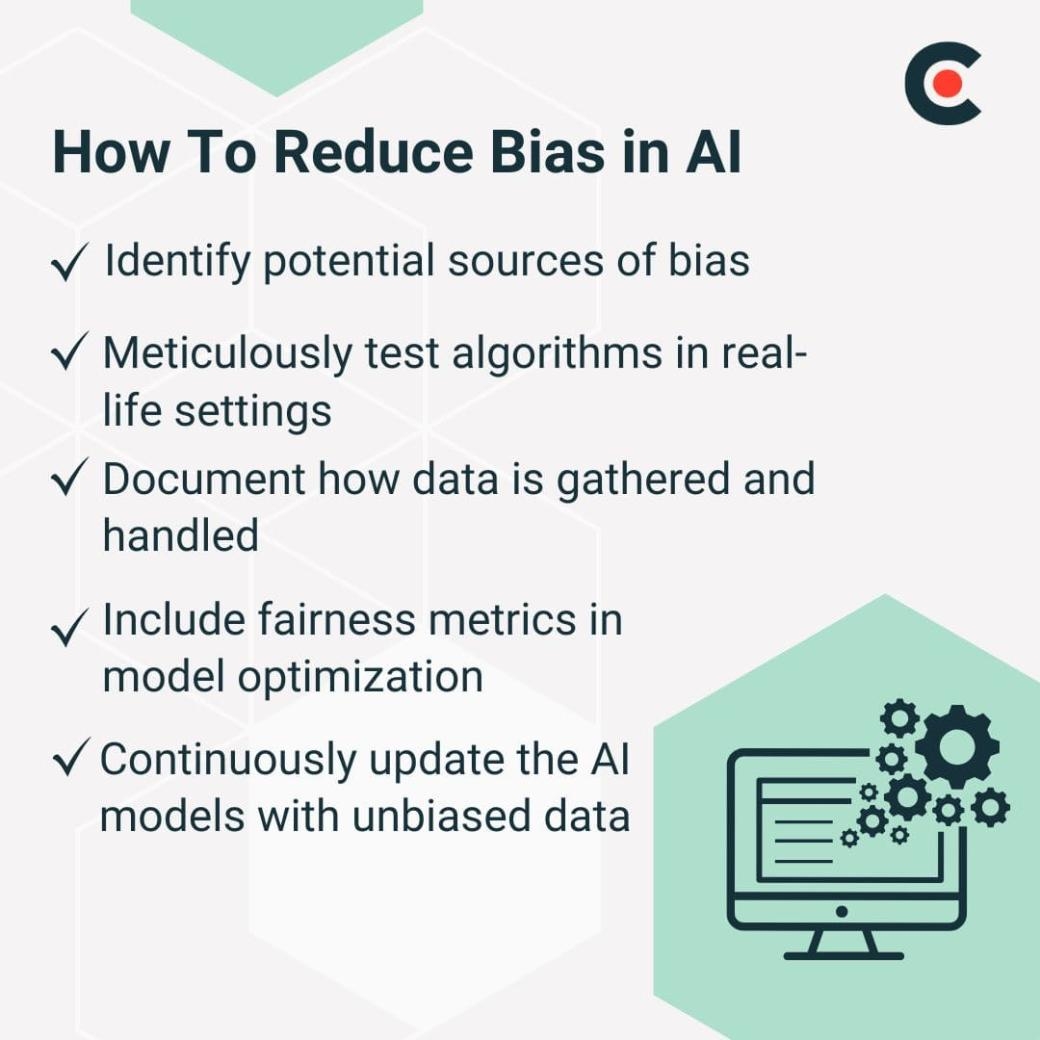

As a society, we share a responsibility to understand how artificial intelligence works and how bias enters it. Making strides toward reducing bias is crucial to ensuring that AI has a positive impact on the world. Understanding and identifying AI bias is the first step toward combating it.

“To mitigate bias, AI developers should integrate data from diverse datasets, choose learning models that are optimized for fairness, and initiate routine model audits to monitor for potential biases,” Kalyuzhnyy advised. It is essential for developers to incorporate diverse datasets that reflect a wide range of demographics and perspectives, as this can help minimize the unconscious biases that often surface in AI models.
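Routine audits can start with something as simple as comparing outcome rates across groups. The Python sketch below computes each group's selection rate and its ratio to the highest group's rate, flagging ratios below 0.8, a threshold borrowed from the "four-fifths" rule of thumb used in US employment guidelines. The group labels and decisions are hypothetical, and selection-rate parity is only one fairness metric; it says nothing, for example, about whether error rates differ between groups.

```python
from collections import defaultdict

def selection_rates(decisions):
    """decisions: iterable of (group, was_selected) pairs -> selection rate per group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}

def disparate_impact_audit(decisions, threshold=0.8):
    """Map each group to (ratio to the highest selection rate, flagged?)."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    report = {}
    for group, rate in rates.items():
        # If no group was selected at all, there is no disparity to measure.
        ratio = rate / best if best > 0 else 1.0
        report[group] = (round(ratio, 2), ratio < threshold)
    return report

# Hypothetical screening outcomes: 50 applicants per group.
decisions = [("A", i < 30) for i in range(50)] + [("B", i < 15) for i in range(50)]

print(disparate_impact_audit(decisions))
# {'A': (1.0, False), 'B': (0.5, True)}
```

Running a check like this on a schedule, for example against each month's production decisions, turns a one-off test into ongoing monitoring.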

Businesses and developers can take further steps to reduce AI bias.

Furthermore, developers should prioritize transparency and openness by documenting the decision-making processes and data handling procedures involved in their AI projects, allowing others to scrutinize and learn from them.

By adhering to these practices, the tech industry can pave the way toward a future where AI acts as a fair and impartial tool, enhancing trust and reliability in its applications. Ultimately, those building their own AI models need to be accountable for the bias in their algorithms and take the necessary precautions to reduce it.

In the end, data is the common denominator of all kinds of AI bias. AI learns from the data it’s trained on, which means it’s up to researchers and developers to be highly critical of the data they gather.

Admittedly, building an unbiased algorithm is a difficult task. Those with the expertise to build AI models have an obligation to oversee their modeling processes through sound AI governance that upholds ethical practices and regulatory compliance.

Work with a proven AI development partner to minimize bias in your AI model. Connect with the top-tier AI developers on Clutch.