Updated October 30, 2025

AI crawlers from OpenAI, Anthropic, Google, and others are crawling the web at scale. These crawlers don’t just “visit” a site. They shape how models answer, what gets summarized, and who gets credited. Some businesses see new discovery and referral gains, whereas others see higher bandwidth bills, intellectual property (IP) theft, and brand risk.

So which department decides whether to allow, restrict, monetize, or outright block AI crawlers? Responsibility isn’t always obvious when the stakes span marketing, legal, engineering, and leadership teams.

Learn more about how businesses are responding to AI crawlers in Clutch's report, "Exposure or Risk? How SMBs See AI Crawlers."

The decision about how to handle AI crawlers rarely lives in just one department. It cuts across revenue, reputation, and risk, which is why hand-offs create gaps. Here’s how each team views the issue, and why leaving the decision to a single team creates problems.

SEO and content teams want a presence in AI overviews and chat results without giving up leverage over their content. The trade-offs are obvious:

This path is intentional for now: Protect premium content, negotiate rights, and decide on what terms content appears in AI systems.

AI crawlers don’t just collect pages. They can also extract embedded data, including content that may fall under privacy regulations such as GDPR (EU), CCPA (California), LGPD (Brazil), and PDPA (Singapore). That creates serious questions around consent, lawful use, and liability.

For instance, if a site includes user-generated content or personal information, crawlers could inadvertently capture and process data protected under privacy regulations. This could lead to user complaints or regulatory exposure, which is why the legal and compliance team needs to protect user and company data, reduce unnecessary collection, and keep records of what was accessed and when.

Terms-of-service violations by AI crawlers add another issue. Many sites restrict training, redistribution, or automated scraping. If a crawler ignores those rules, you have grounds to challenge access. Common reasons to push back include data privacy risk, contract violations, and misuse of brand or IP.

To tackle these, legal and compliance teams can ask a few core questions:

Legal will thus want both “policy signals” (robots.txt-like directives, content-use declarations) and “technical certainty” (WAF rules, verified bot lists, and logs) to support action or negotiation with AI bots.
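As an illustration of the “technical certainty” side, here is a minimal Python sketch of a verified bot list. It checks whether a request that claims to be a known crawler actually comes from an IP range on an allowlist. The bot names (`ExampleBot`, `PartnerBot`) and the ranges are hypothetical placeholders (the CIDRs are reserved documentation addresses, not real crawler IPs); in practice you would load whatever ranges each crawler operator publishes.

```python
import ipaddress

# Hypothetical allowlist: user-agent token -> CIDR ranges published by the operator.
# These ranges are reserved documentation addresses, NOT real crawler IPs.
VERIFIED_BOT_RANGES = {
    "ExampleBot": ["192.0.2.0/24"],
    "PartnerBot": ["198.51.100.0/25"],
}


def classify_request(user_agent: str, remote_ip: str) -> str:
    """Return 'verified', 'impersonator', or 'unknown' for one request."""
    ip = ipaddress.ip_address(remote_ip)
    ua = user_agent.lower()
    for token, cidrs in VERIFIED_BOT_RANGES.items():
        if token.lower() in ua:
            # The request claims to be this bot: confirm the source IP.
            if any(ip in ipaddress.ip_network(cidr) for cidr in cidrs):
                return "verified"
            return "impersonator"
    # No known bot token in the user agent: neither verified nor spoofed.
    return "unknown"
```

Logging the returned label alongside the timestamp and requested path would give legal the record of “what was accessed and when” that supports a challenge or a negotiation.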

The dev department often becomes the default owner because the immediate decision comes down to code and configuration. Engineering and dev teams own the levers that permit or block AI crawlers. For instance, they can implement:

Executives consider data an asset. Do you withhold it to protect differentiation, or do you allow controlled access to gain distribution or licensing revenue?

Many websites now block or meter AI bots solely for cost reasons. Business Insider even listed cases where AI crawlers spiked bandwidth bills and degraded site performance. Leaders need to consider the impact of AI bots on their overall return on investment.

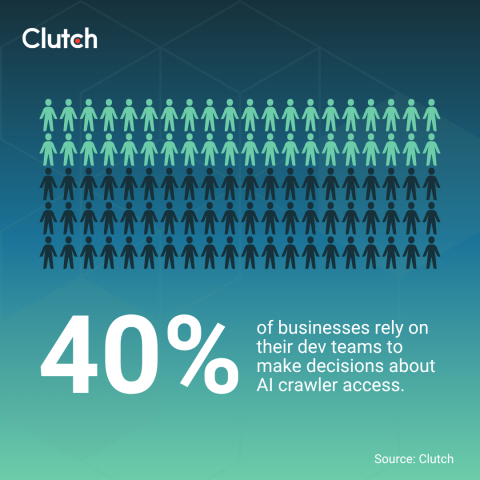

According to Clutch data, 40% of businesses rely on their dev teams to make decisions about AI crawlers because those teams control the technical implementation. Dev teams often make the initial call while the rest of the organization catches up with policy. That happens because:

However, the risk of such one-sided decisions is that tactical changes happen without a formal stance on what data is open, protected, or paid. Decisions made in isolation, without input from marketing, legal, or leadership, may also miss the broader brand and legal strategy and expose the business to risk.

Treating AI access as a simple yes/no question underestimates its impact. Visibility in AI summaries and chat answers is already shaping how buyers choose vendors. Over-restrict, and you risk disappearing from AI-first journeys. Over-share, and you risk uncompensated reuse of content that affects business revenue.

Here are some key consequences to weigh:

The best approach for now is to integrate policy, security, and commercial strategy rather than treating robots.txt as the only solution.

Robots.txt is voluntary, and some AI bots ignore it. Your policy needs teeth in the form of technical controls such as verified bot lists, WAF rules, and rate caps. Meanwhile, marketing and product teams should decide what to open up for reach and what to protect or license.
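To make the “voluntary” point concrete, the sketch below uses Python’s standard `urllib.robotparser` to show what a sample robots.txt asks of different crawlers. `GPTBot` and `ClaudeBot` are the user-agent tokens OpenAI and Anthropic document for their crawlers; the rules, paths, and `example.com` URLs are hypothetical. The parser only reports what a compliant crawler should do, and nothing in it stops a bot that ignores the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block one AI crawler entirely, keep another out of
# premium content, and leave the site open to everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: ClaudeBot
Disallow: /premium/

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(agent: str, url: str) -> bool:
    """What the policy signal says; a non-compliant bot can still fetch the URL."""
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    for agent in ("GPTBot", "ClaudeBot", "SomeOtherBot"):
        for url in ("https://example.com/blog/post",
                    "https://example.com/premium/report"):
            print(agent, url, is_allowed(agent, url))
```

Because the file is only a signal, the same decisions would also need to be enforced at the WAF or application layer for bots that do not honor it.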

This mix helps you block bad actors, enable trusted partners, and test paid access without losing discoverability.

The AI crawler issue affects all teams, including legal, marketing, engineering, and leadership. So a cross-functional team approach can be the best path for handling AI crawler access.

The first step is to form a small group with members from each team who meet on a set schedule, own the policy, and link business goals to technical controls. They can start with an AI Crawler Access Policy that classifies content as open, protected, or negotiable and ties each choice to business outcomes, such as reach, cost, and risk.
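One possible way to write such a policy down so that every team can read it is a small table in code. The Python sketch below maps path prefixes to an access class and the business outcome behind it. The prefixes, rationales, and the choice to treat unclassified content as protected are illustrative assumptions, not recommendations from the report.

```python
from enum import Enum


class AccessClass(Enum):
    OPEN = "open"
    PROTECTED = "protected"
    NEGOTIABLE = "negotiable"


# Hypothetical policy table: (path prefix, access class, business outcome).
POLICY = [
    ("/blog/", AccessClass.OPEN, "reach and discovery"),
    ("/blog/members/", AccessClass.PROTECTED, "premium content"),
    ("/research/", AccessClass.NEGOTIABLE, "licensing revenue"),
    ("/account/", AccessClass.PROTECTED, "privacy and legal risk"),
]

# Assumption: anything nobody has classified yet is treated as protected.
DEFAULT = (AccessClass.PROTECTED, "unclassified content")


def classify_path(path: str) -> tuple[AccessClass, str]:
    """Return (class, rationale) for a URL path; the longest matching prefix wins."""
    best = None
    for prefix, access, reason in POLICY:
        if path.startswith(prefix) and (best is None or len(prefix) > len(best[0])):
            best = (prefix, access, reason)
    if best is None:
        return DEFAULT
    return best[1], best[2]
```

Keeping the rationale next to each rule means a change to the table is also a visible change to the business trade-off the group agreed on.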

Then, turn that policy into clear technical and ethical guidelines that state which bots may crawl, how you will set rate limits, and how you will monitor traffic. Also spell out when to return a 403 (Forbidden), 429 (Too Many Requests), or 402 (Payment Required) response to control bots. Add steps for detecting impersonation and guiding escalation, too. This setup lets you adjust quickly without ad-hoc fixes.
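The guideline for choosing a response code can be sketched as a single decision function. The Python example below is a simplified illustration under stated assumptions: the function name, its parameters, the per-bot limit of 5 requests per 60 seconds, and the order of the checks are all hypothetical, and a production setup would more likely live in a WAF or reverse proxy than in application code.

```python
import time
from collections import defaultdict, deque

RATE_LIMIT = 5        # hypothetical cap: requests per bot per window
WINDOW_SECONDS = 60   # hypothetical window length

# Timestamps of recent allowed requests, per bot name.
_recent = defaultdict(deque)


def decide_status(bot, allowed, content_class, has_license, now=None):
    """Pick an HTTP status for one crawler request.

    bot:           name of the crawler (after any impersonation check)
    allowed:       False if the bot is blocked outright or failed verification
    content_class: "open", "protected", or "negotiable"
    has_license:   True if the bot's operator has a paid or licensed agreement
    """
    now = time.monotonic() if now is None else now

    if not allowed or content_class == "protected":
        return 403  # Forbidden: blocked bot or protected content
    if content_class == "negotiable" and not has_license:
        return 402  # Payment Required: content is available under license only

    # Sliding-window rate cap: drop timestamps older than the window.
    hits = _recent[bot]
    while hits and now - hits[0] >= WINDOW_SECONDS:
        hits.popleft()
    if len(hits) >= RATE_LIMIT:
        return 429  # Too Many Requests: over the cap for this window
    hits.append(now)
    return 200
```

Checking access before counting requests means blocked or unlicensed traffic does not consume a bot’s rate budget; a team could reasonably choose the opposite order if it wants to throttle all traffic first.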

Consider implementing a variation of this example ownership model:

When this structure runs well, a company can change posture quickly. For example, you could decide to block a new crawler reported as ignoring robots.txt, or open access for a partner under license without re-litigating policies each time.

Managing AI crawler access is no longer just a technical task but a key strategic business decision. Treat it like any other revenue-risk policy with shared ownership, monthly data reviews, and clear escalation.

Whether you allow, charge, or block AI crawlers, the point is to act intentionally. Protect what’s proprietary, grow where visibility pays, and put enforcement behind your policy. As AI reshapes discovery and data use, move from "reactive blocking" to "intentional governance" that all your departments can stand behind.