Updated August 21, 2025

New data reveals that most employees use AI regularly, but most of them haven't been trained. As a result, many are learning to use AI on their own and, in the process, putting company data and security at risk.

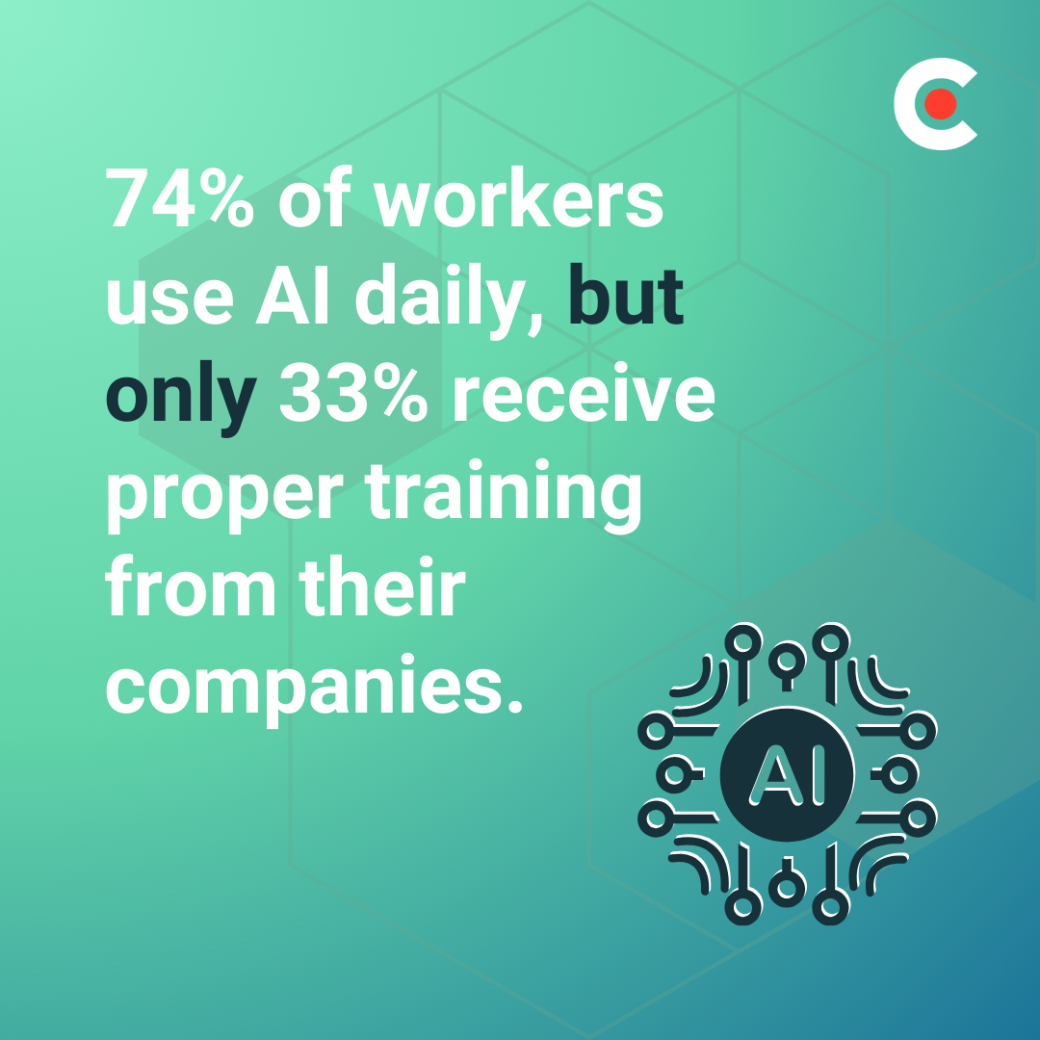

Your employees are teaching themselves AI, and it may be impacting your business more than you think. In a Clutch survey of 250 full-time workers, 74% said they use AI daily, but only 33% receive proper training from their companies.

This self-taught approach, in the absence of corporate AI training, might seem harmless. In reality, it could lead to security breaches, compliance nightmares, and wasted investments.

In this article, you'll discover how DIY AI learning could be secretly undermining your company's performance, and exactly what you can do about it.

Walk through most offices today, and you'll spot the signs: developers debugging code with AI assistants, finance professionals building automated reports, and marketers using content generators to create social media posts. Everyone's using AI, but only a third have been trained on how to use it responsibly.

“[AI] training is essential,” says Penny Moore, a Partner at Tenderling Design. “These tools are powerful, but without thoughtful instruction, they can feel either overwhelming or underwhelming.” Proper training can help employees maximize productivity using AI and have a broader impact on the business as a whole.

AI training focuses on teaching employees how to understand and effectively use AI tools in their roles. This can range from basic awareness—like understanding what AI is and how tools like ChatGPT or image generators work—to more advanced skills like data analysis, machine learning, or how to build AI models.

The goal is to help employees become more confident and efficient in using AI, whether it's to automate tasks, make better decisions, or innovate in their work. “The more people understand how to prompt well, review critically, and integrate outputs into their creative or strategic flow, the more AI becomes a true asset, not just a novelty,” explains Moore.

Without standardized corporate AI training, employees develop their own methods — some brilliant, others dangerously flawed. One team might share sensitive customer data with public AI models. Another might spend hours on tasks that proper AI knowledge could solve in minutes. Both put your business at risk, either from violating compliance regulations or from inefficiently using resources.

Beyond basic AI skills, users should have a solid understanding of both the technical and security aspects of AI, such as data protection, system vulnerabilities, and strategies to prevent breaches, because data exposure is a major risk when using AI tools. Proper training can help prevent security violations and data leaks that can negatively impact the business’s performance and brand perception.

Additionally, they need to be mindful of the ethical considerations surrounding AI use, such as privacy, fairness, transparency, and accountability, to minimize bias and safeguard sensitive information responsibly.

When your team opts for DIY AI learning through experimentation rather than structured programs, they're essentially running uncontrolled experiments with your business processes.

Unlike formal business processes backed by clear documentation and standards, DIY AI learning often leads to inconsistent outcomes and hidden risks, many of which only become apparent when something goes wrong. These can include compliance and security issues, misuse of AI tools, and, ultimately, a lower ROI from the tools themselves.

Without proper training, employees treat AI tools like Google and input whatever they need help with. They don't realize that consumer AI platforms often use their inputs for model training. Your strategic plans, customer lists, and proprietary code could be training someone else's AI right now.

Consider what happened to Samsung in 2023. Samsung engineers unknowingly shared confidential source code with ChatGPT, causing a major security breach. The company had to ban AI tools entirely while scrambling to implement proper protocols. That knee-jerk reaction cost them months of productivity gains.

Beyond data misuse, geographic compliance creates additional risks, and data residency laws add another layer of complexity. For example:

These violations occur because employees don't understand where their data goes or how different AI platforms handle information across borders.

You might have read the horror stories — lawyers submitting AI-generated briefs with fake citations and customer service reps letting AI chatbots make promises the company can't keep. These aren't isolated incidents. They're symptoms of a larger problem: employees using powerful tools without understanding their limitations.

AI hallucination rates still range from 15% to 39% depending on the task, but untrained employees may not know this. They trust AI outputs implicitly, treating generated content as fact. This can damage your company's credibility and reputation, especially when inaccurate information makes its way into client reports, marketing materials, or strategic decisions.

Misuse also means missed opportunities. For example:

The result is a double penalty: your company faces both increased risks and decreased productivity from the same AI tools.

Some companies could be spending millions on AI licenses, infrastructure, and tools. But without proper corporate AI training, they're getting pennies on the dollar in return.

Untrained employees typically use only a small share of the AI capabilities available to them. They might stick to basic tasks such as simple report summaries and basic code completion. Meanwhile, the platform's advanced features for data analysis, workflow automation, and decision support go underused across the organization.

The result can significantly impact your ROI. For instance, your business case might project 40% productivity gains, while the rollout delivers only 5-6% because employees don't know how to use AI tools effectively.
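
To make that gap concrete, here is a minimal Python sketch of the arithmetic behind the 40% projection and the 5-6% result. The function names and the $1,000,000 annual labor cost are hypothetical and chosen only for illustration; they do not come from the survey.

```python
def realized_share(projected_gain: float, actual_gain: float) -> float:
    """Fraction of the projected productivity gain that was actually delivered."""
    if projected_gain <= 0:
        raise ValueError("projected_gain must be positive")
    return actual_gain / projected_gain


def value_shortfall(annual_labor_cost: float,
                    projected_gain: float,
                    actual_gain: float) -> float:
    """Value of the productivity gain that was projected but never realized."""
    return annual_labor_cost * (projected_gain - actual_gain)


projected = 0.40                 # gain promised in the business case
hypothetical_cost = 1_000_000    # assumed annual labor cost, illustration only

for actual in (0.05, 0.06):      # gains actually delivered
    share = realized_share(projected, actual)
    gap = value_shortfall(hypothetical_cost, projected, actual)
    print(f"actual gain {actual:.0%}: {share:.1%} of the projection realized, "
          f"about ${gap:,.0f} of projected value missing")
```

Under these assumptions, a 5-6% gain is only about 12.5-15% of what the business case promised, which is the "pennies on the dollar" outcome described above.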

Cost overruns further compound the problem. Your teams might purchase redundant tools because they don't understand existing capabilities. Disjointed IT systems could also proliferate as departments buy their own AI solutions. Eventually, you end up with a patchwork of platforms, none fully utilized, all draining budgets.

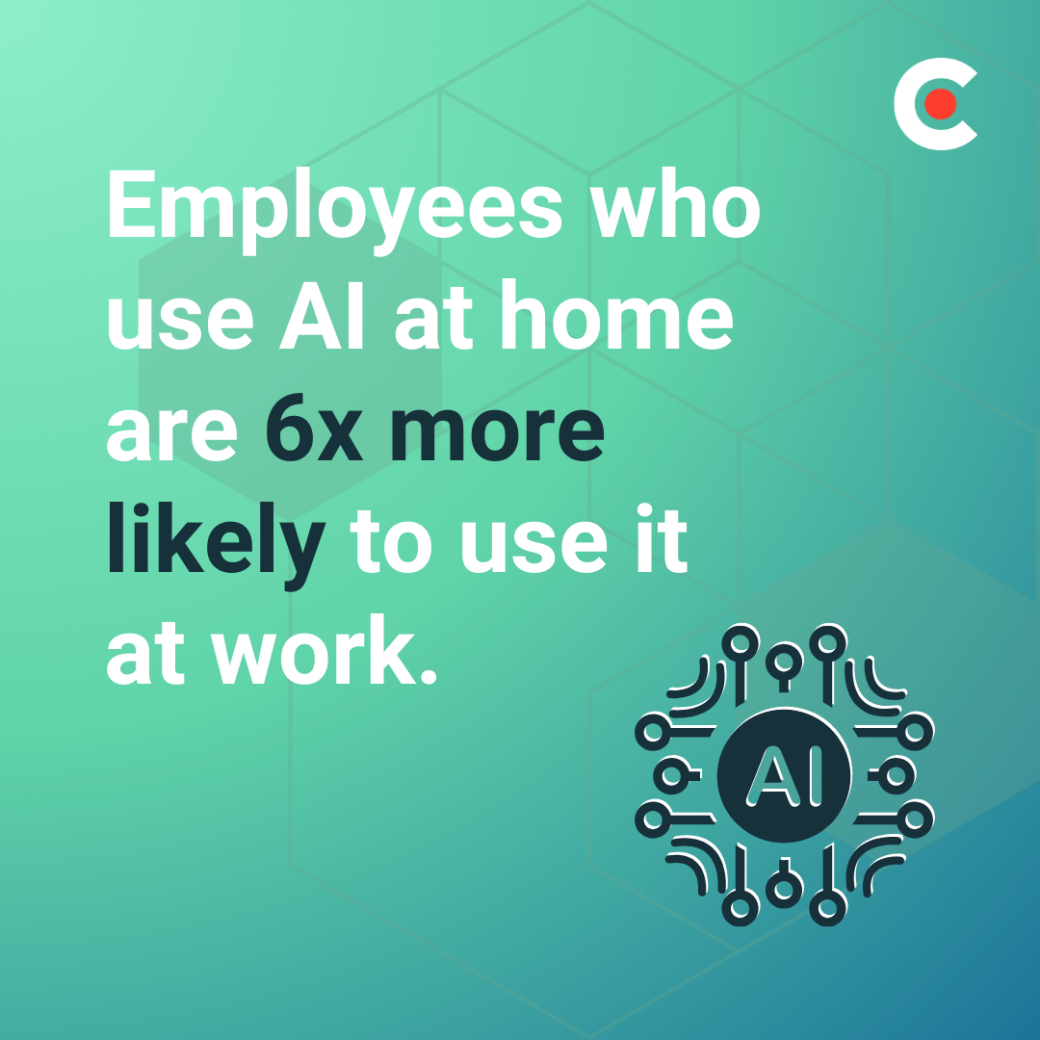

Here's a stat that should reshape your AI strategy: Employees who use AI at home are 6x more likely to use it at work.

Personal AI use creates comfort with the technology. Employees who generate vacation itineraries or help kids with homework using AI can develop an intuitive understanding of prompting, iteration, and output evaluation.

Those who are using ChatGPT and other helpful tools are using them for just about everything. “At home, I use AI for everything from recipe planning to travel research to creative writing with my kids. I’ll use it to summarize books I want to preview, draft thank-you notes or invitations, or even help plan family projects,” explains Moore. “It’s like having a really helpful, non-judgmental assistant in your pocket—and it frees up my mental bandwidth so I can be more present with the people I love.”

These home experimenters bring invaluable skills to your workplace. They understand prompt engineering because they've spent weekends perfecting personal finance reports with AI. They grasp workflow automation from organizing their own lives. They've developed critical evaluation skills by fact-checking AI outputs for their kids' homework help.

The skills transfer seamlessly:

Your most innovative AI users likely started experimenting on their own time. They're your early adopters and informal trainers. But relying on voluntary learning creates winners and losers. Not everyone has time or inclination for weekend AI exploration. Moreover, personal AI use doesn't cover workplace ethics, data security practices, or training for work-specific tools.

Smart organizations recognize this trend and leverage it rather than fighting it.

Your employees' personal AI experiments offer a blueprint for corporate training success. The key? Align your programs with how people actually learn and use AI in real life.

Traditional corporate training fails because it's divorced from real use cases. Employees sit through PowerPoints about "responsible AI use" while itching to solve actual problems. By studying how people naturally learn AI, you can design programs that stick.

Here are a few practical recommendations for putting this into practice:

"We organize regular meet-ups to all company employees, covering AI-related topics about its usefulness, practical applications in various spheres of business, and its value and impact on the world in general," says Chad West, Managing Director at *instinctools. "[W]e do not limit opportunities to learn and grow, so everyone has access to these webinars and can present on any insightful topic on AI."

Create safe spaces where employees can experiment without risk. Think of it as a corporate playground for AI learning.

Microsoft's AI Business School provides a great model. They offer hands-on labs where participants can experiment with AI tools using fictional company data. Executives solve realistic business problems, such as optimizing supply chains and analyzing customer sentiment. Learning happens through doing, not listening.

Also, your sandbox should mirror your actual tech stack.

Generic training creates generic results. Specific training drives specific value.

Not every employee has time for week-long AI bootcamps. Your executives juggle dozens of priorities, and they need learning that fits between meetings.

Break AI education into modules of a few minutes each, and cover one concept, one tool, or one technique at a time. Also, make content available on demand across devices.

Moreover, focus on immediate applicability. Each module should solve a real problem. For example:

Avoid theoretical frameworks. Your leaders don't need to understand transformer architectures. They need to know which AI tools or functions to use for better results. Save the deep technical content for your IT teams.

Your informal AI champions already exist. They're the employees already evangelizing solutions in the shadows, teaching colleagues during lunch breaks, and sharing tips in private Slack channels.

Give them platforms and recognition. For instance:

Also, incentivize knowledge sharing across your organization. When someone discovers a brilliant AI use case, make it worth their while to document and distribute it.

These champions then train their teams, creating organic adoption that feels authentic rather than mandated.

Your employees will use AI with or without your help. The question is whether they'll use it safely, effectively, and strategically.

Right now, DIY learning dominates AI adoption trends across industries. Workers cobble together knowledge from YouTube tutorials, forum posts, and expensive trial-and-error. This approach might feel innovative, but it's actually holding your organization back.

The hidden costs compound daily in the form of security breaches from untrained users, compliance violations from misunderstood regulations, and wasted investments in underutilized tools.

But you can flip this dynamic starting today. Structured corporate AI training doesn't mean boring workshops or rigid curricula. It means channeling your employees' natural curiosity into productive learning paths.

The organizations winning with AI are those that recognized early that AI learning requires intentional investment and thoughtful structure. The gap between DIY learning and structured training is where competitive advantage lives. It's time to bridge it.

Your next step? Invest in structured AI learning to keep up with innovation, safety, and productivity goals.