Updated June 27, 2025

Developers should address the ethical implications of AI by being transparent about their development process, minimizing AI bias, and staying accountable for their algorithms. Keeping up with industry research and monitoring models continuously helps organizations comply with AI ethics and regulatory guidelines.

AI has become an integral part of most industries, with many companies investing in algorithms and systems to improve their operations and provide cost-effective solutions to clients. AI can be found in almost every business operation, from marketing and advertising to customer service and data analytics.

However, there are many ethical dilemmas to consider when building your own AI model. AI's inherent bias is among the top concerns, with algorithms sometimes perpetuating discrimination based on skewed or misrepresentative data. Privacy and security are also major issues, as AI platforms that handle sensitive customer data can become a prime cyberattack target.

“When developing AI algorithms, developers must keep three crucial ethical factors in mind: selecting diverse, representative data, being able to detect biases ahead of time, and maintaining data privacy throughout the development process,” said Andrew Kalyuzhnyy, the CEO of the software and AI development company 8allocate. “Ethical considerations like these help ensure that AI systems treat different user groups fairly when making decisions, promoting accountability in AI development.”

It's important for business leaders to examine every aspect of AI, acknowledge its benefits, be aware of potential risks, and take precautionary steps to minimize their negative impact. Below, we discuss the need for AI ethics and why responsible practices must accompany outsourced AI services.

One of the major concerns regarding AI is bias. AI may be "intelligent," but it's "artificial." That means the tech's intelligence is limited to its dataset, which is created by humans. And as we all know, humans aren't perfect. Human biases can skew training data, leading to distorted outputs that can have lasting impacts on your business, users, and the rest of the world.

“Bias often emerges from non-representative or skewed data, leading to issues such as unfair credit scoring or biased hiring algorithms,” Kalyuzhnyy said. That's why it's imperative for outsourcing partners to use diverse datasets and learning models that are optimized for fairness in order to account for bias.

Unfortunately, bias in AI can lead to discrimination, denied opportunities, and the misrepresentation of different groups based on their race, gender, age, and other characteristics. Even though this usually isn't the intention, the organization using the system will be held accountable. These consequences can impact your reputation and result in fines and lawsuits.

Learn more in Clutch’s recent article, ‘Bias in AI: What Devs Need to Know to Build Trust in AI.’

While some argue that it may be impossible to create impartial AI algorithms, you can combat bias in AI by following industry best practices and proactively auditing your AI model.

“To mitigate bias, AI developers should integrate data from diverse datasets, choose learning models that are optimized for fairness, and initiate routine model audits to monitor for potential biases,” Kalyuzhnyy advised. By following these guidelines, developers can significantly reduce the risk of biased AI outputs, ultimately fostering more equitable decision-making processes and more accurate results.
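As a rough illustration of what a routine model audit might check, the sketch below compares how often each group receives a positive decision. The field names (`group`, `approved`), the sample records, and the 0.2 review threshold are all assumptions made for this example, not values from a specific standard or from the teams quoted here.

```python
from collections import defaultdict

def selection_rates(records, group_key="group", outcome_key="approved"):
    """Return the share of positive outcomes for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in records:
        totals[row[group_key]] += 1
        if row[outcome_key]:
            positives[row[group_key]] += 1
    return {g: positives[g] / totals[g] for g in totals}

def parity_gap(rates):
    """Largest difference in selection rate between any two groups."""
    return max(rates.values()) - min(rates.values())

# Hypothetical audit sample: model decisions tagged with a demographic group.
sample = [
    {"group": "A", "approved": True},
    {"group": "A", "approved": True},
    {"group": "A", "approved": False},
    {"group": "A", "approved": True},
    {"group": "B", "approved": True},
    {"group": "B", "approved": False},
    {"group": "B", "approved": False},
    {"group": "B", "approved": False},
]

rates = selection_rates(sample)
gap = parity_gap(rates)
needs_review = gap > 0.2  # assumed threshold; pick one that fits your context
```

A large gap does not prove the model is unfair on its own, but it is one signal an auditor could use to decide where to look more closely.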

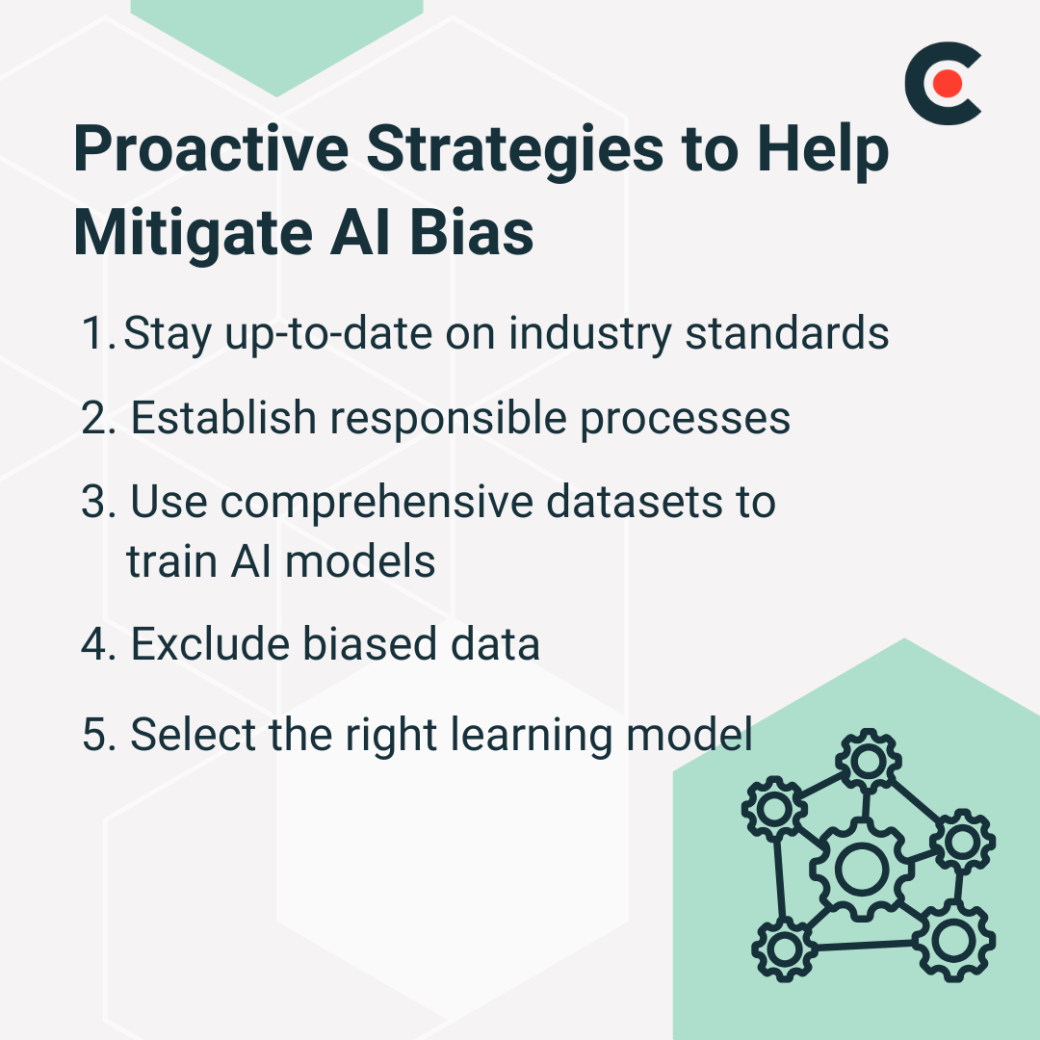

To further mitigate AI bias, organizations and developers can adopt several proactive strategies, such as:

It's important for developers to stay informed of the latest research and best practices in AI development. While government regulations are still catching up as AI evolves rapidly, standards set by GDPR and the EU AI Act serve as ethical frameworks. These frameworks guide privacy, data protection, and transparency, ensuring that AI systems adhere to principles that defend user rights and promote fairness.

Additionally, developers should continue to educate themselves on bias in AI by following industry publications that address these issues. For instance, the AI Now Institute publishes annual reports that you can use to learn about new developments in the AI space and how to mitigate bias. Another resource is Partnership on AI, a platform that brings diverse communities together to address important AI-related questions.

Developers can proactively address ethical concerns and foster trust with end-users by aligning development practices with these standards.

Incorporating AI ethics into the development process requires a proactive approach. One way to build fairer and more effective AI models is to implement processes like hiring third parties to audit and monitor your AI model before deployment. These audits serve as an impartial tool for identifying potential biases.

In addition, establishing an in-house ethics committee to oversee AI development can provide another layer of scrutiny, ensuring that ethical concerns are addressed throughout the development lifecycle. Regularly updating these protocols in line with new ethical guidelines and advancements in AI technology will help maintain these standards and encourage ongoing accountability.

You can also learn from other tech companies, such as Google. The company's Responsible AI Practices document can provide a framework for your team to follow. The United Nations Educational, Scientific and Cultural Organization (UNESCO) also has an AI ethics recommendations framework for developers to integrate into their development processes.

A lack of information in the dataset used to train AI models is a common source of bias and can lead to skewed and inaccurate results.

“Errors frequently occur because data frameworks fail to represent the full range of scenarios or have biases in examples,” Kalyuzhnyy said. Insufficient data diversity can result in the AI model developing a limited understanding of the world, failing to account for the myriad variables across different demographics.

This is particularly concerning when the data disproportionately represents certain genders, ethnicities, or social groups, causing the AI to miss out on the nuances essential for fair decision-making processes. To mitigate this, developers must proactively curate comprehensive and representative datasets, enabling the AI to produce equitable and unbiased outputs that reflect a more holistic view of reality.
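One way to spot this kind of imbalance before training is to compare each group's share of the dataset with a reference share, such as the population the model will serve. The following is a minimal sketch under that assumption; the group labels, reference shares, and 5% tolerance are hypothetical.

```python
from collections import Counter

def representation_gaps(groups, reference_shares):
    """Dataset share minus reference share for every reference group."""
    if not groups:
        raise ValueError("groups must not be empty")
    counts = Counter(groups)
    total = len(groups)
    return {
        g: counts.get(g, 0) / total - share
        for g, share in reference_shares.items()
    }

def underrepresented(groups, reference_shares, tolerance=0.05):
    """Groups whose dataset share falls short of the reference by more than tolerance."""
    gaps = representation_gaps(groups, reference_shares)
    return sorted(g for g, gap in gaps.items() if gap < -tolerance)

# Hypothetical dataset labels and the shares we would expect to see.
dataset_groups = ["A"] * 70 + ["B"] * 28 + ["C"] * 2
expected = {"A": 0.5, "B": 0.3, "C": 0.2}

short = underrepresented(dataset_groups, expected)
```

Here `short` lists the groups that may need more data collected before the dataset can be called representative.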

Developers should make every effort to exclude biased data from their training datasets. They can do this by thoroughly reviewing and cleaning their data.

Biased data is information that contains implicit biases. For example, if the dataset used to train an AI model only includes data from one specific demographic, the resulting model will likely be biased. Similarly, some data sources, such as social media platforms, may contain biased information due to the inherent biases of their users.

When cleaning your data, remove identifying information such as gender, race, or age. You should also diversify your data sources to ensure a more representative sample.
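The cleaning step described above could look something like the sketch below, which drops the listed attributes from each record before training. The record layout and the other field names (`income`, `tenure_months`) are made up for illustration.

```python
# Fields named in the text; extend this set to match your own schema.
SENSITIVE_FIELDS = {"gender", "race", "age"}

def strip_sensitive(records, sensitive=SENSITIVE_FIELDS):
    """Return copies of the records without the sensitive fields."""
    return [
        {key: value for key, value in row.items() if key not in sensitive}
        for row in records
    ]

# Hypothetical raw records before cleaning.
raw = [
    {"income": 52000, "tenure_months": 18, "gender": "F", "age": 41},
    {"income": 38000, "tenure_months": 6, "race": "X", "age": 29},
]

cleaned = strip_sensitive(raw)
```

The function returns new dictionaries and leaves `raw` untouched, so the original attributes remain available if a later audit needs to compare outcomes across groups.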

It also helps to bring people from underrepresented communities into the development process. With them on board, identifying and removing potential biases can be easier.

A diverse team of stakeholders should select the training data. That team shouldn’t be composed only of data scientists; it should also include other groups that add diversity to the selection process. Developers should then build bias prevention tools into the neural networks to weed out bias.

A one-time effort isn’t enough, though. Teams should conduct ongoing testing and monitoring with real-world examples and data to ensure no bias creeps in later in the development process.
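For ongoing monitoring, one simple check is to compare model accuracy across groups on each new batch of labeled real-world examples. This sketch assumes every example carries a `group`, a `predicted` value, and an `actual` value; those names and the 0.1 gap limit are illustrative choices, not an established rule.

```python
from collections import defaultdict

def accuracy_by_group(examples):
    """Fraction of correct predictions for each group in a batch."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for ex in examples:
        total[ex["group"]] += 1
        if ex["predicted"] == ex["actual"]:
            correct[ex["group"]] += 1
    return {g: correct[g] / total[g] for g in total}

def flag_accuracy_gap(examples, max_gap=0.1):
    """Return (flagged, per-group accuracy) for one monitoring batch."""
    accuracy = accuracy_by_group(examples)
    gap = max(accuracy.values()) - min(accuracy.values())
    return gap > max_gap, accuracy

# Hypothetical batch of production examples with known outcomes.
batch = [
    {"group": "A", "predicted": 1, "actual": 1},
    {"group": "A", "predicted": 0, "actual": 0},
    {"group": "A", "predicted": 1, "actual": 1},
    {"group": "A", "predicted": 0, "actual": 0},
    {"group": "B", "predicted": 1, "actual": 0},
    {"group": "B", "predicted": 0, "actual": 0},
    {"group": "B", "predicted": 1, "actual": 1},
    {"group": "B", "predicted": 0, "actual": 1},
]

flagged, accuracy = flag_accuracy_gap(batch)
```

Running a check like this on a schedule gives the team a repeatable signal instead of relying on a single pre-launch review.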

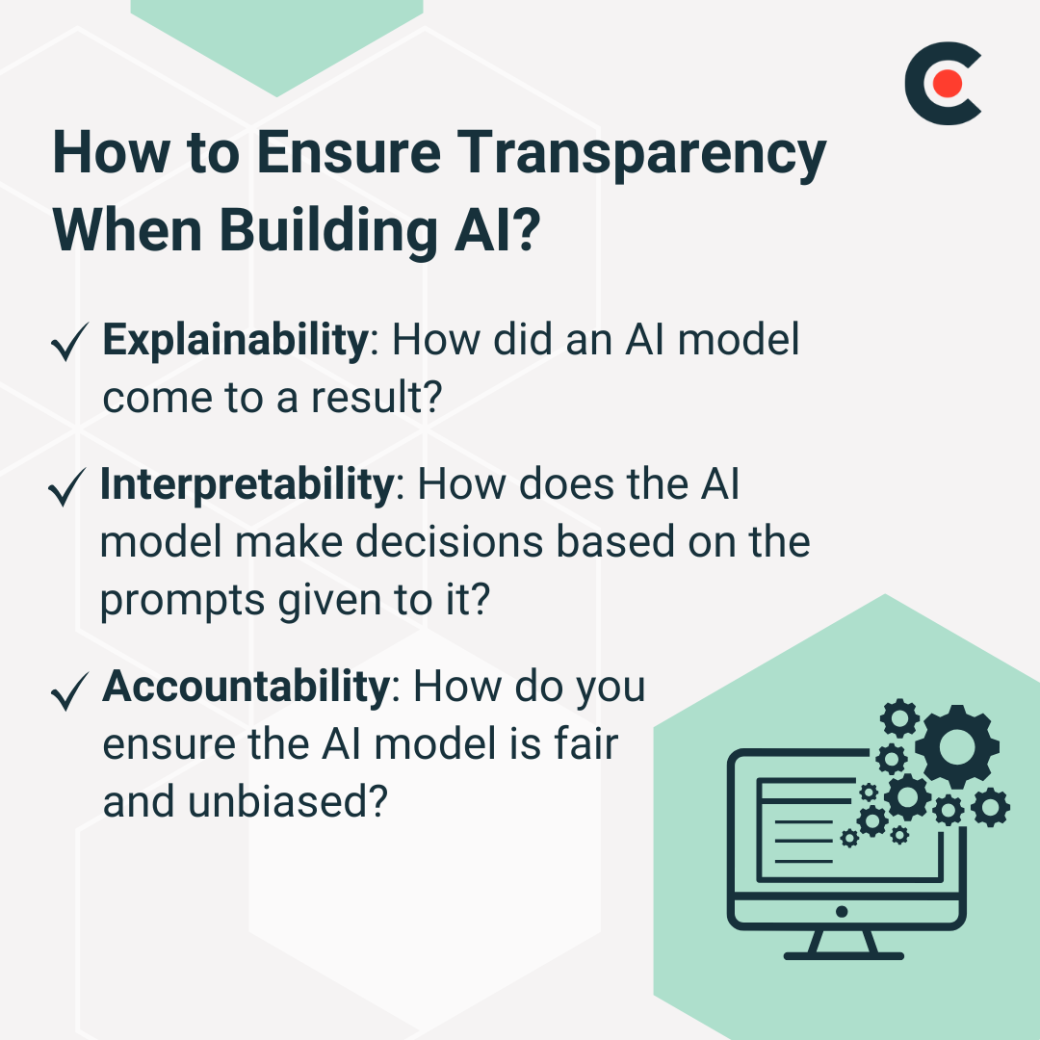

Ideally, AI developers should be able to explain and justify the outputs of their models in a way that is understandable to users and stakeholders.

When it comes to transparency, companies need to disclose their data sources and be able to explain how they impact the AI model’s output. “Best practices include using explainable algorithms to clarify AI decision-making, providing educational materials, tutorials, and technical support to help users interpret AI outputs, and conducting frequent AI audits with human oversight,” said Kalyuzhnyy.

Your users and stakeholders should understand how the data is selected, stored, and processed. One way to enhance transparency is to document your processes and make that documentation accessible to all stakeholders. The document should include the model name, purpose, risk level, model policy, intended domain, training data, bias, fairness metrics, and explainability metrics. Include testing and validation results so that end users can see how the model performed during development.
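The documentation fields listed above can be captured in a small structured record so they are easy to publish and keep up to date. The class below is a hypothetical sketch, not an established schema; the class name, field types, and every example value are assumptions.

```python
import json
from dataclasses import asdict, dataclass, field

@dataclass
class ModelDocumentation:
    """Structured record of the fields a model document should cover."""
    model_name: str
    purpose: str
    risk_level: str
    model_policy: str
    intended_domain: str
    training_data: str
    known_bias: str
    fairness_metrics: dict = field(default_factory=dict)
    explainability_metrics: dict = field(default_factory=dict)
    validation_results: dict = field(default_factory=dict)

    def to_json(self):
        """Serialize the record so it can be shared with stakeholders."""
        return json.dumps(asdict(self), indent=2)

# Hypothetical example values, for illustration only.
doc = ModelDocumentation(
    model_name="loan-screening-v1",
    purpose="Rank loan applications for manual review",
    risk_level="high",
    model_policy="Human reviewer makes the final decision",
    intended_domain="Consumer lending",
    training_data="Internal applications, described in a separate data sheet",
    known_bias="Fewer examples from applicants under 25",
    fairness_metrics={"selection_rate_gap": 0.04},
    explainability_metrics={"top_features_reported": 5},
    validation_results={"holdout_accuracy": 0.91},
)

published = doc.to_json()
```

Storing the record as JSON alongside the model makes it straightforward to publish on an internal page or hand to an auditor.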

Finally, you should provide an option for users to opt out of data collection. This will help build their trust in your offering.

Just like transparency, accountability is an integral part of ethical AI development. In a developer survey, about 50% of coders said that humans who create AI tech are responsible for its ramifications. “AI accountability begins with business leaders' commitment to implement AI fairly and securely,” said Kalyuzhnyy.

End users and regulatory authorities seem to share this sentiment, too. Accountability is one of the Organisation for Economic Co-operation and Development (OECD) principles for values-based AI and is a core component of the AI Research, Innovation, and Accountability Act.

The responsibility for creating ethical AI models lies with those who build them.

You can demonstrate accountability by allowing third-party audits. Let independent organizations examine your AI model and certify it as trustworthy. You can also implement internal audits to check the fairness and accuracy of your AI tools regularly. Even better, get approved by recognized institutions to show your commitment to ethical AI development.

There's no denying that AI has the potential to streamline tasks in almost every industry. So, it's understandable that B2B outsourcing partners are developing new AI tools or integrating AI features into their solutions.

However, AI integration comes with risks of bias and lack of transparency. You can address these issues by prioritizing ethical AI development and building organizational accountability. You should also keep up with industry developments, new regulations, best practices, and certifications.