Bot traffic on websites is surging, and businesses are taking notice. With the widespread use of AI tools like ChatGPT, Perplexity, and Claude, AI training bots are crawling websites at a massive scale, and it's causing a host of problems for businesses.

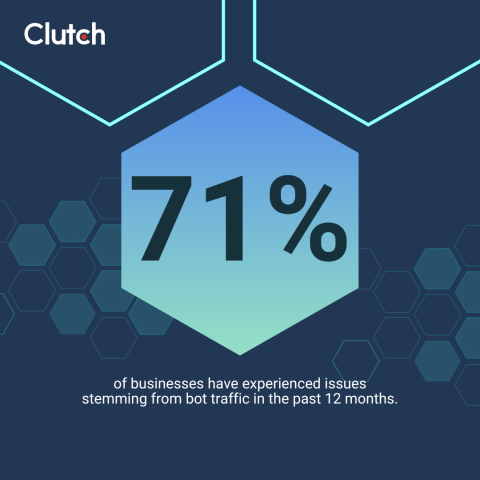

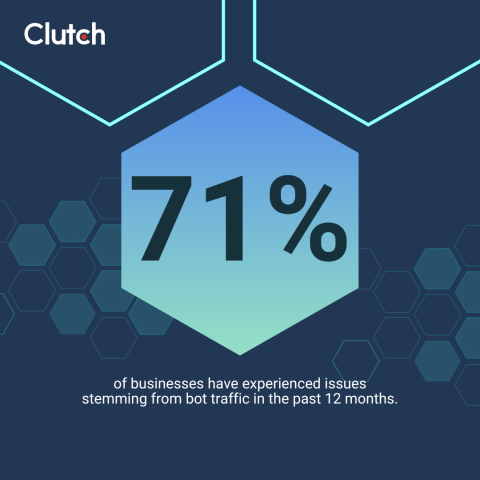

According to new Clutch data, 71% of businesses have experienced issues stemming from bot traffic in the past 12 months. The largest concern is performance and bandwidth strain (42%), but some organizations are also struggling to differentiate between bots and legitimate users.

To measure the impact of AI crawlers, 63% of companies now actively track bot activity. Tracking bots helps in the following ways:

- Distinguishes legitimate users from bots, so product teams don’t optimize for fake behavior

- Provides accurate data in analytics and revenue attribution

- Keeps pages fast by shedding abusive traffic that overloads bandwidth

- Surfaces suspicious activity early, before a cyberattack can cause major damage

- Grounds operational decisions in clean data, not inflated traffic sessions
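As a first step toward tracking, the sketch below tallies requests by self-declared crawler name. It is a minimal illustration, not a detection system: the token list is an assumption you would maintain yourself, the function names are hypothetical, and it only catches bots that announce themselves in the User-Agent header.

```python
from collections import Counter

# Substrings that some AI crawlers include in their User-Agent header.
# This list is an illustrative assumption; maintain your own from vendor docs.
AI_CRAWLER_TOKENS = ("GPTBot", "ClaudeBot", "PerplexityBot", "CCBot")


def classify_user_agent(user_agent: str) -> str:
    """Return the first matching crawler token, or 'other' if none matches."""
    lowered = user_agent.lower()
    for token in AI_CRAWLER_TOKENS:
        if token.lower() in lowered:
            return token
    return "other"


def tally_requests(user_agents: list[str]) -> Counter:
    """Count requests per declared crawler token."""
    return Counter(classify_user_agent(ua) for ua in user_agents)


# Made-up sample headers, as they might appear in an access log.
sample = [
    "Mozilla/5.0 (compatible; GPTBot/1.0)",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0",
    "Mozilla/5.0 (compatible; ClaudeBot/1.0)",
]
counts = tally_requests(sample)
```

Bots that spoof a browser User-Agent land in the "other" bucket, which is why the tools below layer behavioral and fingerprint signals on top of declared identity.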

To effectively monitor bot traffic on your website, though, you need the right tools. Below, you’ll find insights on seven top bot detection tools and how they can help you spot and handle AI traffic.

Top Bot Detection Tools for Tracking AI Crawlers

AI companies are shifting from slow training scrapers to on-demand retrieval bots that fetch and summarize pages in real time. That means traffic arrives in bursts, often from headless browsers with distinctive fingerprints.

The best detection systems combine behavioral analysis, device/browser fingerprinting, and edge-level controls to catch evasive patterns without degrading the user experience (UX) for legitimate users.

1. Radware Bot Manager

Radware Bot Manager is a full-stack bot mitigation platform that protects web, mobile, and APIs. It uses AI-driven cross-correlation, a global attacker feed, and both client- and server-side signals to detect sophisticated AI automation, including large language model (LLM) scrapers.

Key Features

Modern AI crawlers don’t always announce themselves, so you need layered sensing before you ever block a request. Key capabilities of Radware include:

- Device and browser fingerprinting with hundreds of parameters

- Behavioral modeling to flag high-speed, non-human navigation

- AI-based cross-correlation and global attacker intelligence

- Multi-surface coverage across web apps, mobile SDKs, and APIs

Taken together, these controls help operations teams respond with precision instead of blunt allow/block lists.
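To make "high-speed, non-human navigation" concrete, here is a minimal sliding-window sketch that flags a client once it exceeds a request budget. It is not Radware's method. The class name, thresholds, and the idea of keying on a single client identifier are assumptions for illustration.

```python
from collections import deque


class BurstDetector:
    """Flag a client that sends more than max_requests within window_seconds."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits: dict[str, deque] = {}

    def observe(self, client_id: str, timestamp: float) -> bool:
        """Record one request; return True if the client is currently bursting."""
        hits = self._hits.setdefault(client_id, deque())
        hits.append(timestamp)
        # Drop timestamps that have fallen out of the sliding window.
        while hits and timestamp - hits[0] > self.window_seconds:
            hits.popleft()
        return len(hits) > self.max_requests


detector = BurstDetector(max_requests=3, window_seconds=1.0)
flags = [detector.observe("client-a", t) for t in (0.0, 0.1, 0.2, 0.3)]
```

In practice the key would be a device fingerprint or session rather than an IP address, since the crawlers described here rotate IPs.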

How It Can Help Track AI Crawlers

Radware’s client sensors and server-side analysis collect a deep fingerprint per session, then cross-correlate anomalies. Paired with a real-time attacker feed, the platform surfaces clusters of suspicious AI agents, even when they spoof user-agents or rotate IPs. That fingerprint depth is useful for distinguishing different bots hitting specific content paths at odd intervals, so you can label, rate-limit, or challenge with minimal impact to humans.

Radware’s Strengths

Radware works well when you need depth and control across teams:

- Enterprise-grade breadth: Radware can be deployed widely, with SDKs and policies for web, mobile, and APIs. It's a strong fit for complex enterprise environments.

- False-positive discipline: Rich signal sets and correlation reduce needless challenges compared to rigid rule stacks.

- Flexible mitigations: Go beyond blocking with rate caps, interstitials, and adaptive challenges to treat AI crawlers differently than human sessions.

- Omnichannel protection: Unified visibility prevents bots from shifting to the weakest interface.

This combination suits teams that want strong controls without crushing UX.

Radware’s Limitations

- Higher cost: Comprehensive coverage and global scale can price out smaller sites.

- Integration complexity: Getting client tags and API integrations right requires careful rollout.

- Data plumbing friction: Some users report friction in exporting data or automating policy tuning through external systems.

- Evasion risk: Sophisticated bots may still alter fingerprints and behaviors to avoid detection.

For most large organizations, the control you gain outweighs setup complexity.

2. DataDome

DataDome is a cloud bot-management platform known for fast time-to-value and a strong balance between detection accuracy and user experience. It protects web, mobile, and APIs, including controls for LLM scraping and agentic AI.

Key Features

For teams that want speed without sacrificing signal quality, DataDome focuses on:

- Client and server fingerprinting with anomaly checks

- Behavioral detection and real-time risk scoring

- Global point of presence (PoP) footprint for low-latency inspection

- LLM/AI crawler controls for content theft and scraping

That mix gives product and security teams clean dashboards with actionable details.

How It Can Help Track AI Crawlers

DataDome inspects browser and Transport Layer Security (TLS) fingerprints, request timing, and path patterns to spot AI agents, even when they rotate IPs or spoof user-agents. It’s useful if your priority is low friction while still identifying unauthorized AI bots.
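Request timing is one of the easier signals to reason about. The sketch below scores how regular a session's request gaps are using the coefficient of variation (standard deviation divided by mean). It is a simplified illustration rather than DataDome's scoring. The function names and the 0.1 threshold are assumptions.

```python
from statistics import mean, pstdev
from typing import Optional


def interarrival_cv(timestamps: list[float]) -> Optional[float]:
    """Coefficient of variation of the gaps between consecutive requests."""
    if len(timestamps) < 3:
        return None  # too few requests to judge regularity
    ordered = sorted(timestamps)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    average = mean(gaps)
    if average == 0:
        return 0.0  # all requests share one timestamp
    return pstdev(gaps) / average


def looks_scripted(timestamps: list[float], cv_threshold: float = 0.1) -> bool:
    """Very regular gaps (low variation) suggest a scheduler, not a person."""
    cv = interarrival_cv(timestamps)
    return cv is not None and cv < cv_threshold
```

A session requesting a page exactly every two seconds scores a variation of zero and is flagged, while human browsing tends to produce irregular gaps. Evasive bots can add random delays, so a timing check like this is one input among several.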

DataDome's Strengths

- Accuracy without friction: Aims for low false positives while keeping pages fast and challenges rare

- API-first coverage: Treats API traffic as a first-class surface, not an afterthought

- Global scale, low latency: More than 30 PoPs help keep inspection overhead down

- Rapid rollout: Connects quickly through CDN/WAF or agent-lite options

These features are good for teams that want rapid protection without heavy tuning.

DataDome's Limitations

- Pricing skews to volume: Smaller sites may find the cost curve less attractive.

- Evasion exists: A UC Davis study found evasive bots altered fingerprints to bypass anti-bot services. Their honey-site tests measured a 52.93% evasion rate against DataDome.

- Customization friction: Deeper customization might need vendor support for edge cases.

Use DataDome when speed and low UX impact matter more than deep in-house tinkering.

3. Cloudflare AI Crawl Control

Cloudflare AI Crawl Control applies AI crawler policy and monetization at the CDN edge. It lets you block or even charge AI agents via standardized responses (including HTTP 402 workflows) and central policy, with crawler verification and shared intelligence across a massive network.

Key Features

For organizations already on Cloudflare, it gives teams centralized ways to handle AI crawlers:

- Default blocking of known AI crawlers (opt-in/opt-out)

- Pay-per-crawl to set terms and pricing with 402 flows

- Crawler verification and identification at the edge

- Simple rules to allow, challenge, or meter traffic

These options put policy and economics upstream — before bots touch your origin.

How It Can Help Track AI Crawlers

Because controls sit at the edge, you get telemetry and enforcement before requests reach your infrastructure. For example, you can allow search indexers, block unlicensed model trainers, and charge retrieval bots that comply with your terms. Those 402-based flows create a negotiation channel rather than a one-size-fits-all blocklist.
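The allow, block, and charge policy described above can be sketched as a small decision function. This is not Cloudflare's API or configuration format. The enum, the function name, and the `has_paid` flag are hypothetical, and the only standard piece is the HTTP 402 Payment Required status code.

```python
from enum import Enum
from http import HTTPStatus


class CrawlerPurpose(Enum):
    SEARCH_INDEXING = "search_indexing"
    MODEL_TRAINING = "model_training"
    RETRIEVAL = "retrieval"


def decide_status(purpose: CrawlerPurpose, has_paid: bool = False) -> HTTPStatus:
    """Map a verified crawler's purpose to an HTTP response status."""
    if purpose is CrawlerPurpose.SEARCH_INDEXING:
        return HTTPStatus.OK  # allow search indexers
    if purpose is CrawlerPurpose.MODEL_TRAINING:
        return HTTPStatus.FORBIDDEN  # block unlicensed training crawlers
    # Retrieval bots get content only once payment terms are met.
    return HTTPStatus.OK if has_paid else HTTPStatus.PAYMENT_REQUIRED
```

The sketch assumes the crawler's purpose has already been verified upstream. Deciding the purpose reliably is the hard part, and that is the work the edge platform does.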

AI Crawl Control's Strengths

- Upstream filtering reduces origin load: Bots get handled at the CDN layer.

- Easy integration for Cloudflare customers: Policies live alongside WAF and caching.

- Network effects: Benefit from global scale and shared crawler intel.

- Flexible rules and monetization: Align access with licensing, not just blocks.

For teams already using the Cloudflare ecosystem, this might be an easier way to put a gate in front of AI bots.

AI Crawl Control's Limitations

- Less specialized than pure bot platforms: Cloudflare's solutions are great at policy and edge enforcement, but they are not a full behavioral analytics suite.

- Can suffer from false positives: Heuristic misfires can happen if rules are broad.

- Ecosystem lock-in: Deepest controls require you to be a part of the Cloudflare ecosystem.

Even with those trade-offs, Cloudflare AI Crawl Control is compelling for immediate coverage and licensing leverage.

4. Akamai Bot Manager

Akamai offers bot detection tightly integrated with its global edge. It emphasizes AI-assisted behavior analysis, fingerprinting, and stealthy defenses that don’t tip off attackers, with specific guidance for LLM scrapers.

Key Features

- Advanced detection (behavior and fingerprints)

- Edge-first enforcement to cut origin cost

- Good-bot versus bad-bot policy to avoid collateral damage

- Integration with WAF/DDoS/caching for one control plane

These integrations make day-to-day operations simpler for cybersecurity teams.

How It Can Help Track AI Crawlers

Akamai’s scale helps spot coordinated crawling patterns across customers. For AI agents that don’t honor robots.txt rules, Bot Manager can interrogate the client early and shape responses according to the crawler's purpose. For example, you can let indexers through while gating AI training or retrieval.
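You can check for robots.txt violations in your own logs with Python's standard `urllib.robotparser` module. The sketch below is independent of Akamai. The crawler name `ExampleAIBot`, the rules, and the request rows are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that bars one AI crawler from /articles/.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /articles/

User-agent: *
Allow: /
"""


def find_violations(
    robots_txt: str, requests: list[tuple[str, str]]
) -> list[tuple[str, str]]:
    """Return the (user_agent, path) rows that robots.txt disallows."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [
        (agent, path)
        for agent, path in requests
        if not parser.can_fetch(agent, path)
    ]


logged = [
    ("ExampleAIBot/1.0", "/articles/one"),  # disallowed for this agent
    ("ExampleAIBot/1.0", "/about"),         # allowed
    ("Mozilla/5.0", "/articles/one"),       # allowed under the wildcard rule
]
violations = find_violations(ROBOTS_TXT, logged)
```

A crawler that appears in the violations list has shown that it ignores your stated rules, which is a reasonable trigger for gating it at the edge.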

Strengths

Operational advantages you can expect:

- Early edge detection: Edge mitigation/offload reduces origin load and saves compute cost.

- Good versus bad bot differentiation: This capability reduces the chances of collateral damage.

- Massive footprint: Akamai’s network scale brings performance and telemetry advantages.

- Tightly coupled stack: There's good integration with Akamai's other capabilities, such as WAF, caching, and DDoS.

If you’re already on Akamai, this is a practical path to AI bot governance.

Limitations

Balance these in your plan:

- Architecture trade-offs: Deepest capabilities live inside the Akamai ecosystem.

- Evasion is possible: Adaptive bots still probe heuristics and can mimic human-like behavior to evade detection.

- Costs scale with traffic: Traffic-based pricing can be tough for smaller sites.

Despite these limitations, it provides strong edge-based bot detection and mitigation for teams already using Akamai.

5. Netacea

Netacea provides agentless, server-side bot defense focused on behavioral analytics and high-fidelity decisioning. The company highlights extremely low false-positive rates and high efficacy against sophisticated bot automation.

Key Features

Netacea’s design suits teams that avoid heavy client code:

- Agentless/server-side detection across web, apps, and APIs

- Behavioral machine learning (ML) that profiles intent rather than single signals

- Low-latency decisions at scale for high-throughput sites

- Operational visibility for security operations center (SOC) workflows

This approach is handy when client-side sensors face privacy constraints or ad-blocker friction.

How It Can Help Track AI Crawlers

Because Netacea leans into server-side behavior, it’s resilient against crawlers that spoof fingerprints or block client telemetry. Traffic gets clustered by intent. That helps you spot AI retrieval patterns quickly and mitigate them with rate controls.
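To show what server-side signals look like, the sketch below groups sessions using only access-log rows, with no client script involved. It is a deliberately crude heuristic and not Netacea's model. The function names, the asset suffix list, and the `min_pages` threshold are assumptions. The underlying idea is that a session that pulls many pages but never loads stylesheets, scripts, or images is more likely a crawler than a browser.

```python
from collections import defaultdict

# File suffixes treated as page assets; an illustrative assumption.
ASSET_SUFFIXES = (".css", ".js", ".png", ".jpg", ".svg", ".woff2")


def profile_sessions(log_rows: list[tuple[str, str]]) -> dict[str, dict[str, int]]:
    """Count page and asset requests per session from (session_id, path) rows."""
    profiles: dict[str, dict[str, int]] = defaultdict(
        lambda: {"pages": 0, "assets": 0}
    )
    for session_id, path in log_rows:
        kind = "assets" if path.lower().endswith(ASSET_SUFFIXES) else "pages"
        profiles[session_id][kind] += 1
    return dict(profiles)


def group_by_intent(
    profiles: dict[str, dict[str, int]], min_pages: int = 5
) -> dict[str, list[str]]:
    """Split sessions into likely crawls and likely human browsing."""
    groups: dict[str, list[str]] = {"likely_crawl": [], "likely_browse": []}
    for session_id, counts in profiles.items():
        pages_only = counts["assets"] == 0 and counts["pages"] >= min_pages
        groups["likely_crawl" if pages_only else "likely_browse"].append(session_id)
    return groups
```

Sessions in the `likely_crawl` group are candidates for the rate controls mentioned above. A production model would weigh many more signals, and cached assets can make real visitors look sparse, so treat the output as a starting point for review.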

Strengths

Why teams shortlist Netacea:

- Very low false positives: Netacea claims a 0.001% false-positive rate and 33 times more effective blocking than alternatives.

- Agentless detection: Server-side detection reduces client integration and avoids visible challenges.

- Behavioral and defensive AI: Continuous model updates adapt to evasive tactics that try to mimic humans.

- Wide scale and coverage: Netacea can scale to handle heavy traffic with real-time mitigation.

- Rapid detection and mitigation: This tool is designed for quick signal-to-response loops.

Netacea is great for teams that want server-side certainty and minimal UX surface area.

Limitations

Account for these when you plan rollout:

- Opaque internals: Like many ML-heavy systems, tuning and audit can feel like a black box.

- Lags against brand-new evasion: New AI crawler types may slip past detection until models retrain.

- High overhead for smaller teams: Cost and operational lift can exceed what smaller sites can manage.

- Signal quality dependence: Behavior models need traffic breadth. Low volume can limit precision.

For high-volume, high-risk properties, Netacea’s approach maps well to SOC processes.

6. Kasada

Kasada focuses on invisible, dynamic defenses that are hard to reverse-engineer and that act before the page load completes.

Key Features

Kasada’s sweet spot is making the attacker’s job expensive:

- Polymorphic client defenses that mutate on each load

- Real-time interrogation without CAPTCHA

- Threat intel and research published regularly for defenders

- API and mobile coverage with minimal operational burden

These layers are meant to outpace bots’ “bypass kits.”

How It Can Help Track AI Crawlers

Kasada aims to spot the automation itself, not just behavior, which helps when AI crawlers spoof fingerprints or run via hardened headless browsers. Detecting automation signals before rendering completes keeps expensive endpoints and dynamic pages out of reach.

Strengths

Where Kasada performs well:

- Dynamic obfuscation: Polymorphic scripts raise the cost of reverse-engineering for attackers.

- Low friction for real users: Invisible challenges reduce UX noise.

- Pre-render detection lowers origin load: Early blocking reduces compute, bandwidth, and downstream risk.

- Active research and intel sharing: It helps teams stay ahead on threat insights.

That model suits brands that fight persistent data scrapers and scalpers.

Limitations

Here are a few things to weigh:

- Complexity versus manageability: Dynamic client defenses need disciplined change control and quality assurance across devices.

- False positive risks: Aggressive interrogation can backfire if tuned too tightly, creating false positives.

- Privacy tool conflicts: Some client-side signals may clash with privacy tooling or ad blockers, and you’ll need fallbacks.

Kasada is a good fit if you want to stop scraping before it consumes resources.

7. HUMAN

HUMAN Security (formerly White Ops) protects against digital fraud and abuse across advertising, marketing, and e-commerce. It boasts massive telemetry with more than 20 trillion interactions weekly across 3 billion unique devices.

Key Features

Teams pick HUMAN when they need coverage across the full customer journey:

- Large-scale telemetry for high-confidence decisions

- Application bot defense for web, mobile, and APIs

- Ad-fraud and malvertising protection via MediaGuard and related products

- Threat tracking and investigations to follow adversaries over time

It’s a strong option if you need a unified defense and fraud context.

How It Can Help Track AI Crawlers

HUMAN’s large-scale signal graph helps correlate anomalies across industries and device populations, making it easier to detect new AI agents.

Strengths

Practical benefits of HUMAN:

- Top-tier recognition and references: HUMAN regularly appears as a leader in industry assessments.

- Telemetry scale: Billions of devices and trillions of interactions weekly improve anomaly detection.

- Multimodal detection: Combining behavior, device, and threat intel makes simple evasion harder.

This is compelling if your risk spans ads, apps, and APIs across multiple brands or regions.

Limitations

Set expectations around:

- False positives/negatives: At a massive scale, tuning never ends.

- Integration complexity: Integration across wide attack surfaces can take time, depending on your tech stack.

- Higher cost: Enterprise pricing typically targets large properties.

However, for global teams chasing cross-channel integrity, HUMAN’s reach is hard to match.

Turn Bot Traffic Into a Controlled Variable

AI crawlers changed the game by redirecting discovery and normalizing high-speed scraping. So, how should you choose the best bot detection tool?

- Start at the edge for quick wins.

- Layer behavioral and device intelligence.

- Choose enforcement that maps to business goals.

- Validate with your traffic.
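Validating with your own traffic comes down to two numbers: how many real users a tool blocks, and how many bots it catches. The sketch below computes both from a labeled sample. The function name and the sample figures are hypothetical, and it assumes you have some ground truth (for example, manually reviewed sessions) to compare against.

```python
def evaluate(decisions: list[tuple[bool, bool]]) -> dict[str, float]:
    """Score a tool from (was_blocked, was_actually_bot) pairs."""
    human_outcomes = [blocked for blocked, is_bot in decisions if not is_bot]
    bot_outcomes = [blocked for blocked, is_bot in decisions if is_bot]
    return {
        # Share of real users who were wrongly blocked.
        "false_positive_rate": (
            sum(human_outcomes) / len(human_outcomes) if human_outcomes else 0.0
        ),
        # Share of bots that were correctly blocked.
        "detection_rate": (
            sum(bot_outcomes) / len(bot_outcomes) if bot_outcomes else 0.0
        ),
    }


# Made-up trial: 10 bot sessions (8 blocked), 100 human sessions (1 blocked).
trial = (
    [(True, True)] * 8
    + [(False, True)] * 2
    + [(True, False)] * 1
    + [(False, False)] * 99
)
scores = evaluate(trial)
```

For this made-up trial the false-positive rate is 1 in 100 and the detection rate is 8 in 10. Running the same calculation per vendor during a proof of concept gives you a like-for-like comparison grounded in your own traffic rather than published claims.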

About the Author

Hannah Hicklen

Content Marketing Manager at Clutch

Hannah Hicklen is a content marketing manager who focuses on creating newsworthy content around tech services, such as software and web development, AI, and cybersecurity. With a background in SEO and editorial content, she now specializes in creating multi-channel marketing strategies that drive engagement, build brand authority, and generate high-quality leads. Hannah leverages data-driven insights and industry trends to craft compelling narratives that resonate with technical and non-technical audiences alike.
