Updated January 2, 2025

The arrival of ChatGPT in 2022 was a watershed moment in our relationship with artificial intelligence (AI). But is the emergence of generative AI a step too far in the battle to protect our privacy online?

It’s difficult to overestimate the potential that generative AI has. With a global market size set to surpass $200 billion by 2032, and OpenAI’s GPT-4 large language model (LLM) recently scoring in the top 10% of test takers on a simulated US bar exam, the GenAI boom is already gathering momentum at an extraordinary rate.

But while there’s plenty to be excited about when it comes to generative AI, the technology may already be too smart for its own good when it comes to protecting the privacy of its users.

Although the generative AI revolution is still in its infancy, concerns over copyright infringement, inherent bias within generative algorithms, and the emergence of deepfakes and other manipulative false content have already become serious discussion points.

Artificial intelligence may be booming, but is it time we took measures to safeguard our personal information against the rise of the machines?

Privacy is one of the key issues emerging from the growth of generative AI. When GenAI programs and the LLMs that support them aren’t built and trained with appropriate privacy safeguards, they can be vulnerable to a range of privacy risks and security threats.

Generative AI works by creating or ‘generating’ brand new data for users. This makes it imperative that the data it generates doesn’t contain sensitive information that could pose a risk to individuals.

Because many GenAI models are trained on vast volumes of data gathered from many online sources, some of which contain personal information, risks can emerge as soon as a model fails to follow the privacy policies it was programmed with.

Users are already wising up to existing flaws in generative AI chatbots and learning the prompts that can cause LLMs to ignore their programming. The recent example of a developer on X prompting a car dealership’s chatbot into offering a 2024 Chevrolet Tahoe for $1 highlights the serious vulnerabilities still present in the technology.

In addition, exfiltration attacks, in which hackers steal training data from chatbots, could hand cybercriminals large datasets containing the private information of individuals.

Fortunately, enterprises and regulators alike are wise to the privacy flaws that generative AI can pose for users. Proposals like banning or controlling access to GenAI models, prioritizing synthetic data, and operating private LLMs have become commonplace.

Given the exponential growth we’ve witnessed throughout the generative AI landscape, the only solution will be to discover new ways to live with AI and mitigate its security threats.

At an enterprise level, the most common approach, offered by providers like Google, Microsoft, AWS, and Snowflake, is to run LLMs privately on an organization’s own infrastructure.

We can see this in action with Snowflake’s Snowpark Model Registry, which allows users to run an open-source LLM within a container service using their Snowflake account, so that models can work with proprietary data.

Meta was quick to confirm that its Llama 2 LLM was trained without using Meta user data.

This helped to quell concerns that Facebook user information had been used, amid wider public distrust of the company’s commitment to privacy.

Despite measures to control the power of generative AI, the race to develop the most efficient LLMs may lead to rushed launches of new models or to cost-cutting in the form of under-trained releases.

The generative AI boom won’t be going away soon, so it’s worth taking a few measures to ensure that your data is as safe as possible when it comes to living alongside GenAI.

All sophisticated large language models should have accessible privacy policies like the ones for OpenAI’s ChatGPT and Google’s Bard (since renamed Gemini). Although reading them can take time, doing so will clarify exactly what information these models collect and how they use it.

You can also use privacy policies to better understand what data is at risk and whether you should take extra measures to keep your information safe.

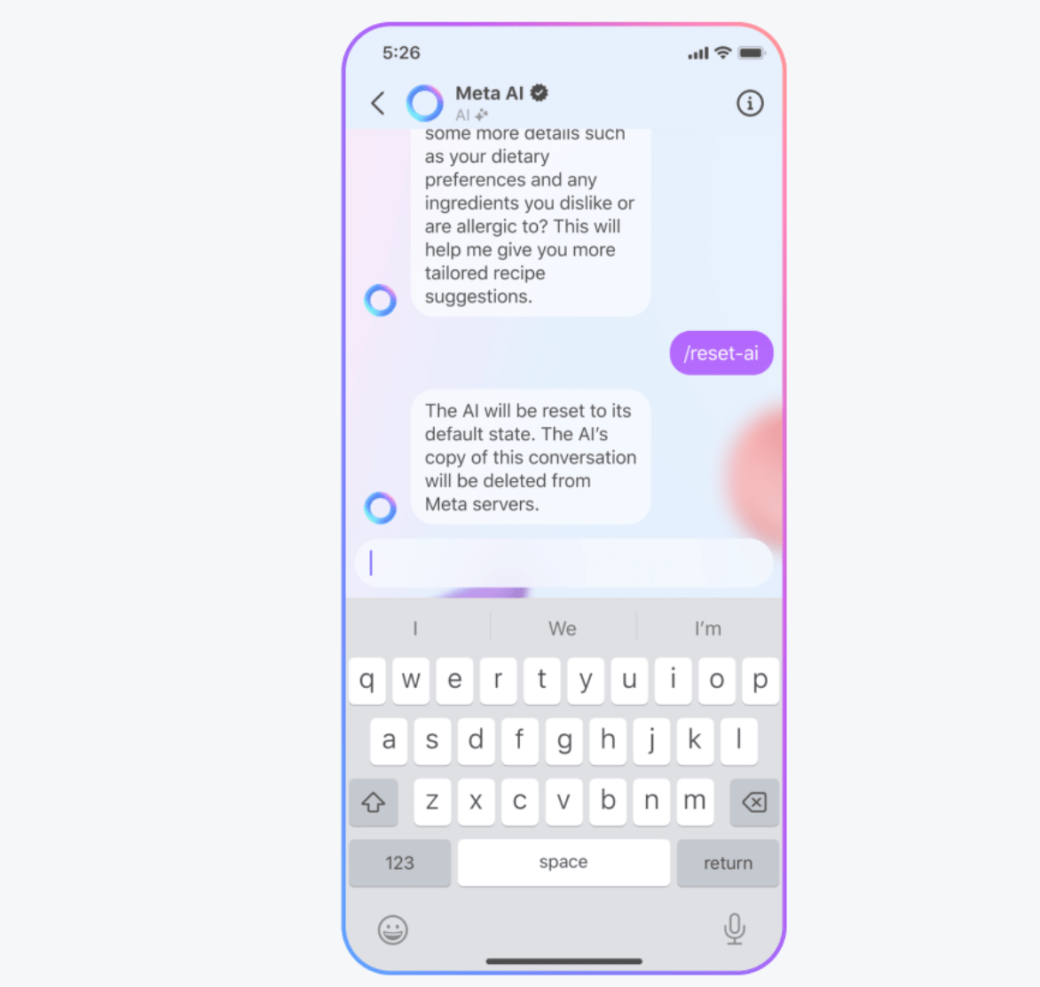

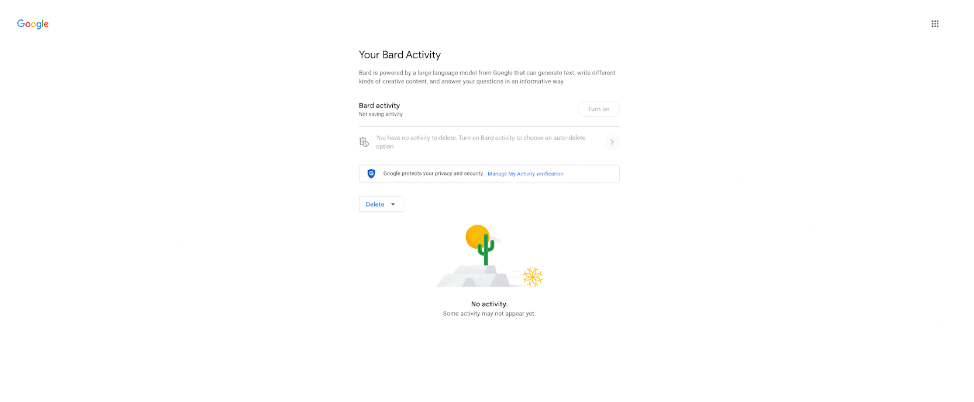

If you’re happy to use your chosen LLMs based on their privacy policies, you can still opt out of having your conversations used to train them.

This means that anything you overshare won’t be used to improve the answers the model gives to other users’ prompts.

Opting out is straightforward, with the likes of Google Bard and ChatGPT offering a simple toggle to turn this on or off.

It’s hard to keep track of all the data used to train generative AI models, and it’s understandable if you want your online privacy to remain protected at all times.

Using a VPN, you can add an extra layer of privacy to your online browsing and reduce how much of the data you generate is linked to your online profile.

VPNs encrypt the traffic between your device and the VPN server and route it through a server that isn’t linked to your IP address, making it much harder for crawlers, hackers, or surveillance software to track your behavior.

The benefits of a VPN extend beyond protection from surveillance, bringing advantages for entertainment and for browsing beyond geographical restrictions.

VPNs have also helped make streaming devices like Amazon’s FireStick a globally popular tool for accessing content from around the world.

Pairing a VPN with a FireStick has become a common way for international viewers to overcome geo-locking on major services like Netflix, Amazon Prime, and Disney+.

Streaming shows how VPN technology does more than uphold user privacy: it can also open up a wider range of content while keeping the connection private and secure.

The dangers that generative AI poses for children are clear. Although LLMs can be great educational tools for pupils, the risks posed by disinformation and oversharing could lead to a severe security threat for your child or your home.

Be sure to communicate the risks of generative AI to your children, and ensure that they use only trusted generative AI models where possible.

It’s also worth checking the laws and guidelines on age-appropriate use that apply to your children’s use of models like ChatGPT. In the UK and California, age-appropriate design code laws require online services likely to be accessed by children under 18 to carry out a data protection impact assessment of the privacy risks they pose.

While we’ve covered many of the key privacy concerns over the use of generative AI models, it’s worth remembering that the technology is set to be a force for good around the world in helping us to troubleshoot problems online, design and create content on an unprecedented scale, and automate many repetitive and monotonous tasks.

Privacy will undoubtedly take center stage as generative AI is adopted across countless industries, and international regulators can be expected to help develop a framework that keeps GenAI models productive, helpful, and secure.

The generative AI revolution is gathering momentum, and developing privacy-first models is the key to realizing the full potential of this transformative new technology.