Updated August 19, 2025

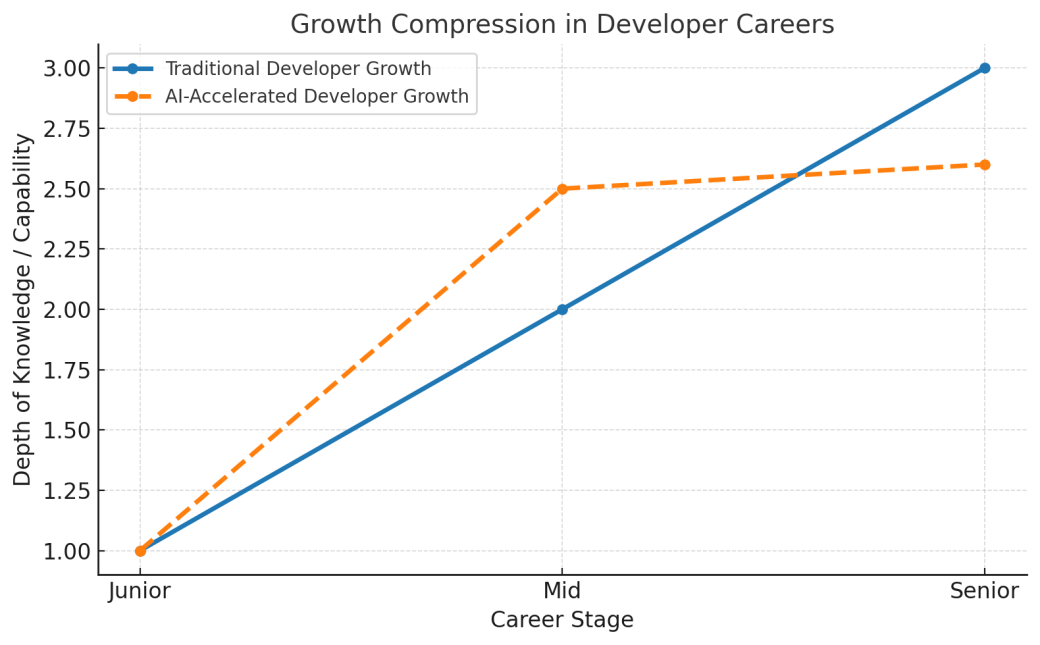

Everyone says AI is accelerating software development. And it is — at least on the surface. But something deeper is shifting inside tech teams: developers are moving faster, while learning less.

Over the past year, AI adoption has been quietly eroding the learning journey that turns beginners into leaders.

Junior developers can now build things they would’ve struggled with even a few years ago. With AI copilots, code suggestion tools, and chat-based debugging, they’re immediately productive. But when asked to explain their choices, retrace their logic, or scale their own solution — the gaps appear.

In a review session with one of our client teams, a junior developer presented a well-structured test suite generated with an AI tool. But when the team started discussing edge cases and failure handling, it became clear that while the tests passed, the developer didn't understand what they were meant to verify.

This isn’t an isolated case. Across multiple teams, we’ve seen a recurring pattern: developers are skipping over the middle of the learning curve — the messy, iterative part where knowledge is built. We call this growth compression.

Once, I observed a team build a frontend MVP with a prompt-to-code workflow, going from a Figma file to a working interface in under 48 hours. The speed was impressive. But when the developers were asked to extend the component or integrate it into a broader system, they struggled. The core architecture had been shaped by the AI, not by the team. Without that foundational involvement, tracing the logic and adapting the design became far more difficult.

In another case, I watched an AI refactor legacy backend functions. Most of it worked. But a subtle variable change introduced a silent logic bug — the kind that hides until it causes issues in production. It wasn’t about the AI failing. It was about the lack of deep review and contextual understanding on the human side.
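To make that failure mode concrete, here is a minimal, hypothetical sketch in Python. The functions, the discount rule, and the inputs are invented for illustration; they are not the code from the refactor described above. It shows one cheap safeguard: a characterization check that runs the legacy and refactored versions side by side on the same inputs and flags every case where they disagree.

```python
# Hypothetical example: the function names and the discount rule are invented for illustration.

def legacy_total(subtotal: float, shipping: float, is_member: bool) -> float:
    """Original logic: members get 10% off when the subtotal (before shipping) is at least 100."""
    discount = 0.10 if is_member and subtotal >= 100 else 0.0
    return round(subtotal * (1 - discount) + shipping, 2)


def refactored_total(subtotal: float, shipping: float, is_member: bool) -> float:
    """Tidier-looking rewrite with a subtle variable change: the threshold now
    checks the amount including shipping, so some members get an unearned discount."""
    order_amount = subtotal + shipping
    discount = 0.10 if is_member and order_amount >= 100 else 0.0
    return round(subtotal * (1 - discount) + shipping, 2)


def find_mismatches(old, new, cases):
    """Characterization check: call both versions with identical arguments and
    return (args, old_result, new_result) for every case where they differ."""
    mismatches = []
    for args in cases:
        before, after = old(*args), new(*args)
        if before != after:
            mismatches.append((args, before, after))
    return mismatches


# Cover boundaries, not just typical values: this bug only shows up near the threshold.
cases = [
    (subtotal, shipping, member)
    for subtotal in (50, 95, 100, 150)
    for shipping in (0, 10)
    for member in (True, False)
]

for args, before, after in find_mismatches(legacy_total, refactored_total, cases):
    print(f"{args}: legacy={before} refactored={after}")
```

Both versions agree on a typical order such as a 150 subtotal, which is exactly why a change like this can slip through a quick review. The side-by-side check surfaces the one boundary case (a 95 subtotal plus 10 shipping) where behavior silently changed. It only tells you that something changed, though; deciding which behavior is correct still takes someone who understands the original intent.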

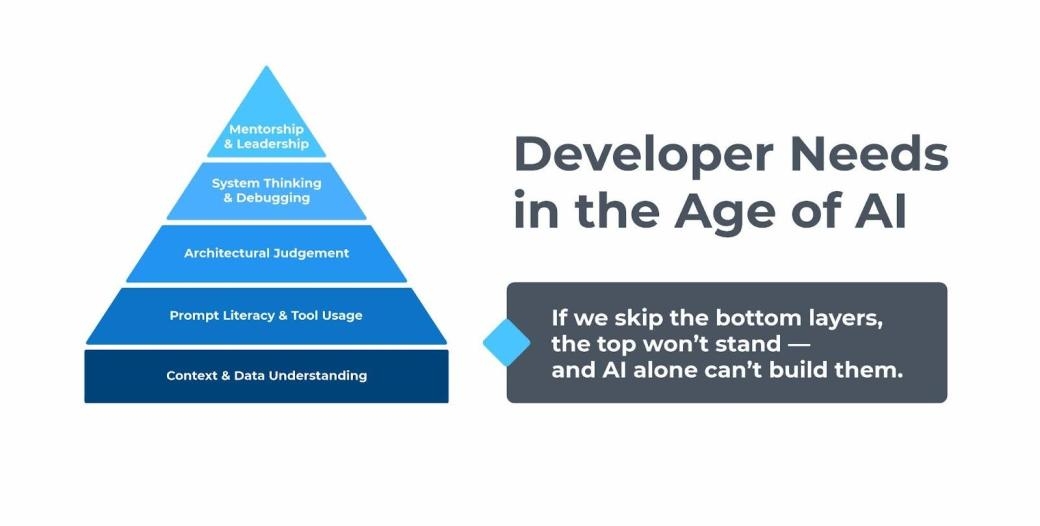

These moments reinforced something important: AI isn’t a passive tool — it’s becoming an active contributor. And like any contributor, it needs supervision, guidance, and sometimes... correction.

Historically, developers grew by debugging, breaking things, writing documentation, and discussing trade-offs. Now, AI often acts as a shortcut. But when engineers skip the painful parts of development — the ones that build intuition — they may reach seniority in title without the experience to match.

This lack of context isn’t just an individual issue. It affects teams. When no one fully understands why something was written a certain way, it becomes harder to refactor, extend, or trust. Decisions cost more, while accountability becomes fuzzier.

So what happens when your codebase needs real change — and no one on the team has the scars to make it?

You get:

One of the most underappreciated consequences of AI adoption is the erosion of junior roles — not in headcount, but in depth. We’ve seen developers start shipping code on day one, thanks to tools like GitHub Copilot or GPT-based assistants. But when the learning curve flattens too early, it doesn’t just limit personal growth — it threatens the future of engineering teams.

Junior roles aren’t just about shadowing seniors or handling simple tickets. They’re where developers:

If those roles become shallow, we won’t end up with stronger mid-levels — just developers who can prompt well but struggle when complexity hits. AI might help them ship today, but it won’t prepare them for scaling, debugging under pressure, or guiding others.

This isn’t just a pipeline problem — it’s a risk to resilience, quality, and innovation. And it’s already showing up in teams that struggle to scale their engineering leadership. The answer isn’t removing junior work. It’s redefining how we teach, grow, and mentor developers in an AI-rich environment.

To prevent AI from quietly eroding the learning journey, we recommend a few practical strategies that we've seen work in real-world settings:

These small shifts can protect long-term capability. They don’t require major restructuring — just deliberate habits that keep understanding in the loop.

The biggest misconception we see is that AI is a plug-and-play solution. It’s not. It behaves more like a new team member — helpful, fast, but inconsistent unless properly guided.

That’s why implementation matters. AI should be introduced with structure, not left to operate in a vacuum. Otherwise, it reinforces shortcuts instead of strengthening systems.

Teams need to build internal alignment around:

When this isn’t clear, AI becomes a crutch. When it is, AI becomes a force multiplier.

It’s tempting to measure AI success by speed alone. But at what cost?

Without human growth, even the most efficient team becomes fragile. We’ve seen developers ship entire components using AI — only to struggle with basic modifications weeks later. That’s not a productivity win. It’s deferred technical debt.

Gartner reports that 60% of AI projects are expected to fail due to lack of ROI. We believe many of those failures will come from ignoring the human side of software. Good engineering isn't just about tools. It's about understanding.

In our experience, the long-term value of AI comes from combining it with:

Teams are putting more effort into foundational systems — not just so AI can perform better, but so developers can build with clarity. Our goal isn’t just to ship fast. It’s to grow smart.

We don’t need to eliminate junior roles. We need to rethink them. We need to design them for a world where AI is present — but not dominant.

AI can write your code. But when things go sideways, AI won’t take the heat on the incident call. You will.

Let’s build environments where AI enhances growth, not replaces it. Where developers still gain depth, make decisions, and learn by doing — not just by prompting.

Let’s build the future, not just automate the present.