Updated July 22, 2025

AI adoption by healthcare organizations offers enormous benefits; however, the IT infrastructure may come under pressure. These five principles will guide healthcare CIOs and CTOs through the AI adoption process.

Adopting artificial intelligence (AI) offers enormous potential for healthcare organizations. Embracing AI-powered assistants enables physicians to deliver better care more efficiently, thereby enhancing patient outcomes. At the same time, applying AI can transform operational productivity.

However, AI adoption faces enormous challenges, especially in healthcare.


A recent survey found that two-thirds of enterprise AI initiatives struggled due to infrastructure limitations. Building AI infrastructure in healthcare is even more onerous thanks to privacy and security regulations, not to mention concerns for patient health.

By following these best-practice principles, healthcare enterprises can develop the data infrastructure they need to realize AI’s full potential.

Your operational infrastructure cannot handle AI model development, and even your big data analytics systems lack the right storage and compute architecture.

AI development runs vast amounts of data through iterative, query-intensive processes that your operational infrastructure cannot handle.

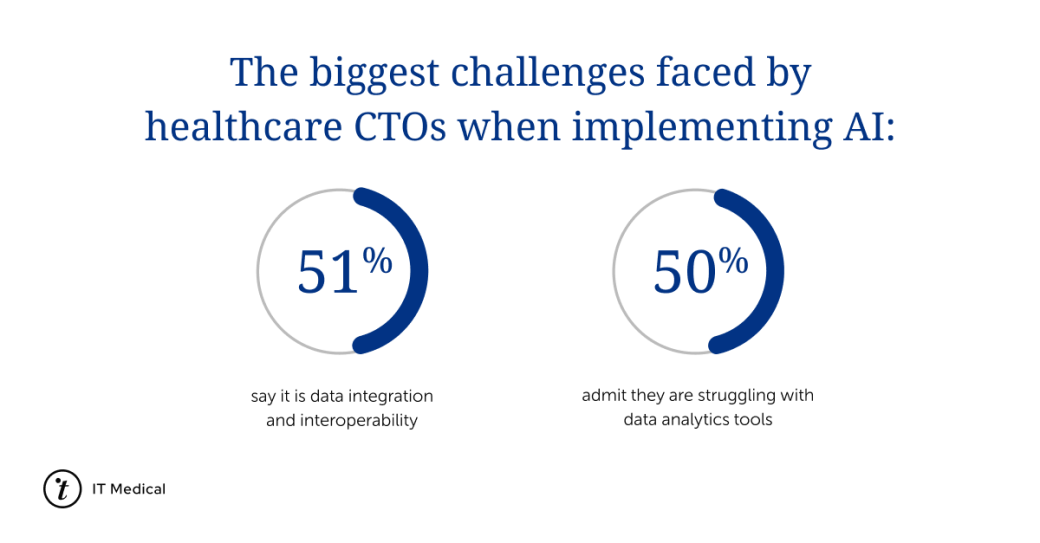

According to a survey, half of healthcare CTOs admit that the biggest challenges they face when implementing AI are integrating data and integrating data analytics tools.

Healthcare organizations will need a data lake or data warehouse populated with copies of everything stored in their EHR and other operational databases. Ingesting this data in a form that the AI experts can use requires complex extract, transform, and load (ETL) pipelines.

AI models use iterative matrix and tensor calculations at a scale beyond the capacity of a data center’s general-purpose CPUs. Building sophisticated AI models within a reasonable time requires systems designed around specially optimized GPUs interconnected to maximize parallel processing.

Cloud computing platforms like Azure and Google Cloud offer AI development services. They can be appropriate choices for more general AI applications like AI chatbots. More specialized AI applications, especially in healthcare, may require investing in on-premises infrastructure.

An on-premises approach avoids the costs of moving enormous datasets in and out of a cloud platform. Similarly, latency expectations in healthcare favor in-house systems. Governance may also dictate building your system to guarantee data localization and regulatory compliance.

These best practices will help streamline your organization’s journey to AI-driven productivity and patient care.

Success depends on the leadership team’s visible commitment. Lower-level management and frontline workers often greet potentially disruptive changes with skepticism.

Their doubts are even stronger for AI initiatives. People steeped in AI are confident in its benefits. Everyone else shares a very different experience:

The Pew Research Center observed this gap between AI experts and the public. While more than half of the experts surveyed believe AI will positively impact the United States, only seventeen percent of the American public shares that opinion.

Address this skepticism directly by showing clear, consistent leadership and making AI development a collaborative process between your AI team and the people who will ultimately use these new tools. Include stakeholders throughout development, from setting requirements to validating the finished models.

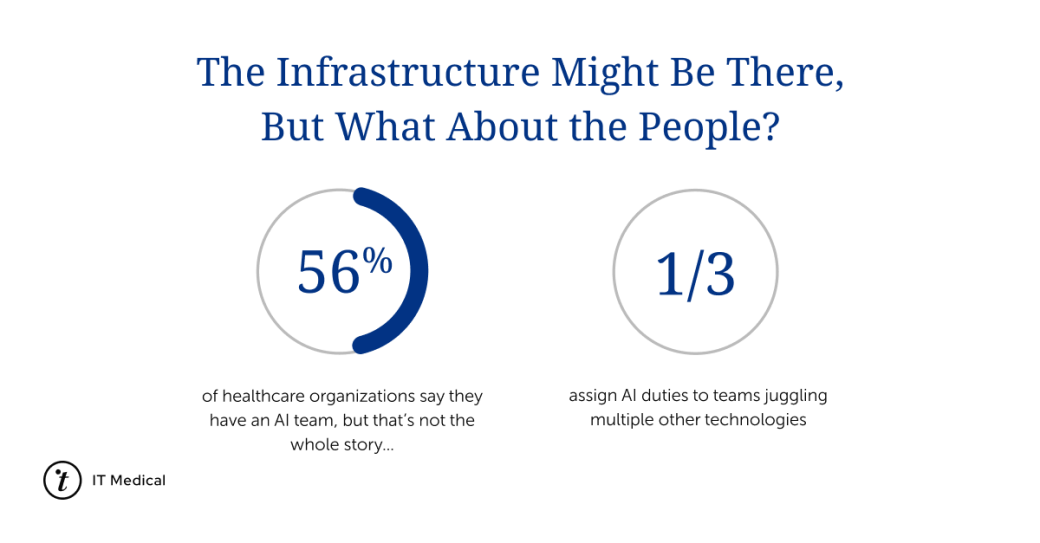

Also, ensure that you create a dedicated AI team to oversee the implementation of artificial intelligence.

Shockingly, 56% of healthcare organizations claim to have an AI team, but one-third assign AI duties to teams that are already juggling multiple other technologies.

You may have already spotted some of the red flags an AI infrastructure raises:

Yet HIPAA, GDPR, ISO 27001, and other frameworks apply just as much to your new AI systems as they do to your existing systems.

In fact, you should apply stricter data privacy and cybersecurity measures. Consolidating vast quantities of patient information into a single repository turns your AI infrastructure into a lucrative target for cybercriminals. And it may be easier to breach than you think.

Least-privilege access control policies in your regular IT systems minimize the impact of any compromised credential. Frontline users can only access data they are authorized to see. On the other hand, your AI team accesses more data and sources than any frontline user, so a stolen password can cause a bigger breach. And don’t forget that this risk isn’t limited to people.

The model itself is the ultimate super user: it accesses everything.

Making the data in your AI infrastructure less useful to hackers can mitigate a breach’s impact. Anonymization is a good first step. At a minimum, ETL pipelines should replace patient identifiers while ingesting data from your healthcare databases. Ideally, the pipelines should remove or replace all identity data to minimize the risk of re-identification.
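As a rough illustration of replacing identifiers during ingestion, the sketch below swaps a patient ID for a keyed hash and drops direct identifiers. The field names (`patient_id`, `name`, `address`, `phone`, `ssn`) and the function name are hypothetical, and real EHR exports will differ. Note that this is pseudonymization rather than full anonymization: remaining fields such as dates or rare diagnoses can still allow re-identification.

```python
import hashlib
import hmac

# Hypothetical field names for illustration; a real EHR schema will differ.
DIRECT_IDENTIFIERS = {"name", "address", "phone", "ssn"}


def pseudonymize_record(record: dict, secret_key: bytes) -> dict:
    """Return a copy of the record with direct identifiers dropped
    and the patient ID replaced by a keyed hash."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    # Keyed hash (HMAC-SHA256): the same patient always maps to the same
    # token, but the token cannot be recomputed without the secret key.
    token = hmac.new(
        secret_key,
        str(record["patient_id"]).encode("utf-8"),
        hashlib.sha256,
    ).hexdigest()
    cleaned["patient_id"] = token
    return cleaned
```

Keeping the token stable per patient lets the AI team join records across sources, while the key itself stays outside the AI environment.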

AI implementation is not a one-and-done project. It requires continuous improvement so the model can evolve. As a result, your infrastructure’s resource demands will never go away.

Plan for the inevitable expansion of your storage systems since you’ll train new models on the original data, updates from your data sources, plus new sources. Similarly, you will expand or replace compute resources to leverage the latest AI processing technologies.

Even if building on-premises, don’t rule out a future in the cloud. The AI sector is still ramping up. Services and business practices that are lacking today will become more varied and robust. Design your AI infrastructure to simplify future cloud migrations.

We already discussed how the query-intensive demands of AI development require copying data from healthcare operations into a dedicated repository. Moving data like this creates a risk that ETL pipelines could slightly alter the data feeding model development.

These errors can appear because healthcare databases and transactional systems do not use the same file and table formats as the data lakes and data warehouses at the other end of the pipeline. The differences can be hard to spot if the source or destination systems are proprietary platforms.
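One way to catch some of these discrepancies is to fingerprint each record before and after it passes through the pipeline. The sketch below is a minimal example under assumed names: `record_id` is a hypothetical key column, and rows are plain dictionaries. It only makes sense for fields the pipeline is supposed to leave unchanged, so fields that are deliberately transformed or anonymized would need to be excluded first.

```python
import hashlib
import json


def row_fingerprint(row: dict) -> str:
    """Hash a row's canonical JSON form so equal content gives equal hashes."""
    canonical = json.dumps(row, sort_keys=True, separators=(",", ":"), default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def find_altered_rows(source_rows, loaded_rows, key="record_id"):
    """Return the keys of rows that differ between source and destination,
    including rows that are missing on either side."""
    source = {r[key]: row_fingerprint(r) for r in source_rows}
    loaded = {r[key]: row_fingerprint(r) for r in loaded_rows}
    all_keys = source.keys() | loaded.keys()
    return sorted(k for k in all_keys if source.get(k) != loaded.get(k))
```

Because the fingerprint is type-sensitive, a value that arrives as the integer `37` instead of the float `37.0` is flagged, which is the kind of subtle format shift that is easy to miss by eye.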

What happens when ETL pipelines introduce subtle changes in the training data? Will the model produce correct results?

There’s no way to answer those questions definitively. Your AI team cannot assume their model will work in the wild exactly as it does in development.

Before rolling out the AI system to end users, the team should validate their models quietly. That means connecting the model to operational systems without letting the results enter healthcare decision-making processes. Instead, have your subject matter experts compare the model’s recommendations to the decisions physicians and other users made from the same data.
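A quiet validation run like this can be summarized with a simple agreement measure. The following sketch assumes each case has been reduced to a pair of labels, the model's recommendation and the clinician's actual decision; the function name and label values are hypothetical. It is a starting point for expert review, not a substitute for it.

```python
from collections import Counter


def shadow_agreement(cases):
    """cases: iterable of (model_recommendation, clinician_decision) pairs.

    Returns the agreement rate and a count of each kind of disagreement.
    """
    cases = list(cases)
    if not cases:
        raise ValueError("no shadow-mode cases to compare")
    # Tally every (model, clinician) pair where the two differ.
    disagreements = Counter((m, c) for m, c in cases if m != c)
    agreed = len(cases) - sum(disagreements.values())
    return agreed / len(cases), disagreements
```

The disagreement counts matter as much as the headline rate, since they show subject matter experts which kinds of cases to examine first.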

Basing your new AI solutions on the same interoperability standards your healthcare infrastructure already uses can further minimize risk. Industry standards like HL7’s Fast Healthcare Interoperability Resources (FHIR) or Digital Imaging and Communications in Medicine (DICOM) help ensure data quality when disparate systems exchange data. Integrating these standards into your AI tools also lets your AI teams avoid reinventing the wheel when engineering their data pipelines.
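To give a sense of what working with a standard format looks like, here is a minimal sketch that pulls a few fields out of a FHIR Observation resource serialized as JSON. The function name and the flattened output shape are assumptions for illustration, and a production pipeline would more likely rely on a maintained FHIR library and handle many more cases (multiple codings, non-quantity values, and so on).

```python
import json


def extract_observation(resource_json: str) -> dict:
    """Flatten a few fields from a FHIR Observation into a plain dict."""
    resource = json.loads(resource_json)
    if resource.get("resourceType") != "Observation":
        raise ValueError("expected a FHIR Observation resource")
    # Simplification: only the first coding entry is read.
    coding = resource["code"]["coding"][0]
    quantity = resource.get("valueQuantity", {})
    return {
        "subject": resource.get("subject", {}).get("reference"),
        "code_system": coding.get("system"),
        "code": coding.get("code"),
        "value": quantity.get("value"),
        "unit": quantity.get("unit"),
        "effective": resource.get("effectiveDateTime"),
    }
```

Because the structure is defined by the standard rather than by each vendor, the same extraction logic can be reused across source systems that expose FHIR.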

Traditional software development embeds logic in code: if patient X has had a temperature Y for a certain number of days, then an alert is sent.
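A rule of that kind might be sketched as follows. The threshold of 38.0 °C and the three-day window are placeholder values chosen for illustration, not clinical guidance, and the function name is hypothetical.

```python
def fever_alert(daily_temps_c, threshold_c=38.0, min_days=3):
    """Return True if the most recent `min_days` daily readings
    are all at or above the threshold."""
    if len(daily_temps_c) < min_days:
        return False
    return all(t >= threshold_c for t in daily_temps_c[-min_days:])
```

Every condition that triggers the alert is visible in the code, so anyone reviewing it can see exactly why an alert fired.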

AI is different. Models assess hundreds or thousands of variables in an internal logic that may be completely opaque to outside observers. For example, whether a doctor is working a day or night shift might influence the model’s recommendations.

One implication of AI’s opacity is how deviations from the model’s training conditions could impact its performance. The model’s training data is a snapshot in time, but any aspect of hospital operations can change over time. For example:

Inevitably, the AI system will become less reliable as actual practice drifts from the original conditions.

Your AI team must continuously monitor the system’s performance, gather user feedback, and capture changes in practice to feed into the training data for the model’s next iteration.
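One common way to quantify how far live inputs have moved from the training data is the population stability index (PSI), computed per input variable. The sketch below is a simplified version using equal-width bins taken from the baseline range; the function name, bin count, and the small floor for empty bins are assumptions. Rules of thumb for what PSI value signals meaningful drift vary between teams, so treat any threshold as something to calibrate against your own data.

```python
import math


def population_stability_index(baseline, current, bins=10):
    """PSI between two numeric samples, binned over the baseline's range."""
    lo, hi = min(baseline), max(baseline)
    # Fall back to a width of 1.0 if all baseline values are identical.
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp values outside the baseline range into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    p, q = proportions(baseline), proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))
```

Identical distributions give a PSI of zero, and the value grows as the current sample shifts away from the baseline, which makes it a convenient number to track on a monitoring dashboard between retraining cycles.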

Several trusted companies develop custom healthcare AI solutions, from virtual assistants that reduce wait times and improve patient satisfaction to predictive analytics solutions that harness petabytes of data to support personalized medicine.