Updated December 18, 2025

AI agents and LLMs are already shaping what does and doesn’t get seen online. They scan, summarize, and filter information before a user even clicks. That means in a world increasingly powered by automation, your website and content might be invisible if it isn’t optimized for machine readers. This article explores the rise of agentic web optimization, why it matters, what’s changing, and how to prepare your site for visibility in the age of AI.

AI tools and search engines summarize your site, make choices without your input, and direct traffic elsewhere. SEO is more important than ever and continues to evolve.

Many marketers may still be focusing on rankings or traffic. They could be adjusting meta tags, rewriting introductions, testing page speed, and auditing internal links. These tasks are still critical, but there are additional opportunities when optimizing for AI agents or LLMs.

The landscape has changed. LLMs process your content in ways that traditional search engines don't. Perplexity surfaces competitors who organize their content clearly. ChatGPT bypasses cluttered pages and pulls summaries from other sources or unique passages from given pages. Google's AI Overviews condense your long-form content into short paragraphs or just brand mentions and direct users to a listing page you can't control.

This trend is not on the horizon; it is already here, and it’s been here for a while.

If autonomous agents don’t process your site, you may not be slipping in traditional SERP rankings, but you could be excluded from the decision-making process many people use. Enter LLMs…

I see a lot of chatter on LinkedIn where I believe people are getting this wrong. Some folks think SEO is dead, but in my opinion, and based on my experience, it's not; in fact, it's far from it.

A strong SEO foundation is now the baseline requirement. To appear in LLMs, your website needs a clean structure, clear meaning, crawlable content, and a consistent focus on topics within your industry. This makes your site usable for both humans and LLMs/Agents. If you want to appear in LLM responses, you should NOT skip traditional SEO.

If your content cannot be crawled, it cannot be summarized. If your structure is poor, it can't be cited. If your brand lacks fresh reviews, it loses trust. (This is especially true in my experience with agentic web optimization.)
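One quick way to sanity-check crawlability is to test your robots.txt rules against the user-agent tokens that AI crawlers announce. The sketch below uses Python's standard `urllib.robotparser`. The robots.txt content is a made-up sample, and the agent tokens (`GPTBot`, `PerplexityBot`, `ClaudeBot`) are illustrative assumptions; check each vendor's documentation for the names currently in use.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; swap in your site's real file.
SAMPLE_ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /admin/
"""

# Illustrative AI crawler tokens (assumption: verify against vendor docs).
AI_AGENTS = ("GPTBot", "PerplexityBot", "ClaudeBot")


def crawl_access(robots_txt: str, url: str, agents=AI_AGENTS) -> dict:
    """Return {agent: True/False} for whether each agent may fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    results = crawl_access(SAMPLE_ROBOTS_TXT, "https://example.com/blog/post")
    for agent, allowed in results.items():
        print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

In this sample, the blanket `Disallow: /` for one agent blocks it from every page, while the other agents fall back to the wildcard rules. A rule like that is easy to leave in place by accident, which is why it is worth testing explicitly.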

AI agents seek usable, clear, and verifiable content. Effective SEO is essential; it’s still the foundation. This is even more important when LLMs use search in their outputs.

The person behind the screen isn’t doing the research; LLM agents are, based on the user's prompts.

AI systems browse, filter, compare, and summarize content to help users find answers and act faster. You are writing not just for people but for the systems that determine which content to display.

These systems do not act like people. They don’t scroll, explore your navigation, or guess your intent. However, they do make up a lot of random nonsense from time to time. This is often referred to in our industry as hallucinations.

They scan for structure, clarity, and content that expresses intent. If your content doesn’t deliver this right away, they move on without notice. You will lose visibility and might not understand why.

These systems want structured and clear content. Use straightforward headlines, concise summaries, a semantic approach, and machine-readable markup such as structured data.
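To make "machine-readable markup" concrete, here is a minimal sketch that builds a schema.org `Article` block as JSON-LD, the format commonly embedded in a `<script type="application/ld+json">` tag. The function name and the field values are hypothetical, and the handful of properties shown is only a small subset of what schema.org defines.

```python
import json


def article_json_ld(headline: str, author: str, date_modified: str, description: str) -> str:
    """Build a JSON-LD <script> tag describing an article (illustrative subset of fields)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "dateModified": date_modified,
        "description": description,
    }
    # Escape "</" so a value can never close the surrounding <script> tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


if __name__ == "__main__":
    # Placeholder values for illustration only.
    print(article_json_ld(
        headline="Preparing your site for AI agents",
        author="Jane Doe",
        date_modified="2025-12-18",
        description="A short, plain-language summary of the page.",
    ))
```

Generating the block from the same data that renders the page helps keep the markup and the visible content in sync.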

If your site resembles a brochure full of generic headlines and big “walls” of text-only content, software looking for clarity may not utilize it.
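A rough way to spot "walls" of text is to count how many words sit under each heading. The sketch below does that with Python's standard `html.parser`. The 300-word threshold is an arbitrary assumption rather than a published limit, and the class and function names are made up for illustration.

```python
from html.parser import HTMLParser


class SectionWordCounter(HTMLParser):
    """Count words between headings. Note: text inside <script>/<style> is also counted."""

    HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self):
        super().__init__()
        # Each section is [heading text, word count].
        self.sections = [["(before first heading)", 0]]
        self._in_heading = False

    def handle_starttag(self, tag, attrs):
        if tag in self.HEADINGS:
            self._in_heading = True
            self.sections.append(["", 0])

    def handle_endtag(self, tag):
        if tag in self.HEADINGS:
            self._in_heading = False

    def handle_data(self, data):
        if self._in_heading:
            self.sections[-1][0] += data.strip()
        else:
            self.sections[-1][1] += len(data.split())


def long_sections(html: str, max_words: int = 300) -> list:
    """Return (heading, word_count) for sections longer than max_words."""
    counter = SectionWordCounter()
    counter.feed(html)
    counter.close()
    return [(title, words) for title, words in counter.sections if words > max_words]
```

Sections that exceed the threshold are candidates for a summary sentence, subheadings, or a list, not proof of a problem on their own.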

Here’s an example of a web page that is basically just a “wall of text,” and you know what? Not only does it not work well in traditional SEO, but it most certainly does not help this brand get discovered by LLM agents.

If your website buries its most important value under visual clutter, it may not be processed by any agentic system. In my experience, these agentic platforms prioritize information (passages) over visuals. According to ChatGPT 4o, agents focus on clarity, authority, structure, and freshness. Structure also includes structured data markup.

Most good websites are designed for human browsing. AI systems do not browse; they act, simulate decisions, filter, and route.

If the answer isn't yes to all these questions, your website has clear SEO opportunities that will feed AI agents.

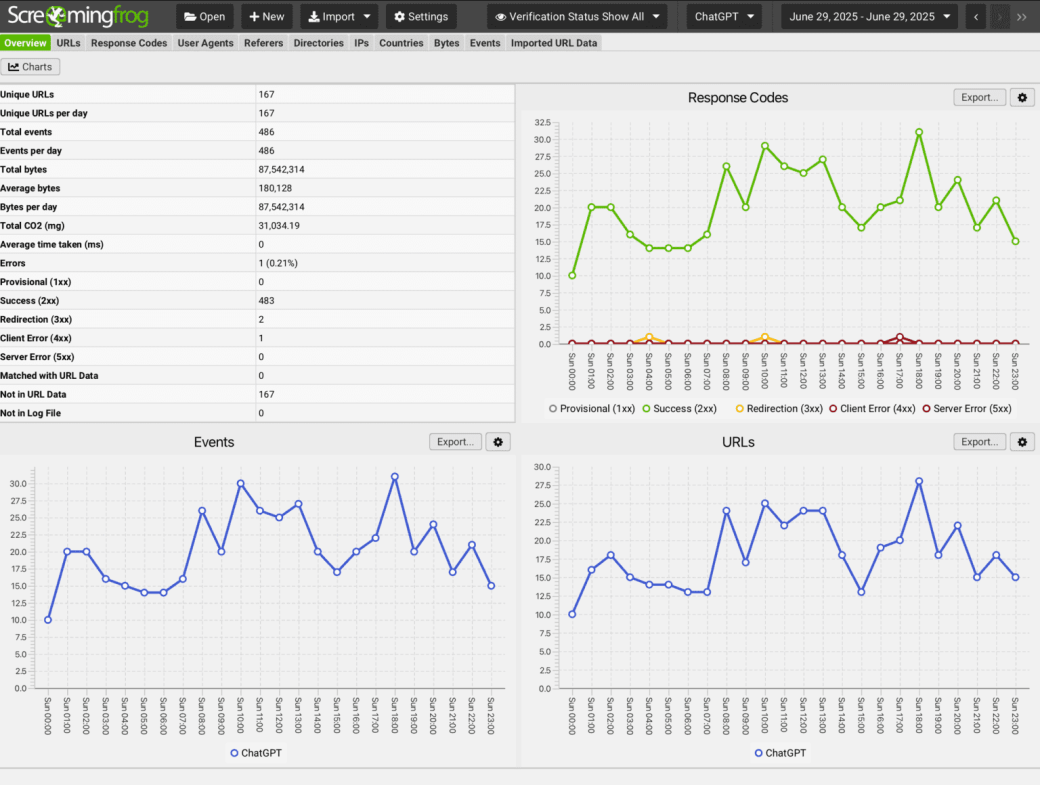

Analytics can be misleading. You might check CTR, bounce rate, or scroll depth and think everything is fine, but these metrics were designed to measure human behavior, not AI agents. There are some workarounds, such as reviewing server logs with tools like Screaming Frog's Log File Analyser.
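If you want a first look at server logs before reaching for a dedicated tool, a short script can tally requests by AI agent. This sketch assumes the common "combined" access log format (request, status, bytes, referer, user agent). The agent tokens and the sample log lines are fabricated for illustration, not taken from real traffic.

```python
import re
from collections import Counter

# Illustrative AI crawler tokens (assumption: verify against vendor docs).
AI_AGENT_TOKENS = ("GPTBot", "PerplexityBot", "ClaudeBot")

# Matches: "METHOD /path PROTOCOL" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"'
)

# Made-up sample lines in combined log format.
SAMPLE_LOG = [
    '203.0.113.5 - - [18/Dec/2025:10:00:00 +0000] "GET /pricing HTTP/1.1" 200 5120 "-" "ExampleAgent GPTBot/1.0"',
    '203.0.113.5 - - [18/Dec/2025:10:05:00 +0000] "GET /pricing HTTP/1.1" 200 5120 "-" "ExampleAgent GPTBot/1.0"',
    '198.51.100.7 - - [18/Dec/2025:10:06:00 +0000] "GET /blog/post HTTP/1.1" 200 9000 "-" "ExampleAgent PerplexityBot/1.0"',
    '192.0.2.9 - - [18/Dec/2025:10:07:00 +0000] "GET /pricing HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
]


def count_agent_hits(lines) -> Counter:
    """Count requests per (agent token, path) for user agents containing a known token."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match:
            continue  # skip lines that are not in the expected format
        user_agent = match.group("ua").lower()
        for token in AI_AGENT_TOKENS:
            if token.lower() in user_agent:
                hits[(token, match.group("path"))] += 1
    return hits


if __name__ == "__main__":
    for (agent, path), count in count_agent_hits(SAMPLE_LOG).most_common():
        print(f"{agent} {path} {count}")
```

User-agent strings can be spoofed, so treat counts like these as a rough signal of which pages agents request, not as exact measurements.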

AI agents don’t click, scroll, or fill out forms. They evaluate your page, extract what they need, and move on.

You don’t need to overhaul your entire site. If you have invested in SEO, you are in a good position. However, you must refine your structure and rethink how each page communicates intent.

You are no longer just optimizing for rankings but for LLM inclusion. You want to be part of the dataset that AI agents use to form answers. You want to be selected, not just indexed.

SEO is not obsolete; it's essential. Without it, you likely won't even be considered.

You can't gain visibility in LLMs without making your content crawlable, trustworthy, and machine-readable. Accuracy is important, but LLMs crave your personal point of view and experience.

Now, discoverability has to serve two audiences: humans and machines. Machines determine what humans see.

Many of your competitors are likely not considering this yet. They still focus on optimizing blog posts for long-tail keywords, hoping for rankings that more often than not attract clicks.

This is your opportunity. If you shift focus now, you won't just maintain your position; you'll gain ground while your competitors fall behind without noticing.

For the most part, Google algo changes are rolled out slowly over a 2-4 week period. Over the last 2-3 years, however, these updates have become much more robust and roll out much faster. LLM agents, on the other hand, have evolved rapidly over the course of just the last two months and will continue to evolve.

Agents are already crawling your content. AI systems are influencing visibility. The shift is already live and will continue to evolve very quickly.

If your site isn't built for agentic use, it won't be effective for agentic users, and the adoption of LLM usage is growing rapidly. And when I say rapidly, it’s probably the understatement of the century. Take a look at this data:

According to an Elon University survey, around 52% of U.S. adults reported using LLMs like ChatGPT, Gemini, or Claude as of March 2025.

The window to adapt to LLMs is still open, but it’s closing fast. The sooner you align your web strategy with agentic expectations, the more likely it is that your content will stand out in an AI-shaped digital landscape.

Agentic web optimization is the next step in our approach to web development and search optimization. The rise of autonomous agents and AI systems means your digital presence must now serve two audiences: human users and the intelligent agents shaping digital interactions. This change is not just about keeping up with search algorithms; it’s about creating a user experience that is accessible, actionable, and valuable to both humans and machines.

AI tools and agents are now part of everyday digital life. The rules of visibility have changed. Top search engine results still matter A LOT, but they aren’t the only way to gain visibility.

Agentic web optimization involves ongoing improvement, constantly refining your digital strategy to ensure your content is not just found but chosen and highlighted by AI systems. In this environment, staying visible means embracing the agentic web and optimizing for the intelligent agents that shape visibility.

Artificial intelligence is the driving force behind modern web optimization. AI agents and autonomous systems analyze complex tasks, interpret user intent, and execute actions with staggering precision, reshaping digital visibility. By utilizing large language models and machine learning, these intelligent systems generate insights beyond what traditional analytics provide.

For businesses, this means technical SEO is no longer solely about information architecture or JavaScript rendering, although those are critical. It’s about structuring your site so that both human users and AI agents can navigate it smoothly. AI-driven optimization uses performance metrics to find what works, adapting content and site design in real-time.

The outcome? Websites that are more responsive to user needs and more appealing to the AI systems determining which content rises to the top. Adopting AI in your optimization strategy is crucial for remaining relevant and competitive in a world where machines control digital interactions.

Autonomous agents like AI chatbots, summarizers, and task runners are changing how people access and act on information online. These systems can generate responses, complete tasks, and interact with users or other agents, often without much human input. They work best when fed clean, structured data and backed by solid models that get updated regularly. As more of them get built into search engines and platforms, they’re deciding what content gets seen and what gets ignored.

Multi-agent systems take this further by enabling groups of agents to collaborate and achieve complex goals that a single agent cannot tackle alone.

For businesses, this means your website must cater to more than just human visitors; it needs to be optimized for agents anticipating clear, structured information and the ability to perform tasks seamlessly. The more your site accommodates these autonomous agents through structured data, actionable elements, and solid analytics, the more likely you are to maintain visibility and relevance in the advancing digital environment.

AI platforms are the backbone of agentic web optimization, providing the infrastructure that enables autonomous agents to function at scale. These platforms often employ specialized agents tailored to specific tasks, from content management to automated content generation.

By integrating AI platforms with your content management systems and digital tools, you harness the full potential of agentic AI, streamlining workflows, refining your digital strategy, and enhancing your search rankings.

The true power of these platforms lies in their ability to coordinate multiple agents, ensuring your site is continually optimized for human users and the agentic systems driving discovery. As the agentic web evolves, leveraging AI platforms is increasingly strategic. They allow you to adapt quickly, implement advanced optimization techniques, and maintain a competitive edge in search results that intelligent agents increasingly curate.

Agentic AI and autonomous agents have transformed the rules of search engine optimization. While traditional SEO is focused on pleasing human users and search engines, agentic SEO requires a different approach: optimizing for the AI agents that now filter, summarize, and recommend content. This involves adopting natural language processing to ensure your content is contextually relevant and high-quality and implementing protocols such as the Model Context Protocol (MCP) so agents can communicate effectively with your site.

Traditional SEO is still critical and includes:

They form the foundation for LLM agents to understand, interact with, and trust your content. Agent optimization is about making your site not just visible but also usable and actionable for intelligent systems.

As the agentic web becomes commonplace, businesses that adjust their SEO strategies to prioritize AI-driven interactions will retain visibility, authority, and high search rankings in a rapidly changing digital landscape.